Executive summary

Based on a multi‑dimensional analysis of developer activity, user metrics and builder sentiment, the report concludes that Arbitrum is losing momentum to Base and Solana in attracting small, experimental teams while remaining a strong venue for institutional DeFi projects. We compared chains using Electric Capital data on open‑source repositories and active developers, verified‑contract counts, daily active users (DAU/MAU), throughput and capital flows, complemented by a survey of 22 builders and a post‑mortem on a hackathon continuation program.

The metrics consistently favoured Base and Solana. Electric Capital’s taxonomy shows Base and Solana still growing linearly in repository count while Arbitrum and Optimism exhibit an S‑curve plateau; Base has already surpassed Arbitrum’s repository count despite being live for a shorter time. Full‑time and established developer counts have risen 40 % on Base but fallen 34 % on Arbitrum. Verified contract deployments, though noisy, further underscore the divergence: Base routinely outpaces Arbitrum and Optimism, helped by programmes like Onchain Summer and by structural support from Coinbase, the leading centralized exchange, whereas Arbitrum’s daily verified contracts have been trending down since 2024.

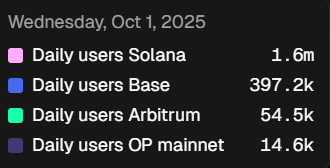

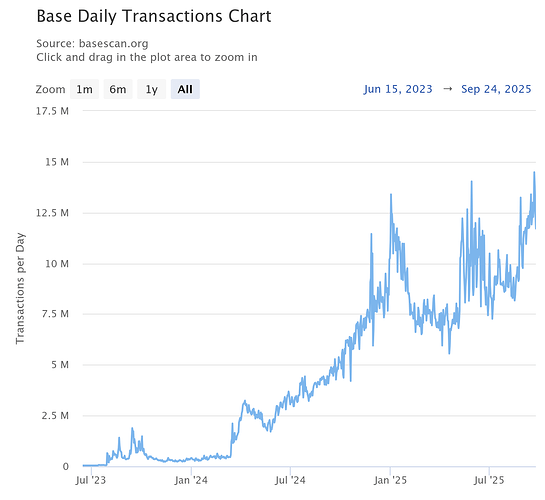

On the user side, Solana averages about 1.5 m daily active addresses, double Base’s and roughly five times Arbitrum’s ≈300 k. Base also leads the EVM L2s in transaction counts and throughput growth, aided by deliberate engineering choices to scale to 250 Mgas/s by late 2025, whereas Arbitrum and Optimism lag on throughput. Arbitrum still records the largest capital flows because of its DeFi focus, but much of the inflow is attributable to bridging to a single venue, Hyperliquid. In account‑abstracted wallet adoption, Base again dominates, reflecting Coinbase’s consumer‑oriented tooling.

A comparative look at developer‑relations efforts shows why momentum diverges. Base leverages Coinbase’s reach through reward‑driven campaigns, identity services and mobile‑oriented SDKs. Solana combines high‑performance tooling with extensive education programs and AI‑assisted support. Arbitrum’s program still centres on grants and an incubator, with a growing focus on Rust/C/C++ support (Stylus), and Optimism emphasises retroactive public‑goods funding. Survey responses highlight that liquidity and business‑development support drive chain selection, while grant availability ranked last among launch drivers. Respondents valued co‑marketing and portal placement over small grants and often perceived Arbitrum chiefly as a DeFi chain or were unaware of its grant programme. Interest in structured incubation scored positively, suggesting that better advisory services could help projects meet feasibility and sustainability criteria. A separate hackathon pilot underscored that low marketing budgets and a hackathon‑first sourcing model attract low‑commitment teams; the report advocates larger initial investments ($100–150 k), research‑driven RFPs and better community building.

In sum, Base and Solana currently offer the most vibrant environments for small-team experimentation across both developer and user metrics, while Arbitrum remains a DeFi-centric hub with less traction among new builders. To reverse this trend, we recommend that Arbitrum invest in user-experience improvements, expand developer outreach beyond DeFi, increase or improve the grant program and marketing spend, and provide structured incubation and business-development support to help small teams convert ideas into sustainable deployments.

Builder Momentum: Comparable Proxies

To understand builder momentum on different chains, we used several proxies to gauge general activity over the last two years. We compared Arbitrum against Base, Optimism and, to a lesser extent, Solana, for which less verifiable data is available.

We looked at the number of open-source protocols deployed on each chain over time (Crypto Ecosystems), active developers (Developer Report), and verified contracts (from each chain’s own block explorer).

One caveat: verified-contract counts can be noisy, since protocols like Manifold, Zora, Farcaster and Clanker add a lot of verified contracts to Base. Combined, however, the metrics paint a picture of where builders are generally gravitating.

Protocols deployed over time

This metric is maintained by Electric Capital. It is a taxonomy of open-source repositories from blockchain, cryptography and decentralized ecosystems. The charts show the growth in the number of ecosystems and repositories over time. Arbitrum and Optimism show an S-curve in growth, whereas Solana and Base are still growing somewhat linearly. Remarkably, Base has overtaken Arbitrum’s current repository count despite being live for a shorter time. At the same time, one could argue that Arbitrum’s growth is more organic, since Base has a central entity behind it, Coinbase, that can push for specific spikes in the metrics.

NB: The dip and recovery in repos are due to a cleanup of old/multichain repos done by Electric Capital.

Ecosystems

High-level categories like Bitcoin or Ethereum that describe communities or projects. They form the structure of the taxonomy rather than pointing to specific codebases.

Repositories

GitHub projects such as OffchainLabs/arbitrum that are attached to exactly one ecosystem. They supply the actual code content that fills the ecosystem structure.

Active developers

Over the last two years, the total number of developers across all chains and crypto in general has decreased by 4%. Interestingly, the number of full-time developers has increased by 9%, while the number of part-time developers has decreased sharply. Looking at each ecosystem individually, Base has grown significantly, up 40%, even overtaking Arbitrum in both full-time and established developers. Both Arbitrum and Optimism saw double-digit percentage decreases, with Arbitrum losing a staggering 34% and Optimism 18%. Solana, on the other hand, is the big winner, with the highest number of full-time developers and an increase of 62%.

Electric Capital Methodology

NB: Full time devs are developers who commit code 10+ days out of a month.
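The full-time threshold in the note above can be sketched in Python. This is only an illustrative reading of the rule: the function name, the input shape (a list of commit dates for one developer) and the sample dates are assumptions, not Electric Capital's actual pipeline.

```python
from collections import defaultdict
from datetime import date

FULL_TIME_MIN_DAYS = 10  # threshold quoted in the NB above


def full_time_months(commit_dates):
    """Return the (year, month) pairs with commits on 10+ distinct days."""
    days_per_month = defaultdict(set)
    for d in commit_dates:
        # A set ignores multiple commits made on the same day.
        days_per_month[(d.year, d.month)].add(d.day)
    return sorted(
        month for month, days in days_per_month.items()
        if len(days) >= FULL_TIME_MIN_DAYS
    )


# Hypothetical developer: 12 distinct commit days in March, 3 in April.
sample = [date(2025, 3, day) for day in range(1, 13)]
sample += [date(2025, 4, day) for day in (2, 2, 9, 20)]
print(full_time_months(sample))
```

Counting distinct days rather than commits matters here: a developer who pushes twenty commits in one afternoon still contributes a single active day.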

Verified contracts

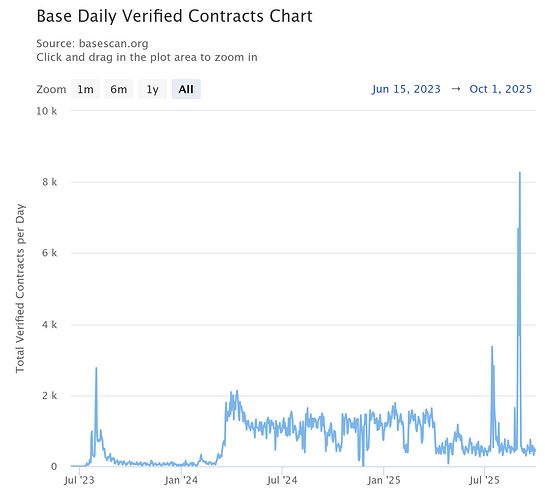

What this metric shows is that even though Arbitrum and Optimism are much older (live since 2021), Base outpaces both in total contracts deployed, total contracts verified, and 24h contracts deployed (and verified). There could be multiple reasons for this, some of which we have already named. Clanker 4.0 on Base, for example, has deployed over 107K verified contracts in 103 days; V3 deployed 140K, V2 44K and V1 11K.

There are multiple spikes in daily verified contracts on Base (July 14th and September 2nd), which could be attributed to the start and end of “Base Onchain Summer”, which awarded $250,000 in total prizes for top apps, breakout new apps, and other categories.

Both Arbitrum and Optimism have shown a downward trend in daily verified contracts since 2024. As mentioned above, verified contracts in isolation don’t paint a full picture, as the metric can be noisy, but it does signal that there is activity on the chain, which could persuade developers to launch there.

Source: Arbiscan.com

Source: Basescan.com

Source: optimistic.etherscan.io

As mentioned above, verified contracts are not a reliable metric from which to draw strong conclusions in isolation; they are, nonetheless, an objective data point. Even after filtering out specific events that certainly drive outlier activity, such as Base’s Onchain Summer or the announcement of a possible token, the picture is that Arbitrum’s verified contracts are flat or declining year over year, while Base still sees events that, although they could be framed as “inorganic”, spur developer activity.

Snapshot most recent verified contracts

The snapshot is not conclusive about what kind of contracts are being verified or deployed, but alongside other signals it does align with the idea that most of the contracts being deployed on Base are tokens (nearly 50% of the page), whereas Arbitrum’s verified deployments have a more general DeFi tone.

Arbitrum

Base

Optimism

Conclusion for Builders

Small-team momentum and contract deployment velocity appear strongest on Base right now. While Arbitrum’s headline protocols remain strong, its small-builder on-ramp looks thinner, with fewer active devs than a year ago. These metrics don’t conclusively show that one ecosystem is better than another for smaller builders, but they do show that builders are gravitating more towards Base. Information on verified contract deployments, or programs, on Solana isn’t aggregated, but the crypto-ecosystems repos show that it is the biggest ecosystem for builders when counting repos.

The takeaway is that Base currently has more momentum, but Solana still offers a larger ecosystem; Arbitrum is strong for institutional DeFi, but doesn’t carry the same strength for mid-sized to small projects and builders. However, deployments are meaningful only when matched by active users and throughput, which we explore in the following section.

User Metrics

On-chain metrics are important for developers because they indicate whether it makes sense to deploy on a certain chain. The metrics that matter are the number of users on a chain, whether the chain is attracting and transacting value (flows/TVL), how much throughput (gas/s) the chain has, and what native support developers can expect.

DAU/MAU

Active addresses are commonly used to gauge engagement on a blockchain, though the metric isn’t perfect since one person can have multiple addresses. While it gives an overall sense of network activity, it can be inflated through Sybil attacks, where many fake addresses are created to skew the data, especially when users farm certain networks in the hope of an airdrop.

The data suggests that Solana is still the most active chain for users, with about 1.5m daily active addresses, double Base’s count. Arbitrum has about 20% of Solana’s users at 300k, and Optimism trails far behind at fewer than 100K.

Source: Growthepie.com

Source: tokenterminal.com/

TX counts/fees/gas usage

TX counts

The total number of transactions on a blockchain is a useful measure of activity, but it doesn’t tell the whole story. A network with fewer transactions might still be moving far more value because it’s heavily used for high‑value DeFi trades, while a chain supporting gaming or other low‑value use cases could see a high transaction count with relatively small amounts of capital involved. Therefore, transaction volume should be considered alongside the types and value of transactions to understand actual usage. Base is leading in user activity here when ignoring Solana.

Source: Growthepie.com

Source: tokenterminal.com

NB: Source on voting/raw transactions solanacompass.com

While provided here for the sake of completeness, we don’t think Solana’s transaction count is a useful metric: it is much harder to gauge because it is inflated by validators reaching consensus by voting on chain (80%-90% of transactions are usually votes), and the chain’s low fees make it prone to spam. Even with those considerations, the transaction count is far higher than Base’s, at over 100 million daily transactions.
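To make the vote adjustment concrete, the rough Python sketch below strips the quoted 80%-90% vote share from a daily total. The function name is hypothetical, and the 100 million input is the floor quoted above rather than a measured daily figure.

```python
def non_vote_range(total_daily_tx, vote_pct_low=80, vote_pct_high=90):
    """Return (fewest, most) non-vote transactions for a vote share given in percent."""
    # Integer arithmetic keeps the result exact for whole-number percentages.
    fewest = total_daily_tx * (100 - vote_pct_high) // 100
    most = total_daily_tx * (100 - vote_pct_low) // 100
    return fewest, most


fewest, most = non_vote_range(100_000_000)
print(f"Non-vote transactions per day: {fewest:,} to {most:,}")
```

Under these assumptions, roughly 10 to 20 million daily transactions remain once votes are excluded, and that remainder still includes any spam.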

Gas usage

Building on transaction count, we can look at gas usage, or throughput, which is a more precise indicator of scalability because it measures the network’s overall compute capacity rather than simply counting transactions. Transactions vary greatly in complexity, from roughly 21,000 gas for a basic ETH transfer to around 280,000 gas for even a simple Uniswap swap. Throughput directly reflects how much work a blockchain can process and how close it is to its performance limits. For app developers and Layer 2 teams, this metric is indispensable for gauging growth potential, estimating costs, and understanding where bottlenecks might emerge.
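A short Python sketch shows why the same throughput implies very different transaction counts. The gas costs are the approximate figures quoted above; the 25 Mgas/s input is only an illustrative value, and the function name is an assumption.

```python
GAS_ETH_TRANSFER = 21_000   # approximate cost of a basic ETH transfer
GAS_SIMPLE_SWAP = 280_000   # approximate cost of a simple Uniswap swap


def tx_per_second(throughput_mgas_per_s, gas_per_tx):
    """Transactions per second a chain could fit at a given gas throughput."""
    return throughput_mgas_per_s * 1_000_000 / gas_per_tx


throughput = 25  # Mgas/s, illustrative only
print(f"Transfers/s: {tx_per_second(throughput, GAS_ETH_TRANSFER):.0f}")
print(f"Swaps/s:     {tx_per_second(throughput, GAS_SIMPLE_SWAP):.0f}")
```

At the same gas throughput, a chain fits roughly thirteen times as many plain transfers as swaps, which is why raw transaction counts can mislead when the workload mix differs between chains.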

Base’s throughput has been rising steadily since February, when Base began focusing on scaling the chain with a north star of reaching 1 Ggas/s; it is poised to achieve 250 Mgas/s by the end of 2025. Both Arbitrum and Optimism have been lagging behind in throughput, which could be a reason for developers to deploy on Base instead.

Source: Growthepie.com

It is interesting to note how Base’s engineers realised that running with a 35 Mgas/s target was creating problems: actual demand was lower, so fees stayed too cheap, the network attracted spam, and fee spikes were poorly managed, forcing users to pay extra priority fees. They also found that at this level the rollup was brushing up against Geth performance limits and Ethereum’s data‑availability capacity. To fix this, they deliberately lowered the gas target to 25 Mgas/s and raised the gas limit to 75 Mgas/s, increasing the elasticity ratio. This change makes fees more responsive during congestion, discourages spam and priority‑fee bidding, and keeps node operators and L1 resources within safe bounds. Read more here.
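The effect of a higher elasticity ratio can be sketched with the EIP-1559 base-fee rule, under which the fee rises in proportion to how far a block exceeds the gas target. The Python below is a minimal illustration, not Base's implementation: the function names are hypothetical, and the change denominator of 8 is Ethereum mainnet's value, used here only as an assumption since Base's own parameter may differ.

```python
def elasticity_ratio(gas_limit, gas_target):
    """Ratio between the maximum gas allowed and the gas the fee market aims for."""
    return gas_limit / gas_target


def max_fee_increase_per_block(gas_limit, gas_target, change_denominator=8):
    """Largest fractional base-fee rise when a block is completely full.

    EIP-1559 style rule: delta = (gas_used - target) / target / denominator,
    evaluated at gas_used == gas_limit.
    change_denominator=8 is Ethereum mainnet's value (an assumption for Base).
    """
    return (gas_limit - gas_target) / gas_target / change_denominator


# Figures from the paragraph above, in Mgas/s: target 25, limit 75.
print(elasticity_ratio(75, 25))
print(max_fee_increase_per_block(75, 25))
```

With a ratio of 3 instead of 2, a full block sits further above the target, so the base fee can climb faster per block during congestion. That is the responsiveness the change is meant to buy.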

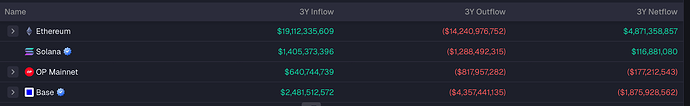

Flows (bridge net flows)

As for value flows, the net flows of all chains except Solana are actually down. Interestingly, Arbitrum has the biggest flows, which is reasonable for a chain known for DeFi, but what is glaring is that more than half of the inflow is due to users bridging to Hyperliquid (net flow of -$5.9B, with inflows of $42B and outflows of $48B). For Base, the main net flow is -$4.7B to ETH. For Optimism, the net flows are negligible compared to the other two. What is interesting for Optimism, however, is that the biggest inflows came from what are now considered ‘dead chains’: Zksync ($128M), Blast ($101M), Scroll ($57M) and Mode ($32M). Finally, the biggest net outflow for Solana is to Arbitrum ($214M).
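Net flow is simply inflows minus outflows, as the small Python sketch below shows with the rounded figures quoted in parentheses above. The function name is hypothetical. Because the inputs are rounded to the nearest billion, the result lands near, not exactly on, the reported -$5.9B.

```python
def net_flow(inflows_bn, outflows_bn):
    """Bridge net flow in billions of USD: positive means capital is arriving."""
    return inflows_bn - outflows_bn


# Rounded figures from the text: $42B in, $48B out.
print(f"Net flow: {net_flow(42, 48):+d}B USD")
```

A chain can therefore record the largest gross flows while still losing capital on net, which is the pattern described for Arbitrum.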

Source: Artemisanalytics.com

Account-abstracted wallets (ERC-4337 / EIP-7702)

Another interesting metric is the number of smart accounts on each chain. It can signal what kind of user is on the chain. A high number of smart accounts generally indicates a better UX, as many of the complex activities are abstracted away from the user. This could influence a developer’s decision to deploy on certain chains.

Across all the metrics, Base is again winning. It has the most monthly active smart accounts and the highest number of successful UserOps (a proxy for transactions), and it earns the most fees. The numbers can be explained by Coinbase’s Base Account (previously Smart Wallet), which significantly simplifies the developer experience of integrating wallets into dApps.

As this is EVM related, Solana is ignored.

Source: dune.com/niftytable

Additional information:

https://www.bundlebear.com/eip7702-overview/all

Summary user surface

Across all user‑side indicators (active addresses, transaction throughput and smart‑account adoption), Base and Solana clearly outshine Arbitrum and Optimism. Solana has roughly double the active users of Base (about 1.5 million daily addresses), and Base itself surpasses Arbitrum (≈300 k) and Optimism (<100 k). Base also leads the EVM L2s in transaction counts and throughput growth, while Arbitrum and Optimism have lagged, which may deter developers seeking high‑capacity environments.

In flows, Arbitrum still attracts substantial capital because of its DeFi focus, but much of the inflow is driven by bridging to a single venue (Hyperliquid).

Finally, Base has the largest number of active smart‑account wallets and user operations, a reflection of Coinbase’s consumer‑oriented tooling. Taken together, these metrics paint a consistent picture: Base (and Solana) offer the most vibrant, scalable user bases for new deployments, whereas Arbitrum remains a strong DeFi hub but a thinner destination for experimental teams. That imbalance likely influences where early‑stage builders choose to launch and underscores why better user‑onboarding and throughput on Arbitrum could be crucial to attract small builders.

DevRel

Developer relations (DevRel) is the practice of building a two‑way relationship between a technology provider and the developers who use its products. Effective DevRel teams make it easier for developers to get started by providing clear documentation, helpful SDKs and sample projects. They also run hackathons, office hours and support channels where developers can ask questions and get quick answers. Good DevRel shortens the learning curve, encourages experimentation and fosters a sense of community around a platform. These efforts lead to wider adoption, more polished applications and stronger feedback loops between builders and product teams, ensuring that the platform evolves in ways that meet real‑world needs. Without active engagement, even technically sound protocols may struggle to attract and retain developers.

Developer relations are central to the success of blockchain networks. Base, Arbitrum, Optimism and Solana all recognise that attracting and supporting builders drives network adoption. Base leverages Coinbase’s reach and structured reward programmes to onboard developers; Arbitrum expands its tooling with Stylus and pairs grants with hands‑on incubator support; Optimism’s OP Stack and retroactive funding model incentivise both innovation and public‑goods projects; and Solana couples its high‑performance architecture with improved tooling, AI‑enhanced support and education programmes such as Solana U. While each network has a different philosophy and technical stack, they all invest heavily in documentation, SDKs, community engagement and financial incentives.

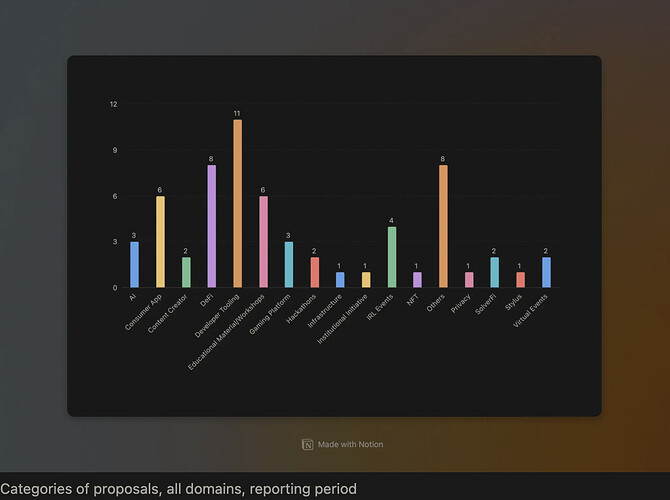

Builder Survey

The survey provides a quick empirical snapshot of how developers view Arbitrum and other ecosystems. To collect this pulse check, we circulated a 13‑question survey across our network and received 22 responses. The questions asked participants to identify their protocol’s stage (emerging, growing or established), indicate whether they currently build on Arbitrum, and choose which chain they would deploy a new project on if starting today. We also asked respondents to rank the importance of factors such as liquidity, business development support, technical fit, grants and community when deciding where to launch. This limited sample is not meant to be exhaustive but offers a directional sense of builder sentiment and priorities. It helps us gauge how developers weigh ecosystem features and what might incentivize them to choose Arbitrum over competing chains.

After analyzing the responses we came to the following conclusions:

- Launch decisions are liquidity/BD-led. The survey mirrors our 6-month funnel: applicants who fail often do so on Feasibility/Milestones and Alignment, not because they don’t want funding. Builders go first where users, liquidity and BD surfaces are easiest to access (Base by a wide margin in this pulse).

- Distribution beats grants. “Grant availability” ranked last of the five launch drivers. Respondents are more sensitive to distribution (co-marketing, portal placement) and co-incentives, exactly the levers they say would change their mind to build on Arbitrum.

- Incubation helps at the margin. Interest in structured incubation is solid (mean 3.68/5). That aligns with our rejection stack: the top failures (Milestones/Feasibility, Sustainability) are exactly what a micro-experiments + light advisory lane can address quickly.

- Awareness and fit gaps remain. A non-trivial slice hadn’t heard of the program, perceived Arbitrum primarily as a DeFi chain, or found the category fit unclear. This is consistent with the category skew we observe (DeFi/AI heavy; fewer “mindshare” verticals like payments or consumer social).

Lessons from the Hackathon Continuation Program

Additional research done by RNDAO revealed important insights regarding Arbitrum’s Hackathon Continuation Program, a pilot that aimed to nurture hackathon winners into viable ventures. Several limitations surfaced:

- Talent attraction and community: The organisers reported that a very small marketing budget and the hackathon format attracted builders with low commitment. They also noted that Arbitrum is perceived to have less entrepreneurial “community” than Base or Solana.

- Hackathons vs. venture building: Hackathon teams often lacked defined problems or validated markets; mentors found that the hackathon mindset is misaligned with customer‑centred venture development. The report recommended abandoning hackathon‑first sourcing and replacing it with research‑driven RFPs and opportunity briefs.

- Funding and talent acquisition: To attract higher‑calibre founders, the programme called for larger initial investments ($100–150k) and follow‑on funding, plus more marketing and community‑building activities. It also advised shifting the focus from product hacks to business validation.

These lessons dovetail with our grant‑funnel diagnostics: most rejected NPAI applications fail on feasibility, sustainability or team validation rather than ideas.

Conclusion: Where the small-team experimentation is (and isn’t)

Our analysis reveals a consistent pattern: metrics of developer momentum (repos, full-time devs, verified contracts) and on‑chain usage (DAU/MAU, throughput, smart accounts) show Base and Solana outpacing Arbitrum in attracting new builders and users. DevRel initiatives support this narrative: Coinbase‑backed marketing and reward programmes have created strong builder mindshare on Base, while Solana’s IBRL (increase bandwidth, reduce latency) narrative has attracted and built a large developer community. In contrast, Arbitrum’s current DevRel caters more to established DeFi/RWA players than to small, fast‑iterating teams, and there are even anecdotal reports of builders unable to find support in official channels.

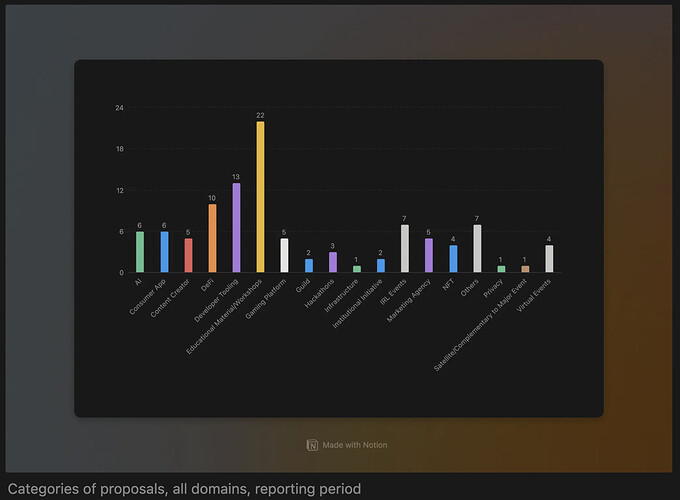

The survey results and hackathon report reinforce that builders prioritise liquidity, distribution and business‑development support over grant size, view Arbitrum primarily as a DeFi chain, and desire structured incubation to help them meet feasibility and sustainability requirements. Most rejected NPAI applications fail on these exact points: unclear milestones and weak feasibility (140 rejections), sustainability gaps (138), lack of Arbitrum alignment (125) and lack of novel ideas (119).

In practice, alignment and novelty frequently surface inside feasibility critiques; the teams applying usually don’t have a concrete delta or an Arbitrum-specific plan. This pattern matches a pipeline heavy on AI and DeFi and light on payments, decentralized social, and other “mindshare” categories, which are exactly the tracks where small teams on hot chains tend to run fast experiments. Additionally, the RNDAO pilot observed that limited marketing budgets and a hackathon‑first approach attracted low‑commitment teams and that Arbitrum is perceived to have a thinner entrepreneurial community than Base or Solana.

To conclude, it seems that Base’s metrics are growing and outpacing Arbitrum in nearly every facet, with visible spikes around programmatic initiatives (e.g., Onchain Summer). Qualitatively, recent “mindshare” initiatives (e.g., external ecosystem grants like Kalshi’s) have targeted Base and Solana rather than Arbitrum, reinforcing a perception that quick, low-friction experiments have better distribution elsewhere. Meanwhile, Arbitrum continues to land institutional-grade partners (e.g., major DeFi/RWA integrations), which strengthens the top of the stack but does not by itself generate a small-builder on-ramp.

Appendix Survey

(Base (10) > Ethereum (2) ≈ Solana (2) ≈ HyperEVM (2) > Arbitrum (1).)

Why would you choose this chain?

This refers to the option they chose above.

- Liquidity and Distribution: High liquidity, better distribution, and a large number of DeFi users are key factors.

- Ecosystem and Momentum: A vibrant ecosystem, momentum behind the chain, and a focus on DeFi are appealing.

- Growth Opportunity: Chains with realistic growth opportunities, strong support, and emerging ecosystems with missing solutions are preferred.

- Specific Chain Preferences: Mentions of Ethereum for serious DeFi apps, Base for consumer-focused apps due to its affiliation with Coinbase and institutional comfort with yield farming, and Solana for its active community despite concerns about Rust.

- Other Factors: Exposure, capital, cheap fees, and being an EVM-compatible chain are also considerations.

Have you considered applying to the Arbitrum DAO grant? If not, why?

- Awareness and Information: Several respondents were unaware of the Arbitrum DAO grant program, suggesting a need for better communication and outreach.

- Grant Size and Relevance: Many felt the grant size (e.g., $50k) was too small or not relevant for their projects, especially for growth-stage teams or those with higher cost opportunities.

- Application Difficulty and Milestones: Some found the milestones or application process too challenging or not worth the effort for the grant amount.

- Project Fit and Scope: Some believed the grant categories didn’t fit their dApp profile, or that the grant was better suited for smaller projects. There was a perception that Arbitrum is primarily a DeFi chain.

- Past Interactions and Alternative Support: Some had received past support from the Arbitrum Foundation or benefited from other initiatives like audit subsidies and co-marketing, while others considered alternative programs.

If other reasons are checked, please provide below.

- Retail interest and ecosystem activation: One response mentioned the importance of amassing retail interest and ecosystem-wide activation.

- Fairness and equal opportunity: A fair and equal playing field for all applying projects was highlighted as important.

- Dedicated support and compensation: One respondent emphasized the need for dedicated liquidity and support from the network, and adequate compensation for teams that might need to put other projects on hold.

- Exclusivity requirements: Hard requirements like exclusivity were noted as detrimental due to clashes with fiduciary responsibility.

What single change would most increase your likelihood of applying for an Arbitrum grant?

- Increased Grant Size and Support: Many respondents indicated that a larger grant size (e.g., 150-200k) and direct participation from the DAO and Arbitrum protocols/partners in pushing the resulting projects would significantly increase their likelihood of applying. This includes providing marketing, VC introductions, and helping projects gain momentum.

- Improved Process and Communication: Several responses highlighted the need for a single point of contact and easier milestone requirements. Streamlining the KYB/KYC process, which has faced technical issues and data breaches, was also a significant concern, with a desire for a one-time submission.

- Alignment and Ecosystem Growth: Alignment on multi-chain deployment strategies was a key factor for some builders. There’s also a desire for a continuously running grant system based on performance, a growing DeFi ecosystem, and increased focus on specific DeFi verticals like options.

- Commitment and Visibility: Respondents expressed a need for Arbitrum to demonstrate a stronger commitment to its grant program, ensuring it’s not perceived as a “side quest” and is better integrated with exchanges and retail distribution channels to put Arbitrum back in the spotlight.
