This post marks the official start of the D.A.O. Grant Program Season 3!

The Arbitrum D.A.O. (Domain Allocator Offering) Grant Program is a one-year program divided into five domains, designed to be the entry point for grants in the Arbitrum ecosystem and to support builders aligned with the vision of the DAO. As per the Snapshot and Tally votes, the program will support the following areas:

- New Protocols and Ideas: a general bucket encompassing protocols, platforms, governance tooling, and other projects that don’t specifically fall into other domains

- Education, Community Growth and Events: a domain focused on physical events and educational materials for Arbitrum

- Gaming: a domain dedicated to web3 gaming infrastructure, web3 KOL gaming activities, and all video gaming-related projects.

- Dev Tooling on One and Stylus: a technical domain oriented toward developer tooling and promoting Arbitrum One and Stylus adoption.

- Orbit Chains: a domain oriented toward the expansion of dApps into specific Orbit chains, the deployment of technical solutions aimed at addressing the current user experience fragmentation, and, in general, the bootstrapping of solutions built on top of the 2024-2025 Offchain Labs roadmap

For further info on the five domains, check the related page in the information hub. Note that, since the program is modular in nature, the DAO may vote in the future to add further domains to the current list.

Teams that are interested can apply here: https://arbitrum.questbook.app/

FAQ

How does the grant process work? Is there a maximum amount I can ask?

After creating a wallet in Questbook, you can submit a proposal in one of the five domains, following the appropriate template. Requests of up to 25,000 USDC are reviewed solely by the Domain Allocator managing that domain. Requests above that amount, up to 50,000 USDC, also require review by a second Domain Allocator.
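As a rough illustration of the review thresholds described above, here is a minimal sketch. The function name `reviewers_required` is hypothetical, and two points are assumptions rather than program rules: a request of exactly 25,000 USDC is treated as single-review, and anything above 50,000 USDC is treated as outside the range this FAQ describes.

```python
from decimal import Decimal

# Thresholds taken from the FAQ text (in USDC).
SINGLE_REVIEW_CAP = Decimal("25000")
DUAL_REVIEW_CAP = Decimal("50000")


def reviewers_required(requested_usdc):
    """Return how many Domain Allocators review a request (hypothetical helper).

    Assumption: exactly 25,000 USDC still counts as a single-review request.
    Assumption: amounts above 50,000 USDC are not covered by this FAQ, so
    the sketch raises an error instead of guessing.
    """
    amount = Decimal(str(requested_usdc))
    if amount <= 0:
        raise ValueError("requested amount must be positive")
    if amount <= SINGLE_REVIEW_CAP:
        return 1
    if amount <= DUAL_REVIEW_CAP:
        return 2
    raise ValueError("requests above 50,000 USDC are not covered by this FAQ")


if __name__ == "__main__":
    for ask in (10000, 25000, 40000, 50000):
        print(ask, "USDC ->", reviewers_required(ask), "reviewer(s)")
```

The information hub remains the authoritative source for the actual review rules.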

Once your proposal is submitted, you will receive a response directly in the comment section of your proposal.

For more details, please refer to the guide on how to apply and the FAQ.

Where can I find the RFP and the Rubric of each domain?

All information about the program, including the RFP for each domain, the rubrics, and the KPIs, can be found in the information hub we have created: https://arbitrumdaogrants.notion.site/. We followed a structure similar to the one used in the UAGP program and incorporated some content from it, as it proved to be highly effective. (Thanks @Areta, for the awesome work!)

What is the procedure to apply for a grant?

- Go to arbitrum.questbook.app

- Create a wallet

- Choose one of the domains and click “Submit New” in the top left corner.

- Complete the form, ensuring you answer every question.

For more information on how to create a wallet in Questbook, please check here.

For details on the application process, please refer to the “How to Apply” section.

Who is the team running the program?

You can find more info on the team here.

The program is led by Jojo, who is the program manager; the domains are managed by Castle Labs, SeedGov, MaxLomu, Flook and Juandi.

I have other questions that were not covered here. Where can I find the info?

We have created a dedicated FAQ section in the information hub. If you still have any questions, feel free to reach out to PM Jojo! You can find the contact details here.

How can the delegates track the progress of the program?

First and foremost, everyone is welcome to ask questions in this thread or contact the PM directly here or on Telegram.

The PM will also post a report in the forum on a monthly basis, mid-month, to highlight the current status of the program. Additionally, this information will be shared during GCR calls. All reports will be aggregated in the reports section of the information hub.

Both the written reports and verbal updates, as well as any oversight on the program, may be adjusted in the future to align with any new framework that is voted on and approved by the DAO.

Is the program going to change throughout the year?

The program has been approved to run for one year, until March 2026, or until the allocated funds are depleted.

Given the rapid evolution of the crypto space, we anticipate adapting the program and RFPs over time to align with the overarching goals set by the DAO, starting with the SOS proposals. This does not mean there won’t be room for innovation or experimentation. However, the entire team agrees that our primary objective is to support the DAO in achieving high-level goals that delegates, the Foundation, and OCL collectively recognize as valuable.

One example of such a change is the more active role given to Castle Labs to do BD for the “New Protocols and Ideas” domain. This was a response to the poor deal flow that led to a lower approval rate in what is the generalist domain of the program, and to the subsequent enquiries made by some delegates.

Where can I find all the resources, info and links?

- The main information hub in Notion will be constantly updated over time with new info, updated RFPs, data and reports

- This very thread will be the main communication channel with the DAO

- The Questbook Discord is the point of contact for grantees that have further questions on the program and want to communicate in a more agile way

MONTHLY REPORTS

1st Report - 17th March 2025 to 17th April 2025

2nd Report - 18th April 2025 to 17th May 2025

3rd Report - 18th May 2025 to 14th June 2025

4th Report - 15th June 2025 to 15th July 2025

5th Report - 16th July 2025 to 17th August 2025

6th Report - 18th August 2025 to 19th September 2025 - Mid Term Report

7th Report - 20th September 2025 to 15th October 2025

The seventh monthly report is live on our website!

TLDR:

- reference period: 20th of September to 15th of October

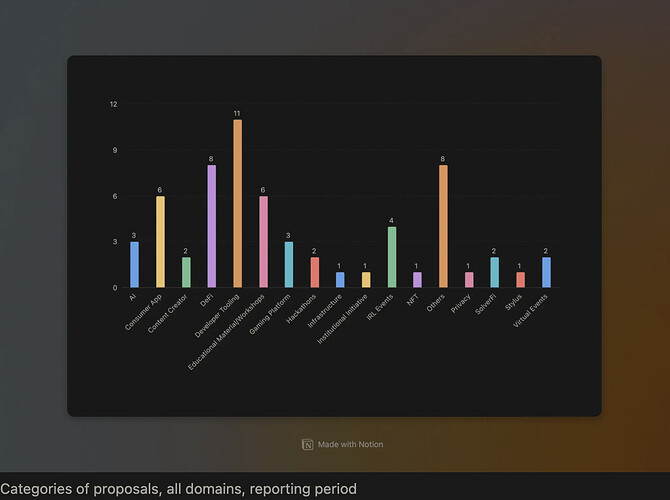

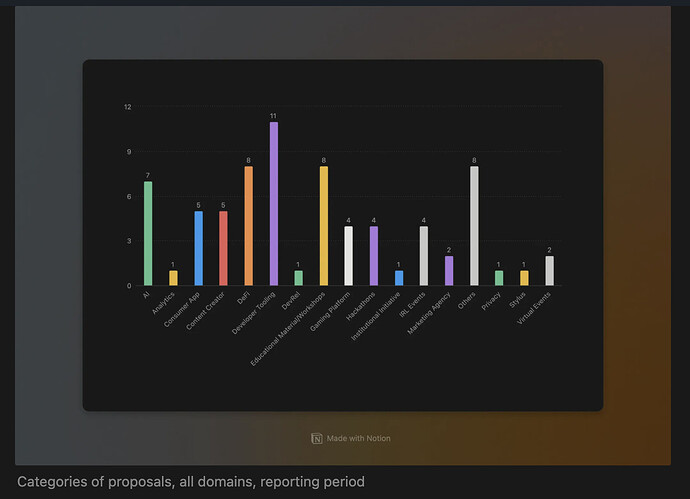

- 727 proposals in total, of which 99 were approved and 20 were completed

- for the second month in a row we are seeing several milestones being completed: 25% of the whole program in the last 30 days, signalling we are moving as expected into the more mature phase of the grant

- the team's focus this month has been managing the mid-term report, the call with the DAO, and the deliverables from both the whole team and Castle for the research

- the focus for the next month is preparing the post-grant interviews for completed projects
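For readers who want to turn the headline counts above into rates, here is a minimal sketch of the arithmetic. The helper `rate` is hypothetical, and it assumes the 20 completed proposals are a subset of the 99 approved ones, which the summary implies but does not state outright.

```python
def rate(part, whole):
    """Return part/whole as a percentage rounded to one decimal place."""
    if whole <= 0:
        raise ValueError("whole must be positive")
    return round(100 * part / whole, 1)


# Counts quoted in the seventh report TLDR.
total_proposals = 727
approved = 99
completed = 20

# Share of all proposals that were approved.
approval_rate = rate(approved, total_proposals)

# Share of approved proposals already completed
# (assumption: completed proposals are a subset of approved ones).
completion_rate = rate(completed, approved)

if __name__ == "__main__":
    print(f"approval rate: {approval_rate}%")
    print(f"completion rate among approved: {completion_rate}%")
```

With the figures above, this gives an approval rate of 13.6% and a completion rate among approved proposals of 20.2%.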

8th Report - 16th October 2025 to 17th November 2025

9th Report - 18th November 2025 to 1st January 2026

SPECIAL REPORTS

- Mid-term report: after 6 months of running the program, the D.A.O. Grant Team has evaluated the flow of proposals, the gaps and strengths of the program, the results achieved so far, and how to better integrate with the DAO. We think this report is valuable, in part because most of the team has been active in the DAO and in the grants vertical in Arbitrum for several years. It is available both in a shortened version and as a long-form PDF.

- Qualitative analysis of builders in Arbitrum and in other chains: in this report, Castle Labs has analysed, as part of its BD mandate, the status of builders in Arbitrum. While the report is qualitative in nature, it draws on both onchain metrics and other publicly available dashboards to understand whether there is a lack of small to mid-size builders on our chain, which are the targets of the D.A.O. Grant Program. The report is available both in the forum and in PDF format.

- Active BD report: in this report, Castle Labs analyses the results of the active BD phase undertaken following the request of the DAO, as highlighted in this specific September update.

- BD activity report of Castle Labs for the quarter in the New Protocols and Ideas domain

CALLS AND OTHER MATERIALS

- Recording of the first quarterly call alongside the companion deck

- Recording of the second quarterly call alongside the companion deck

- Companion deck of the third quarterly call (no recording available due to technical problems).