STIP Retroactive Analysis – Spot DEX TVL

The research report below is also available in document format here.

TL;DR

In H2 2023, Arbitrum launched the Short-Term Incentive Program (STIP) by distributing millions of ARB tokens to various protocols to drive user engagement. This report focuses on how the STIP impacted TVL in the spot DEX vertical, specifically examining the performance of Balancer, Camelot, Ramses, Trader Joe and WOOFi. By employing the Synthetic Control (SC) causal inference method to create a “synthetic” control group, we aimed to isolate the STIP’s effect from broader market trends. For each protocol, the analysis focuses on the median TVL in the period from the first day of the STIP to two weeks after the STIP ended, so that at least two weeks of post-program persistence are included.

Our analysis yielded varied results: 62.5% of WOOFi’s median TVL in the analyzed period was attributed to the STIP, compared with 37.1% for Camelot. Balancer and Trader Joe also benefited, both with around 12% of their TVL linked to STIP incentives. During the STIP period and the two weeks following its conclusion, the added TVL per dollar spent on incentives was approximately $12 for both Balancer and Camelot, $7 for WOOFi ($25 when considering only the incentives given directly to LPs), and $2 for Trader Joe. Our model showed no statistically significant impact for Ramses.

Spot DEXs on Arbitrum focus on enhancing liquidity for native and multi-chain projects, helping them bootstrap and build liquidity sustainably. Protocols achieve this through liquidity incentives, using either activity-based formulas or more traditional methods for allocation. Our analysis showed that different incentive distribution methods had a similar impact on TVL across protocols like Balancer and Camelot. However, Trader Joe’s strategy was less effective due to shorter incentivization periods, as also identified by the team.

WOOFi’s results varied depending on whether we considered total incentives or only those for liquidity providers. While it underperformed Camelot and Balancer in added TVL per dollar spent, it excelled when only liquidity-specific incentives are counted. Additionally, incentives for other activities, such as swaps, may indirectly boost TVL. Note that a price manipulation attack on March 5th may have undermined user confidence in WOOFi.

Ramses showed a substantial increase in TVL following the STIP, suggesting a possible delayed positive impact despite a lack of immediate statistical significance.

Our methodology and detailed results underscore the complexities of measuring such interventions in a volatile market, stressing the importance of comparative analysis to understand the true impact of incentive programs like STIP.

Context and Goals

In H2 2023, Arbitrum initiated a significant undertaking by distributing millions of ARB tokens to protocols as part of the Short-Term Incentive Program (STIP), aiming to spur user engagement. This program allocated varying amounts to diverse protocols across different verticals. Our objective is to gauge how effectively these recipient protocols leveraged their STIP allocations to boost the usage of their products. The challenge lies in isolating the impact of the STIP from the many other factors at play, including broader market conditions.

This report pertains to the spot DEX vertical in particular. In this vertical, the STIP recipients were Balancer, Camelot, Ramses, Trader Joe and WOOFi. The following table summarizes the amount of ARB tokens received and when they were used by each protocol.

To assess the impact of the STIP on spot DEX protocols, TVL is a crucial metric. While trading volume is also important for evaluating a DEX’s performance, the primary focus of these AMMs within the STIP was on enhancing and sustaining liquidity for both new and established projects in the ecosystem. The goal was to ensure that liquidity was readily available to improve efficiency and reduce slippage. Most of these projects used all the incentives allocated to them to attract liquidity providers, making TVL the most directly impacted metric. However, a separate analysis would be valuable to understand how this increase in TVL translated into further activities, such as trading volume on the DEX, for a more comprehensive understanding. Throughout the report, the 7-day moving average (MA) TVL was used, so any mention of TVL should be understood as the 7-day MA TVL.
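As a minimal sketch of the smoothing step, the function below computes a 7-day moving average over a daily TVL series. It assumes a trailing window (each value averages that day and the six days before it) and leaves the first six days undefined; the report does not say whether the window was trailing or centered, and the `tvl_7d_ma` name and sample values are hypothetical.

```python
from typing import Optional, Sequence


def tvl_7d_ma(daily_tvl: Sequence[float], window: int = 7) -> list[Optional[float]]:
    """Trailing moving average of daily TVL; None until a full window exists."""
    averages: list[Optional[float]] = []
    running_sum = 0.0
    for i, value in enumerate(daily_tvl):
        running_sum += value
        if i >= window:
            running_sum -= daily_tvl[i - window]  # drop the day that left the window
        averages.append(running_sum / window if i >= window - 1 else None)
    return averages


# Hypothetical daily TVL values in $M
smoothed = tvl_7d_ma([100, 102, 98, 105, 110, 95, 100, 107])
```

The running sum keeps each step O(1); with pandas, `Series.rolling(7).mean()` gives the same trailing average.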

We used a causal inference method called Synthetic Control (SC) to analyze our data. This technique helps us understand the effects of a specific event by comparing our variable of interest to a “synthetic” control group. Here’s a short breakdown:

- Purpose: SC estimates the impact of a particular event or intervention using data over time.

- How It Works: It creates a fake control group by combining data from similar but unaffected groups. This synthetic group mirrors the affected group before the event.

- Why It Matters: By comparing the real outcomes with this synthetic control, we can see the isolated effect of the event.

In our analysis, we use data from other protocols to account for market trends. This way, we can better understand how protocols react to changes, like the implementation of the STIP, by comparing their performance against these market-influenced synthetic controls. The results pertain to the period from the start of each protocol’s use of the STIP until two weeks after the STIP had ended.

Results

Balancer

Balancer launched its v1 in early 2020 and has been on Arbitrum since Q3 2021. Balancer’s KPIs included TVL, daily protocol fees and volume, all of which increased during the STIP. All of the received funds were allocated to liquidity providers through an incentive system developed for the STIP. The grant aimed to boost economic activity on Arbitrum by creating an autonomous mechanism for distributing ARB incentives to enhance Balancer liquidity across the network. This incentive program distributed 41,142.65 ARB per week based on veBAL voting for pools on Arbitrum. The vote weight per pool was multiplied by a boost factor, and ARB was then distributed to all pools based on their relative boosted weight. Pools were capped at 10% of the total weekly ARB, except for ETH-based LSD stableswap pools, which were capped at 20%.
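To make the mechanism concrete, here is a minimal sketch of a capped, boost-weighted weekly split. The 41,142.65 ARB budget and the 10%/20% caps come from the text above. The pool inputs are hypothetical, and re-splitting the excess from capped pools among the remaining pools is an assumption: the text does not say how, or whether, that excess was reallocated.

```python
WEEKLY_ARB = 41_142.65  # weekly budget stated in the report


def allocate_weekly(pools: dict[str, tuple[float, float, float]],
                    budget: float = WEEKLY_ARB) -> dict[str, float]:
    """Split a weekly ARB budget by boosted vote weight, respecting per-pool caps.

    pools maps a pool name to (vote_weight, boost_factor, cap_share), where
    cap_share is 0.10 for most pools and 0.20 for ETH-based LSD stableswap pools.
    Capped excess is re-split among uncapped pools (an assumption).
    """
    boosted = {name: vote * boost for name, (vote, boost, _) in pools.items()}
    caps = {name: cap * budget for name, (_, _, cap) in pools.items()}
    alloc: dict[str, float] = {}
    remaining = budget
    active = set(pools)
    while active:
        total = sum(boosted[name] for name in active)
        if total == 0:
            break  # no remaining weight to split against
        share = {name: remaining * boosted[name] / total for name in active}
        over_cap = {name for name in active if share[name] > caps[name]}
        if not over_cap:
            alloc.update(share)
            break
        for name in over_cap:
            alloc[name] = caps[name]  # pin capped pools at their cap
            remaining -= caps[name]
        active -= over_cap
    return alloc
```

In this sketch, if every pool hits its cap (for example, fewer than ten pools all capped at 10%), part of the budget stays undistributed. How Balancer handled that case is not described above.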

Balancer’s TVL increased from approximately $111M on November 2, 2023, when the STIP started, to a peak of $193M in March 2024. By the end of the STIP on March 22, the TVL was at $156M. One week later, on March 29, the TVL had decreased to $145M. Two weeks later, on April 5, it was $135M, and by May 5, it had further dropped to $90M.

Overall, there was a 41% increase in TVL when comparing the periods before and after the STIP. Comparing the start of the STIP to one week after its end, there was a 31% increase, and a 21% increase two weeks after the STIP ended.

The first chart below compares Balancer’s TVL with the modeled synthetic control. The second chart highlights the impact of the STIP by showing the difference between Balancer’s TVL and the synthetic control. For more details, see the Methodology section.

The median impact of the STIP on Balancer’s TVL, from its start on November 2, 2023, to its end on March 22, 2024, was $17.3M. Including the TVL two weeks after the STIP concluded, the median impact was $17.6M. Balancer received a total of 1.2M ARB, valued at approximately $1.44M (at $1.20 per ARB). This indicates that the STIP generated an average of $12.27 in TVL per dollar spent during its duration and the following two weeks. These results are statistically significant at the 90% confidence level.

Camelot

Camelot’s KPIs for the STIP included TVL, volume, and fees. Their incentive allocation strategy prioritized LP returns by incentivizing more than 75 different pools from various Arbitrum protocols. The team focused on promoting liquidity in a diverse mix of pools, including Arbitrum OGs, smaller protocols, newcomers, and established projects from other ecosystems. The ARB distribution followed the same logic used for their own GRAIL emissions, aimed at ensuring a consistent and strategic approach to incentivization.

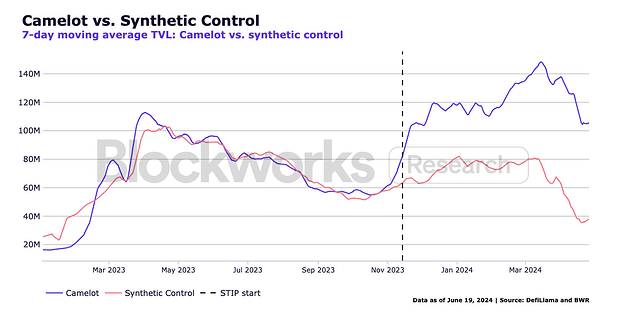

Camelot’s TVL increased from approximately $82M on November 14, 2023, when the STIP began, to a peak of $150M in March 2024. By the end of the STIP on March 29, the TVL was $136M. One week later, on April 5, it was $131.6M. Two weeks after the STIP ended, on April 12, the TVL stood at $126M, and one month later, it was $105.5M.

Overall, there was a 66% increase in TVL comparing the periods before and after the STIP. Comparing the start of the STIP to one week after its end, there was a 60% increase, and a 53% increase two weeks after the STIP concluded.

The median impact of the STIP on Camelot’s TVL from its start on November 14, 2023, to its end on March 29, 2024, was $42.7M. When including the TVL two weeks after the STIP ended, the median impact increased to $44M. Camelot received a total of 3.09M ARB, of which 65,450 ARB were returned, resulting in a final total of 3,024,550 ARB, valued at approximately $3.63M (at $1.20 per ARB). This indicates that the STIP generated an average of $12.12 in TVL per dollar spent during the STIP and the two weeks following it. These results are statistically significant at the 95% confidence level.

Ramses

Ramses distributed its total incentives to liquidity providers across various pools using a two-part strategy. Fifty percent of the incentives were allocated based on fees generated in the previous epoch, while the remaining fifty percent were distributed at the team’s discretion, targeting protocols that needed bootstrapping and lacked sufficient liquidity support from other sources. This approach aimed to strengthen the overall Arbitrum ecosystem by balancing support for established and emerging projects.
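The two-part rule can be sketched as follows. This is only an illustration: the epoch budget, pool names and fee figures are hypothetical. The discretionary half is modeled as an arbitrary weight per pool, because the team’s actual selection was a qualitative judgment, not a formula.

```python
def split_epoch_incentives(budget: float,
                           prev_epoch_fees: dict[str, float],
                           discretionary_weights: dict[str, float]) -> dict[str, float]:
    """Half of the budget pro rata to last epoch's fees, half by discretionary weights."""
    half = budget / 2
    fee_total = sum(prev_epoch_fees.values())
    weight_total = sum(discretionary_weights.values())
    alloc: dict[str, float] = {}
    for pool, fees in prev_epoch_fees.items():
        alloc[pool] = alloc.get(pool, 0.0) + half * fees / fee_total
    for pool, weight in discretionary_weights.items():
        # Hypothetical stand-in for the team's qualitative selection
        alloc[pool] = alloc.get(pool, 0.0) + half * weight / weight_total
    return alloc


# Hypothetical epoch: 1,000 ARB, two fee-earning pools, two bootstrap targets
print(split_epoch_incentives(1_000, {"A": 300, "B": 100}, {"B": 1, "C": 1}))
# {'A': 375.0, 'B': 375.0, 'C': 250.0}
```

A pool can receive incentives from both halves (pool B above), and pool C receives incentives without having earned fees in the previous epoch, which matches the bootstrapping intent described in the text.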

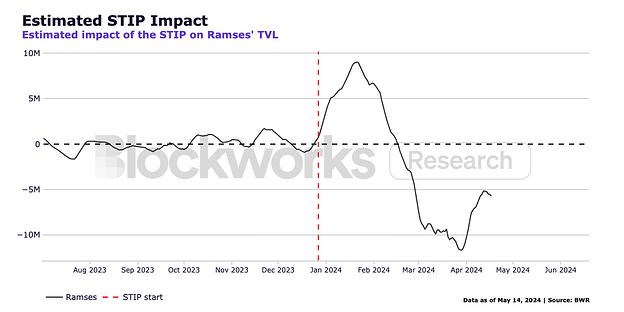

Ramses’ TVL increased from approximately $8.2M on December 27, 2023, when the STIP began, to over $25M at its peak in June 2024. When the STIP ended on March 20, 2024, the TVL was $10.7M. One week later, on March 27, the TVL had dropped to $8.1M. However, two weeks after the STIP concluded, on April 3, the TVL had risen to $10.9M, and one month later, on April 20, it reached $12.5M. This represents a 30% increase in TVL from the period before the STIP to the period after it. Comparing the start of the STIP with the TVL one week after its end shows virtually no change, while there was a 33% increase two weeks after the STIP ended.

The STIP’s impact on Ramses was not statistically significant, which means we cannot conclude that the observed results were caused by the STIP rather than occurring by chance. As a result, no clear conclusions about the magnitude of the STIP’s effect on Ramses can be drawn with this analysis.

Trader Joe

In the STIP Addendum, Trader Joe explained that while the main goal of the grant was to incentivize long-tail assets (builders) within the Arbitrum ecosystem, it quickly became a cat-and-mouse game due to intense yield competition and high demand for liquidity. The protocol found that spreading efforts across a wide range of protocols and using a rotating incentive program with concentrated rewards over short periods was not the most effective approach for allocating incentives.

Trader Joe’s TVL increased from approximately $27.5M on November 4, 2023, when the STIP started, to nearly $50M at its peak in March 2024. By the end of the STIP on March 29, 2024, TVL had reached $42.4M. One week later, on April 5, TVL was $41.4M, and two weeks after the STIP ended, on April 12, it had decreased to $37.5M. One month after the STIP concluded, on April 29, the TVL was $28.3M. This represents a 54% increase in TVL from the period before the STIP to the period after it. Compared to the start of the STIP, TVL showed a 51% increase one week after the STIP ended and a 37% increase two weeks after the STIP concluded.

The median impact of the STIP on Trader Joe’s TVL from the start date on November 4, 2023, to the end date on March 29, 2024, was $3.7M. When also accounting for the TVL two weeks after the STIP ended, the median impact increased to $3.9M. Trader Joe received a total of 1.51M ARB, which was valued at approximately $1.81M (based on a $1.20 per ARB rate). This implies that the STIP generated an average of $2.31 in TVL for every dollar spent on incentives during the STIP period and the subsequent two weeks. These results are significant at an 85% confidence level.

WOOFi

WOOFi is the DeFi arm of the WOO ecosystem, functioning as a DEX that bridges the liquidity of the WOO X centralized exchange on-chain. Unlike the other protocols in this analysis, WOOFi did not allocate the total received ARB directly to liquidity incentives. WOOFi’s KPIs focused on several metrics: WOOFi Earn TVL, WOOFi Stake TVL, monthly swap volume, monthly perps volume, and the number of Arbitrum inbound cross-chain swaps. According to the Grant Information here and the STIP Addendum here, the ARB allocation was divided as follows: 30% to WOOFi Earn, 20% to WOOFi Pro, 15% to Arbitrum-inbound cross-chain swaps, 15% to WOOFi Stake, 10% to WOOFi Swap, and 10% to Quests & Cross-Protocol Integration. Additionally, approximately 65k ARB remained unused and was returned to the DAO.

To ensure consistency with the other analyzed protocols, this report focuses exclusively on WOOFi Earn’s TVL. Therefore, whenever WOOFi’s TVL is mentioned, it specifically refers to WOOFi Earn’s TVL. It is relevant to note that WOOFi Earn functions primarily as a yield aggregator product. While analyzing WOOFi Earn in isolation might also warrant a comparison to other yield aggregator protocols rather than spot DEXs, the core of WOOFi’s business is its spot DEX. The Earn feature was designed to support liquidity for on-chain swaps, which is why it has been included in this analysis.

WOOFi’s TVL grew from approximately $4.5M on December 26, 2023, when the STIP began, to around $15.4M at its peak in February 2024. By the end of the STIP on March 29, 2024, the TVL was $12.6M. One week later, on April 5, the TVL was $12.3M, and two weeks after the STIP ended, on April 12, it had dropped to $9.5M. One month after the STIP concluded, on April 29, the TVL had decreased to $5.9M.

This represents a 181% increase in TVL from before the STIP to after it. Comparing the start of the STIP to the TVL one week after its end shows a 175% increase, while two weeks after the STIP ended, the increase was 111%.

The median impact of the STIP on WOOFi’s TVL from its start on December 26, 2023, to its end on March 29, 2024, was $8.8M. When accounting for the TVL two weeks after the STIP concluded, the median impact was $8.3M. WOOFi received a total of 1M ARB, of which approximately 65,000 ARB remained unused, resulting in 935,000 ARB valued at about $1.12M (at $1.20 per ARB). This means the STIP generated an average of $7.4 in TVL for every dollar spent on ARB incentives during the program and the following two weeks. These results are statistically significant at the 90% confidence level.
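The added-TVL-per-dollar figures are the median impact divided by the USD value of the ARB spent, at the report’s $1.20 rate. The sketch below reproduces the Camelot and WOOFi figures from the numbers quoted above. For the LP-only WOOFi figure, applying the 30% WOOFi Earn share to the roughly 935,000 ARB actually used is an assumption about how the ~$25 figure in the TL;DR was derived. It reproduces that figure, but the text does not state the calculation.

```python
ARB_PRICE_USD = 1.20  # ARB price used throughout this report


def tvl_per_dollar(median_impact_usd: float, arb_spent: float,
                   arb_price: float = ARB_PRICE_USD) -> float:
    """Median added TVL divided by the USD value of the ARB spent."""
    return median_impact_usd / (arb_spent * arb_price)


camelot = tvl_per_dollar(44.0e6, 3_090_000 - 65_450)   # 3,024,550 ARB spent
woofi_arb_spent = 1_000_000 - 65_000                  # ~935,000 ARB spent
woofi_total = tvl_per_dollar(8.3e6, woofi_arb_spent)
# Assumption: "LP-only" means the 30% WOOFi Earn share of the ARB actually spent
woofi_lp_only = tvl_per_dollar(8.3e6, 0.30 * woofi_arb_spent)
print(f"{camelot:.2f} {woofi_total:.2f} {woofi_lp_only:.2f}")  # 12.12 7.40 24.66
```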

Main Takeaways

Our analysis produced interesting results for the impact of the STIP on Balancer, Camelot, Trader Joe and WOOFi. The impact on Ramses was not statistically significant, which means that we couldn’t confidently say that the STIP caused a noticeable change in the protocol’s TVL; the variations we see could simply be due to market behavior. A further explanation of how this and all other results were derived can be found in the Methodology section.

Spot DEXs serve a fundamentally different purpose than other protocols such as perp DEXs, where successful incentive campaigns primarily focus on boosting trading volume and fees. For a spot DEX on Arbitrum, the goal is to enhance and support the liquidity of both native Arbitrum projects and multi-chain projects aligned with Arbitrum by increasing liquidity for their tokens. This approach helps new, growing, and established projects on Arbitrum bootstrap and build liquidity in a sustainable and capital-efficient manner.

The protocols we analyzed aimed to achieve this goal mainly by offering liquidity incentives to their providers. Some protocols developed activity-based formulas to determine a relative allocation between pools, while others employed more traditional methods, such as evaluating allocations on a weekly or biweekly basis and fixing them for the next period.

Our analysis showed that different methods of distributing incentives across various pools did not seem to substantially impact the effectiveness of the STIP. The ability to generate TVL appeared similar across protocols. For instance, both Balancer and Camelot demonstrated comparable added TVL per dollar of ARB spent. However, Trader Joe’s strategy was deemed less effective due to the short duration of incentivization in specific pools, as also identified by the team. Protocols can learn from this experience when designing future incentive programs.

WOOFi presents a different case with results that vary substantially depending on whether we consider the total incentives or just those allocated directly to WOOFi’s liquidity providers. Compared to Camelot and Balancer, WOOFi underperforms in terms of added TVL per dollar spent on incentives. However, it excels when focusing solely on liquidity incentives. This disparity makes direct comparisons challenging, but it suggests that incentives for other activities on the platform may indirectly boost TVL and could be beneficial. For example, offering incentives for swaps can increase trading volume, which in turn raises yields in those pools and attracts more TVL. It’s also worth noting that on March 5th, WOOFi experienced a price manipulation attack resulting in an $8.75M loss from its synthetic proactive market making (sPMM). Although WOOFi Earn was not directly affected, this incident likely shook user confidence in the protocol for a period following the attack.

The exact impact on Ramses couldn’t be assessed due to a lack of statistical significance. However, it’s noteworthy that TVL increased substantially in the months following the STIP. This may suggest that, while the immediate impact of the STIP couldn’t be determined during the analysis period, it may have had a delayed positive effect.

Lastly, it’s essential to acknowledge the limitations inherent in our models, which are only as reliable as the data available. Numerous factors can drastically influence outcomes, making it challenging to isolate the effects of a single intervention. This is especially true in the crypto industry, where such external factors tend to be unusually strong.

Given these complexities, our results should be interpreted comparatively rather than absolutely. The SC methodology was uniformly applied across all protocols, allowing us to gauge the relative efficacy of the STIP allocation.

Appendix

Methodology

TLDR: We employed an analytical approach known as the Synthetic Control (SC) method. The SC method is a statistical technique utilized to estimate causal effects resulting from binary treatments within observational panel (longitudinal) data. Regarded as a groundbreaking innovation in policy evaluation, this method has garnered significant attention in multiple fields. At its core, the SC method creates an artificial control group by aggregating untreated units in a manner that replicates the characteristics of the treated units before the intervention (treatment). This synthetic control serves as the counterfactual for a treatment unit, with the treatment effect estimate being the disparity between the observed outcome in the post-treatment period and that of the synthetic control. In the context of our analysis, this model incorporates market dynamics by leveraging data from other protocols (untreated units). Thus, changes in market conditions are expected to manifest in the metrics of other protocols, thereby inherently accounting for these external trends and allowing us to explore whether the reactions of the protocols in the analysis differ post-STIP implementation.

To achieve the described goals, we turned to causal inference. Knowing that “association is not causation”, the study of causal inference centers on techniques that try to determine when association can be interpreted as causation. The classic notation of causality analysis revolves around a certain treatment $D$, which doesn’t need to be related to the medical field, but rather is a generalized term used to denote an intervention whose effect we want to study. We typically consider $D_i$ the treatment intake for unit $i$, which is 1 if unit $i$ received the treatment and 0 otherwise. There is also $Y_i$, the observed outcome variable for unit $i$. This is our variable of interest, i.e., we want to understand what the influence of the treatment on this outcome was. The fundamental problem of causal inference is that one can never observe the same unit with and without treatment, so we express this in terms of potential outcomes. We are interested in what would have happened had some treatment been taken. It is common to call the potential outcome that happened the factual, and the one that didn’t happen the counterfactual. We will use the following notation:

- $Y_{0i}$: the potential outcome for unit $i$ without treatment

- $Y_{1i}$: the potential outcome for the same unit $i$ with the treatment

With these potential outcomes, we define the individual treatment effect to be $\tau_i = Y_{1i} - Y_{0i}$. Because of the fundamental problem of causal inference, we will never actually know the individual treatment effect, because only one of the potential outcomes is observed.

One technique used to tackle this is Difference-in-Differences (or diff-in-diff). It is commonly used to analyze the effect of macro interventions, such as the effect of immigration on unemployment or of law changes on crime rates, but also the impact of marketing campaigns on user engagement. There is always a period before and after the intervention, and the goal is to separate the impact of the intervention from a general trend. Let $Y_D(T)$ be the potential outcome for treatment $D$ in period $T$ (0 for pre-intervention and 1 for post-intervention). Ideally, we would be able to observe the counterfactual and estimate the effect of an intervention as:

$$E[Y_1(1) - Y_0(1) \mid D=1],$$

the causal effect being the outcome in the post-intervention period in the case of treatment minus the outcome in the same period in the case of no treatment. Naturally, $E[Y_0(1) \mid D=1]$ is counterfactual, so it can’t be measured. If we take a before-and-after comparison, $E[Y(1) \mid D=1] - E[Y(0) \mid D=1]$, we can’t really say anything about the effect of the intervention, because there could be other external trends affecting that outcome.

The idea of diff-in-diff is to compare the treated group with an untreated group that didn’t get the intervention, replacing the missing counterfactual as follows:

$$E[Y_0(1) \mid D=1] = E[Y_0(0) \mid D=1] + \big(E[Y_0(1) \mid D=0] - E[Y_0(0) \mid D=0]\big).$$

We take the treated unit before the intervention and add a trend component to it, which is estimated using the control, $E[Y_0(1) \mid D=0] - E[Y_0(0) \mid D=0]$. We are basically saying that the treated unit after the intervention, had it not been treated, would look like the treated unit before the treatment plus a growth factor equal to the growth of the control.
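A minimal numeric sketch of the diff-in-diff idea described above: the counterfactual is the treated group’s pre-period level plus the control group’s trend. The group means are made-up numbers, not data from this analysis.

```python
def diff_in_diff(treated_pre: float, treated_post: float,
                 control_pre: float, control_post: float) -> float:
    """Treated post-period outcome minus its diff-in-diff counterfactual."""
    # Counterfactual: treated pre-period level plus the control group's trend
    counterfactual = treated_pre + (control_post - control_pre)
    return treated_post - counterfactual


# Made-up group means: treated 100 -> 160, control 200 -> 230
print(diff_in_diff(100, 160, 200, 230))  # 30
```

A naive before-and-after comparison would attribute the full increase of 60 to the intervention. Subtracting the control’s trend of 30 leaves an estimated effect of 30, which is only valid if the two groups would otherwise have followed the same trend.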

An important thing to note here is that this method assumes that the trends in the treatment and control are the same. If the growth trend from the treated unit is different from the trend of the control unit, diff-in-diff will be biased. So, instead of trying to find a single untreated unit that is very similar to the treated, we can forge our own as a combination of multiple untreated units, creating a synthetic control.

That is the intuitive idea behind using synthetic control for causal inference. Assume we have $J+1$ units and unit 1 is affected by an intervention. Units $j = 2, \dots, J+1$ are a collection of untreated units that we will refer to as the “donor pool”. Our data spans $T$ time periods, with $T_0$ periods before the intervention. For each unit $j$ and each time $t$, we observe the outcome $Y_{jt}$. We define $Y^N_{jt}$ as the potential outcome without intervention and $Y^I_{jt}$ as the potential outcome with intervention. Then, the effect for the treated unit $j = 1$ at time $t$, for $t > T_0$, is defined as

$$\tau_{1t} = Y^I_{1t} - Y^N_{1t}.$$

Here $Y^I_{1t}$ is factual but $Y^N_{1t}$ is not. The challenge lies in estimating $Y^N_{1t}$.

Source: 15 - Synthetic Control — Causal Inference for the Brave and True

Since the treatment effect is defined for each period, it doesn’t need to be instantaneous; it can accumulate or dissipate. The problem of estimating the treatment effect boils down to the problem of estimating what would have happened to the outcome of the treated unit, had it not been treated.

The most straightforward approach is to consider that a combination of units in the donor pool may approximate the characteristics of the treated unit better than any untreated unit alone. So we define the synthetic control as a weighted average of the units in the donor pool. Given the weights $\mathbf{W} = (w_2, \dots, w_{J+1})$, the synthetic control estimate of $Y^N_{1t}$ is

$$\hat{Y}^N_{1t} = \sum_{j=2}^{J+1} w_j Y_{jt}.$$

We can estimate the optimal weights with OLS, as in any typical linear regression, by minimizing the squared distance between the weighted average of the units in the donor pool and the treated unit over the pre-intervention period. This creates a “fake” unit that resembles the treated unit before the intervention, so we can see how it would behave in the post-intervention period.

In the context of our analysis, this means that we can include all other spot DEX protocols that did not receive the STIP in our donor pool and estimate a “fake”, synthetic, control spot DEX protocol that follows the trend of any particular one we want to study in the period before receiving the STIP. As mentioned before, the metric of interest chosen for this analysis was TVL and, in particular, we calculated the 7-day moving average to smooth the data. Then we can compare the behavior of our synthetic control with the factual and estimate the impact of the STIP by taking the difference. We are essentially comparing what would have happened, had the protocol not received the STIP with what actually happened.

However, sometimes regression leads to extrapolation, i.e., values outside the range of our data that may not make sense in our context. This happened when estimating our synthetic control, so we constrained the model to do only interpolation. This means we restrict the weights to be non-negative and sum to one, so that the synthetic control is a convex combination of the units in the donor pool. Hence, the treated unit is projected onto the convex hull defined by the untreated units. This means that there probably won’t be a perfect match for the treated unit in the pre-intervention period and that the weights can be sparse, as the wall of the convex hull will sometimes be defined by only a few units. This is desirable because we don’t want to overfit the data. It is understood that we will never be able to know with certainty what would have happened without the intervention, only that, under the stated assumptions, we can draw statistical conclusions.

Formalizing interpolation, the synthetic control is still defined as $\hat{Y}^N_{1t} = \sum_{j=2}^{J+1} w_j Y_{jt}$. But now we use the weights $\mathbf{W}$ that minimize the squared distance between the weighted average of the units in the donor pool and the treated unit over the pre-intervention period,

$$\min_{\mathbf{W}} \sum_{t=1}^{T_0} \Big(Y_{1t} - \sum_{j=2}^{J+1} w_j Y_{jt}\Big)^2,$$

subject to the restriction that $w_2, \dots, w_{J+1}$ are non-negative and sum to one.

We get the optimal weights using quadratic programming optimization with the described constraints on the pre-STIP period and then use these weights to calculate the synthetic control for the total duration of time we are interested in. We initialized the optimization for each analysis with different starting weight vectors to avoid introducing bias in the model and getting stuck in local minima. We selected the one that minimized the square difference in the pre-intervention period.
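The constrained fit can be sketched with NumPy alone. To keep dependencies light, this sketch swaps in projected gradient descent for a quadratic-programming solver. Because the objective is a convex quadratic over the simplex, both approaches target the same minimum, so this sketch uses a single equal-weights start. The function names and the synthetic test data are hypothetical, and this is not the exact code used for the analysis.

```python
import numpy as np


def project_to_simplex(v: np.ndarray) -> np.ndarray:
    """Euclidean projection onto {w : w >= 0, sum(w) = 1} (sort-based algorithm)."""
    u = np.sort(v)[::-1]
    cssv = np.cumsum(u) - 1.0
    idx = np.arange(1, v.size + 1)
    rho = idx[u - cssv / idx > 0][-1]  # largest index with a positive entry
    theta = cssv[rho - 1] / rho
    return np.maximum(v - theta, 0.0)


def synthetic_control_weights(y_pre: np.ndarray, donors_pre: np.ndarray,
                              n_iter: int = 20_000, tol: float = 1e-12) -> np.ndarray:
    """Minimize ||y_pre - donors_pre @ w||^2 over the simplex by projected gradient.

    y_pre: (T0,) treated-unit outcome; donors_pre: (T0, J) donor outcomes.
    """
    n_donors = donors_pre.shape[1]
    w = np.full(n_donors, 1.0 / n_donors)  # start from equal weights
    # Step size 1/L, where L = 2 * (largest singular value)^2 bounds the gradient's Lipschitz constant
    step = 1.0 / (2.0 * np.linalg.norm(donors_pre, 2) ** 2)
    for _ in range(n_iter):
        grad = 2.0 * donors_pre.T @ (donors_pre @ w - y_pre)
        w_new = project_to_simplex(w - step * grad)
        if np.max(np.abs(w_new - w)) < tol:
            return w_new
        w = w_new
    return w
```

In the analysis setting, `y_pre` would be a protocol’s pre-STIP 7-day MA TVL and `donors_pre` the same series for the donor protocols. The fitted weights are then applied to the donors’ full series (for example, a hypothetical `donors_full @ w`) to obtain the synthetic control over the whole window.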

As an example, below is the resulting chart for Camelot, showing the factual TVL observed in Camelot and the synthetic control.

With the synthetic control, we can then estimate the effect of the STIP as the gap between the factual protocol TVL and the synthetic control, $\hat{\tau}_{1t} = Y^I_{1t} - \hat{Y}^N_{1t}$.
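The headline figures in the Results section are medians of this gap over each protocol’s analysis window, from the STIP start to two weeks after its end. A minimal sketch follows. It assumes the factual and synthetic TVL are aligned daily series and that the window is given as index positions. The function name and sample numbers are hypothetical.

```python
import statistics
from typing import Sequence


def median_impact(factual: Sequence[float], synthetic: Sequence[float],
                  start: int, end: int) -> float:
    """Median of the gap (factual - synthetic) over positions start..end-1."""
    gaps = [f - s for f, s in zip(factual[start:end], synthetic[start:end])]
    return statistics.median(gaps)


# Hypothetical aligned 7-day MA TVL series ($M); STIP window = positions 2..4
print(median_impact([10, 11, 15, 16, 18], [10, 11, 12, 12, 13], start=2, end=5))  # 4
```

A median is less sensitive than a mean to a few days with unusually large or small gaps. This matters for TVL, which can jump on individual days.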

To understand whether the result is statistically significant and not just an artifact of randomness, we use the idea behind Fisher’s Exact Test. We permute the treated and control units exhaustively: for each unit, we pretend it is the treated one while the others serve as the control. We create a synthetic control and effect estimate for each protocol, pretending that the STIP was given to that protocol, to calculate the estimated impact of a treatment that didn’t happen. If the impact in the protocol of interest is sufficiently larger than those of the fake treatments (“placebos”), we can say our result is statistically significant and there is indeed an observable impact of the STIP on the protocol’s TVL. The idea is that if there was no STIP in the other protocols and we used the same model to pretend that there was, we wouldn’t see any impact.
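The ranking step of the placebo test can be sketched as a one-sided permutation p-value. The text does not state which statistic is compared across placebos. A common choice in the synthetic-control literature is the ratio of post- to pre-intervention RMSE of the gap, but this sketch assumes only that the same statistic has already been computed, with the same model, for every unit. The function name and values are hypothetical.

```python
def placebo_p_value(effects: dict[str, float], treated: str) -> float:
    """One-sided permutation p-value: share of units with an effect >= the treated unit's.

    effects maps every unit (the treated unit and all placebos) to the same
    test statistic, computed with the same synthetic-control fit.
    """
    treated_effect = effects[treated]
    at_least_as_large = sum(1 for e in effects.values() if e >= treated_effect)
    return at_least_as_large / len(effects)


# Hypothetical statistics: the treated protocol ranks first among 10 units
stats = {"treated_dex": 9.0, **{f"donor_{i}": float(i) for i in range(9)}}
print(placebo_p_value(stats, "treated_dex"))  # 0.1
```

With $N$ units in total, the smallest attainable p-value is $1/N$. A result at the 90% confidence level, for example, therefore requires the treated protocol to rank first among at least ten units.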

References

Hernán MA, Robins JM (2020). Causal Inference: What If. Boca Raton: Chapman & Hall/CRC.

Agrawal A. Causal inference with Synthetic Control using Python and SparseSC.

Causal Inference for the Brave and True, chapter 01: Introduction To Causality.