LTIPP Analysis

The below research report is also available in document format here.

The Arbitrum DAO established a Long Term Incentives Pilot Program (LTIPP) to test and ultimately develop an incentive framework. The program allocated roughly 30.65M ARB to protocols across the Arbitrum ecosystem, excluding previous STIP recipients. To date, a total of approximately 22.87M ARB has been distributed. Over 12 weeks, protocols distributed incentives to drive activity and growth. Part of the motivation was to assist protocols that did not receive STIP Round 1 incentives or completely missed the program. The outline for LTIPP can be found here.

Incentives are an important feature of growing ecosystems, fostering economic activity, and bootstrapping mindshare. Done correctly, incentives can be a powerful mechanism for driving adoption and alignment. Done incorrectly, incentives can be a waste of time and resources.

This retroactive analysis will provide a brief assessment of LTIPP. We collect data available here and here and review bi-weekly updates to analyze recipients, their strategies, and the impact of the incentives on high level growth metrics. In particular, we want to highlight outperformers and underperformers, and glean any best practices or lessons learned for protocols distributing ARB incentives in the future. This work is intended to be supplemental to already funded work (here and here). The overarching goal is to synthesize lessons learned that the DAO can reference as it begins thinking about future incentives programs–namely, the working group for incentives that is being actively discussed–especially as Timeboost introduces new conditions for trading and economic activity.

Summary

We evaluated the incentives strategies of protocols in the Lending, DEX, and Perps categories by analyzing key protocol level metrics. We derived a metric for each category and measured its growth from the period before LTIPP to the period during LTIPP to assess the effectiveness of each protocol’s incentive strategy. We then overlaid qualitative observations from applications and biweeklies. Below is a list of takeaways that the DAO can keep in mind for future incentives programs.

- LTIPP had a significant impact on DEX and Lending activity.

- LTIPP curbed a downward spiral in sequencer revenues.

- Protocols that formed partnerships during LTIPP generally saw better results.

- Effective marketing, frequent communication, and responsiveness to user feedback are key.

- For the DEX category, protocols that enhance LP experience (i.e. increase LP returns) outperformed.

- LTIPP did not have an impact on Arbitrum’s perps ecosystem, more broadly, and based on protocol specific results, incentives were largely unsustainable.

- Biweeklies suggest allocating more incentives to LPs rather than relying on referrals is a more effective strategy.

- Complex incentives that involve purchasing vesting ARB can result in additional friction and hurt results.

Results

Below is a table that shows the impact of incentives on protocol level metrics and global metrics. We also measured the increase in each metric per dollar spent on the category. For lending, DEXs, and perps, TVL market share grew by 15%, 17%, and -5%, respectively, and volume market share grew by 7%, 21%, and -11.38%, respectively. Finally, sequencer revenue market share grew by 6.54 percentage points (from 6.04% to 12.58%). While incentives had a positive impact on DEX, lending, and sequencer revenue metrics, they did not have a positive impact on perps.

Market Share Growth

Lending

Arbitrum’s market share in lending deposits amongst all chains has been fairly consistent since July 2023, and LTIPP was able to further boost deposits. The median market share rose significantly, from 2.63% in the month before LTIPP to 3.02% during LTIPP, effectively a 15% increase. LTIPP is estimated to have added $130M in TVL normalized by market share, which equates to $30.92 of added TVL (normalized by market share) per dollar spent. Part of this increase started a few days before LTIPP, so other factors may be introducing bias into the analysis; however, the growth was notably sustained throughout the duration of LTIPP.
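The report quotes market share changes both as a difference between two shares and as relative growth. A minimal sketch of the distinction, using the lending deposit medians quoted above (the function name `share_change` is hypothetical and not part of the original analysis):

```python
from typing import Tuple


def share_change(before_pct: float, during_pct: float) -> Tuple[float, float]:
    """Return (percentage-point change, relative change in %) between two market shares."""
    point_change = during_pct - before_pct
    relative_change = (during_pct / before_pct - 1.0) * 100.0
    return point_change, relative_change


# Median lending-deposit market share quoted in the text: 2.63% before, 3.02% during LTIPP.
points, relative = share_change(2.63, 3.02)
print(f"{points:.2f} percentage points, {relative:.1f}% relative growth")
```

With these inputs the relative growth rounds to the 15% cited in the text, while the absolute change is under half a percentage point.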

Arbitrum’s market share in borrow TVL was consistently larger than its market share in lending TVL, which may indicate that users on Arbitrum were more inclined to actively lend and borrow rather than only lend idle capital. Utilization had been slightly decreasing until LTIPP, after which it seems to have stabilized. Borrow market share grew 7% during LTIPP, from 2.65% to 2.84%. LTIPP is estimated to have added $51M in borrow TVL normalized by market share, which equates to $12.08 of added borrows (normalized by market share) per dollar spent.

Amongst rollups, it is relevant to note that Arbitrum’s lending market share has been drastically decreasing since the end of 2023. While this could be a result of a number of factors, such as poor ARB market conditions, LTIPP has not been able to stop this trend, although it has perhaps slowed or stagnated it. There was a significant decrease of 2.62 percentage points in median TVL market share when comparing the period before LTIPP to the period during it, from 40.33% to 37.71%.

Borrow market share, however, had been trending similarly to deposit market share until March 2024 when it experienced a decrease to lower levels. Activity in this section has been picking up before and during the LTIPP, with a significant increase. In fact, rollups as a group have been increasing market share steadily since the start of 2023, and this has accelerated throughout 2024, which may explain why Arbitrum’s market share in global lending markets has slightly increased even if it has decreased amongst rollups.

DEXs

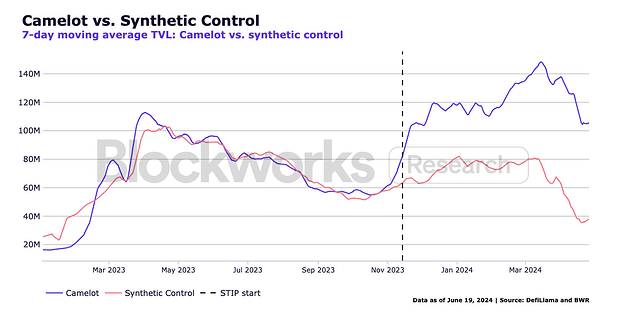

There was a significant increase in DEX TVL market share during LTIPP, both when comparing Arbitrum amongst all chains and amongst rollups. However, it is noticeable that Arbitrum’s market share amongst rollups has been drastically declining on a year-over-year basis. This is somewhat expected due to the growing competition in this sector, but given the degree, it is nonetheless worth raising this flag. LTIPP seems to have been instrumental in curbing this decrease and shifting the trend upwards, perhaps suggesting incentivizing DEX activity is a more positive return on investment. Arbitrum’s global DEX TVL market share grew from 3.28% to 3.84% during the program, a significant increase of 17%.

This reflects $102.6M of added TVL normalized by market share during this period, totalling $38.64 of TVL added per dollar spent on incentives. This calculation considers the 2,300,000 ARB spent amongst participants in this vertical, which at $1.13 per ARB (price at June 2nd) totals $2,599,000. The analysis uses data until September 24th (the last date of distribution for most protocols) even though some protocols have leftover ARB and have applied for LTIPP extensions.

One interesting aspect to note when looking at Arbitrum’s TVL and volume market share is that volume market share tends to be significantly larger than TVL market share. Considering first Arbitrum’s global DEX market share, there’s a sharp contrast between its median 3.84% market share in global TVL with its median 10.86% market share in global volume, during the period of the program. Amongst rollups, Arbitrum’s DEX TVL represents 26.2% of all rollups while its volume is 44.29% of all rollups. This can indicate that Arbitrum DEXs are operating in a relatively more efficient capacity than most other chains, likely a positive byproduct of 250ms blocktimes. A balance between these two metrics could indicate healthy activity, when capital deposited in DEXs is also actively traded.

Fluctuations in volume market share have increased during LTIPP, but there is still a significant increase noticeable when compared to the 30 days before the program. Global DEX volume market share belonging to Arbitrum has increased significantly from 8.99% to 10.86% (a 21% increase).

Perps

Arbitrum’s market share in the global perps market does not seem to have been affected by LTIPP, as can be seen from its continued slow decrease in both TVL and daily volume. In fact, there was a significant decrease in TVL market share when comparing the period before LTIPP to the period during it, from 21.53% to 20.44%, perhaps suggesting that incentivizing perps activity is more challenging or less effective (especially in comparison to DEX activity). This difference of 1.09 percentage points is a 5% decrease from the pre-LTIPP period.

The median daily volume market share before LTIPP was around 19.91%, and it decreased to 17.64% during the program. This difference of 2.27 percentage points is a significant decrease, representing an 11.38% decline from previous values.

Amongst other rollups, however, it seems like the LTIPP might have been able to slightly increase or at least stop the decrease of Arbitrum’s market share, which might indicate that rollup users are more influenced by incentives and more likely to switch over when enough benefits are present. In fact, Arbitrum’s perps volume market share amongst rollups decreased only by 1.48% and the corresponding TVL increased by 4% in the same period, compared to an 11.38% and 5% decrease, respectively, in market share amongst all chains.

Sequencer Revenue

Arbitrum’s sequencer revenue relative to other main L2s decreased sharply throughout 2023 and 2024. However, it appears Arbitrum’s incentive programs have had a noticeable impact on this metric: the decrease was curbed in November when STIP started, and there was even a sustained increase throughout its duration. After STIP, in March 2024, the decline continued and was again curbed by LTIPP in June 2024. Arbitrum’s market share of total L2 revenue was 6.04% in the month before LTIPP and grew to 12.58% during the program. Arbitrum’s total revenue during this period, from June 2nd to September 24th, was $4,678,700, of which $2,431,912 is added revenue. A total of 30.65M ARB was allocated to the program, and with an ARB price of $1.13 at the start, LTIPP generated $0.07 in added sequencer revenue per dollar spent on incentives.
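The per-dollar figure above follows from dividing the added revenue by the dollar value of the ARB allocation. A minimal sketch of that arithmetic, using only numbers quoted in the text (the function and constant names are hypothetical):

```python
ARB_PRICE_USD = 1.13  # ARB price at the start of LTIPP, as quoted in the text


def added_value_per_dollar(added_value_usd: float,
                           arb_allocated: float,
                           arb_price_usd: float = ARB_PRICE_USD) -> float:
    """Added value (USD) generated per USD of ARB incentives."""
    incentive_cost_usd = arb_allocated * arb_price_usd
    return added_value_usd / incentive_cost_usd


# Added sequencer revenue of $2,431,912 against the 30.65M ARB program allocation.
sequencer_ratio = added_value_per_dollar(2_431_912, 30_650_000)
print(f"${sequencer_ratio:.2f} of added sequencer revenue per dollar of incentives")
```

The same helper applies to the category-level figures, with the caveat that those use added TVL normalized by market share as the numerator and the ARB spent in that category as the denominator.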

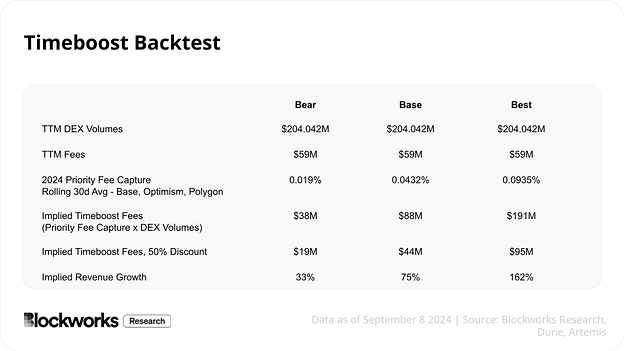

Notably, Arbitrum deploys a first-come-first-serve (FCFS) sequencing policy, while other L2s such as Optimism and Base, use a priority fee mechanism. This means Optimism chains capture more value from their activity because users and traders can pay the sequencer a higher fee to include their transactions, whereas on Arbitrum traders opportunistically spam the sequencer to get their transactions included, without having to pay higher fees. These dynamics are going to change once Timeboost goes live, and we expect Timeboost to generate additional annual revenue, ranging from $19M to $95M.

Lending Category

Lending had the highest number of LTIPP recipients (10), receiving a total of 3.717M ARB in aggregate (roughly 12.13% of total LTIPP incentives). Most lending protocols distributed incentives via airdrops and/or LP incentives; however, the activities and pools they chose to incentivize varied.

We looked at Aave, Alchemix, Compound Protocol, Gravita Protocol, Lumin Finance, Primex Finance, and Synonym Finance. We did not analyze Myso or Copra in our study because we were unable to pull the relevant metrics from their Dune dashboard per OBL’s guidelines or DefiLlama. While we reviewed Covenant, we excluded it from the results because it used LTIPP to bootstrap its protocol. We cannot compare performance for protocols that had no metrics at the beginning of the program: ARB incentives would have an outsized (infinite) measured impact on them, so their results would outperform the entire cohort, making for an unfair comparison.

Methodology

For each protocol, we collected borrow TVL and supply TVL data from before and throughout the duration of LTIPP, calculated the growth in both metrics from the 30 day median before LTIPP to the median throughout LTIPP, derived a borrow-adjusted TVL metric, and normalized this metric by total ARB claimed. The equations applied in this analysis are detailed below.

$$G_{adj} = G_s \times \left(\frac{R_d}{R_b}\right)^{\operatorname{sgn}(G_s)}$$

Where:

- $G_s$ is the supply growth,
- $R_d$ is the utilization ratio during the incentive period, $R_d = \frac{\text{borrow TVL}_d}{\text{supply TVL}_d}$,
- $R_b$ is the ratio before the incentive period, $R_b = \frac{\text{borrow TVL}_b}{\text{supply TVL}_b}$,
- $\operatorname{sgn}(G_s)$ is the signum function, which returns +1 if $G_s > 0$, -1 if $G_s < 0$, and 0 if $G_s = 0$.

$$G_{norm} = \frac{G_{adj}}{\log_{10}(A)}$$

Where $A$ is the amount of ARB claimed by the protocol.

While top-line TVL is an attractive vanity metric to optimize, the utilization of that capital is what ultimately determines the efficacy and success of lending protocols. We then ran a significance test on the normalized metric to deduce which protocols outperformed and underperformed, then reviewed their bi-weeklies to glean qualitative insights regarding what types of incentives strategies work and which ones do not.
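The borrow-adjusted supply growth described in the methodology can be sketched as follows. The functional form used here, supply growth scaled by the change in the borrow to supply ratio raised to the sign of the supply growth, is an assumption inferred from the per-protocol figures quoted later in this report, and the function names are hypothetical:

```python
def sign(x: float) -> int:
    """Signum: +1 for positive, -1 for negative, 0 for zero."""
    return (x > 0) - (x < 0)


def borrow_adjusted_growth(supply_growth_pct: float,
                           ratio_before: float,
                           ratio_during: float) -> float:
    """Supply growth (%) scaled by the change in the borrow-to-supply ratio.

    Assumed form: growth * (ratio_during / ratio_before) ** sgn(growth).
    A falling ratio shrinks positive growth and deepens negative growth.
    """
    ratio_change = ratio_during / ratio_before
    return supply_growth_pct * ratio_change ** sign(supply_growth_pct)


# Alchemix figures quoted in the report: 230.53% supply growth,
# borrow-to-supply ratio moving from 40.05% to 38.56%.
alchemix = borrow_adjusted_growth(230.53, 40.05, 38.56)
print(f"Alchemix borrow-adjusted supply growth: {alchemix:.1f}%")

# Hypothetical protocol with shrinking supply and a falling ratio:
# the penalty makes the negative growth larger in magnitude.
shrinking = borrow_adjusted_growth(-10.0, 0.50, 0.40)
print(f"Hypothetical shrinking protocol: {shrinking:.1f}%")
```

Because the inputs quoted in the text are rounded, the Alchemix result lands close to, but not exactly on, the 221.98% reported.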

Synonym Finance and Gravita fall in the lower 25% (Q1 - first quartile) of our data, growing normalized borrow-adjusted supply by 3.69% and -5.14%, respectively. This shows Gravita experienced negative growth. It’s important to note that, although Synonym Finance’s growth was modest, it was statistically significant according to the applied test. And in this context, the low and negative borrow-adjusted supply growth implies that these protocols saw a lower or decreased amount of usage over the period analyzed, potentially signaling that users are migrating away, incentives could have been allocated more effectively to boost utilization, or there are challenges impacting their usage such as market conditions or size.

Lumin Finance and Primex Finance fall in the higher 25% (Q3 - third quartile) of our data, indicating that they experienced significant positive utilization growth (745.66% and 443.92%, respectively). The high values suggest that they have seen a large increase in usage or adoption.

The box plot also shows a large spread between underperforming protocols and overperforming ones, indicating substantial variation in utilization growth across protocols in the dataset.

One could argue that driving TVL (lending supply and borrowing demand) does not necessarily indicate effective incentives spending, because short term yield farmers can simply supply and borrow liquidity, earn risk-free ARB incentives (assuming the smart contracts are secure), and leave the ecosystem once yields dry up or become more attractive elsewhere. Another caveat is that the impact of incentives is less likely to be significant for protocols that are more established and already have high utilization rates, absent meaningful adjustments to the protocol’s parameters.

For these cases, further research can analyze user activity and retention statistics, providing another layer of insight into the type of activity and breadth of growth. For example, Lampros was able to find the percentage of users who claimed and sold their ARB incentives. This type of analysis can yield insights into specific wallet behaviors and address questions such as what borrowers are doing with their capital.

Note: For lending, we are only analyzing the LTIPP data up until September 10th, as some protocols stopped updating their queries after this date. This may introduce a slight bias toward more positive results, since the analysis excludes the end of September.

Alchemix

Alchemix received 150k ARB and increased its supply by 230.53% during LTIPP when compared to the prior 30 days. Throughout this time, the borrow to supply ratio decreased only slightly, from 40.05% to 38.56%. Borrow-adjusted supply growth for Alchemix was hence 221.98%. This sustained growth could indicate that the post-incentives supply level stayed higher than the pre-incentives level, although this has to be assessed in a later analysis.

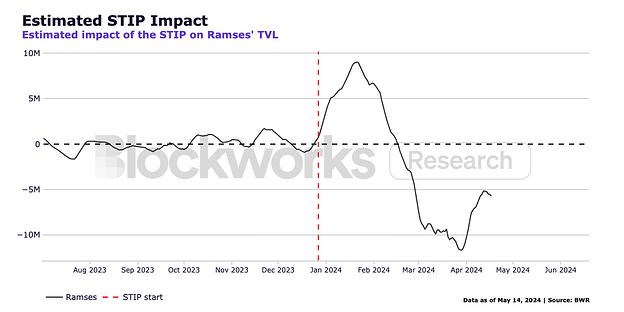

Alchemix planned primarily to incentivize depositors and LPs on Ramses Exchange. The protocol intended to allocate between 33% to 50% of the grant to enhance yields for depositors, thereby attracting more users to Alchemix on Arbitrum. The remainder of the grant was to be directed towards providing liquidity incentives specifically for alUSD and alETH LPers. Distribution of ARB was to be facilitated through direct incentivization on Ramses DEX, allowing LPers to claim their ARB directly from staking contracts. On the depositor side, Alchemix converted ARB into underlying assets to repay debt, although selling ARB is generally avoided unless it enhances the product’s usability or the grant’s effectiveness.

Taking a look at bi-weeklies, it appears that their success may be attributable to a focus on education, marketing, and collaborations. Due to their novel product (self repaying loans), they highlighted an effort to educate users and hosted Twitter spaces throughout the program, which seemed to have brought more liquidity to alAsset pools. They also collaborated with other DeFi protocols, including Gearbox, JonesDAO, and Layer3–two of whom also received LTIPP rewards. Collaborating with other protocols likely increased yields for users and thus attracted more capital. As you will see throughout this report, collaborations are a common thread among successful programs, which intuitively makes sense within the context of a rollup ecosystem.

Primex

Primex Finance received 42k ARB, the lowest amount of incentives from LTIPP. The median borrow-adjusted supply growth for the analyzed period was 166.22%. Supply alone grew by 27.77%, while borrow TVL grew far more sharply, by 646.35%. The borrow to supply ratio increased from 1.9% before LTIPP to 11.11% during.

However, there was a sharp decline in borrow volume throughout September, so it is important to highlight that our analysis is only considering data until the 10th of September (after which date a few protocols, namely Alchemix and Lumin Finance did not provide more updated data). This means that it is possible that Primex would fall from its place as one of the top performers if the rest of the month had been included, indicating that borrow TVL was not sticky.

Primex focused on distributing ARB directly to users. The protocol earmarked 21,000 ARB (50%) for Primex Lenders with the aim of driving TVL growth, while the other 21,000 ARB (50%) was allocated to Primex Traders to stimulate volume growth. Additionally, Primex planned to launch an Achievement System designed to motivate both lenders and traders to engage in competitive activities. This system should enable participants to earn points based on their activity levels, allowing them to track their standings on a publicly accessible leaderboard, thereby fostering a more active user community.

Looking at biweeklies, Primex completed only three (out of seven) bi-weekly reports. While we were unable to gather much information from their bi-weeklies compared to other protocols, they wrote a blog post about their incentives program, in which the top 200 traders and top 200 lenders during six week periods were rewarded 14k ARB.

It is possible this type of incentives strategy creates conditions that attract short term opportunistic behavior, in which users opportunistically create ways of inflating activity to receive rewards. In particular, incentivizing participation through a highly gamified system with a public leaderboard has proven to be less effective for long-term user retention, as demonstrated by our prior research published on the forum as well as results for the perps category later in this report.

It seems as though the borrow growth was substantially larger than the supply growth, which could be attributed to the incentives strategy and the types of activities they induce, such as looping strategies to enhance yield farming returns. Perhaps due to this dynamic, ultimately borrow TVL was not sustained.

Synonym Finance

Synonym Finance received a grant of 400k ARB and exhibited a characteristic pattern of a short-term boost driven by incentives. During the analyzed period, there was a median supply growth of 16.88% and a borrow growth of 43.27%, making for a 20.69% borrow-adjusted supply growth. While the increase is significant, it ranks among the lower performers due to its relatively modest growth compared to other lending protocols, despite receiving a substantial allocation of 400k ARB. This large allocation reduces its borrow-adjusted supply growth when normalized by incentives.

In its application, Synonym planned to combine its ARB incentives with the existing SYNO emissions according to its pre-established incentives schedule. The protocol focused its bonus ARB incentives on borrowers to encourage increased activity and efficient capital utilization. A total of 400K ARB was planned for deployment alongside SYNO emissions, with 60% allocated to borrowers and 40% to depositors. These incentives would be implemented across various markets, including USDC, ARB, and forthcoming LRT markets, with weekly epochs to monitor their effectiveness.

Looking at biweeklies, nothing stands out. While it is clear that ARB incentives drive activity and growth, it does not necessarily mean the activity and growth is sticky. Protocols have to maintain activity and growth through other means such as having a great product, being highly engaged with the community, or integrating with other protocols. We were unable to observe qualitative factors due to the lack of information in biweeklies. This lack of participation or following guidelines laid out by LTIPP speaks to a broader implication for the DAO on how it can hold protocols receiving ARB incentives accountable to reporting requirements.

Gravita

Gravita is the only lending protocol where we saw a decline in borrow-adjusted supply. Supply TVL decreased 25.97% during LTIPP and borrow-to-supply ratio also decreased slightly, making a total decrease of 28.29% in borrow adjusted supply. Gravita’s borrow and supply TVL gradually decreasing throughout the program is very unusual.

Gravita has outlined several incentivization mechanisms aimed at enhancing liquidity provisioning and increasing demand for its GRAI token. The protocol planned to provide competitive returns to liquidity providers and create more utility for GRAI by onboarding lending platforms and partnering with strategic platforms.

Looking at biweeklies and reviewing the documentation, the incentives program appears to be more complex or cumbersome than most. It involves purchasing vesting ARB (goARB) at a discount that ranges from a minimum of 20% to a maximum of 100% after a 40 week vesting period. In other words, users can acquire ARB at zero cost if it is fully vested over 40 weeks, or they can acquire it earlier by paying a discounted price, with the discount vesting linearly over time. Given this additional friction, users likely flock to other lending protocols that offer similar yields with less complexity. The design could also reduce users’ propensity to participate, since users may prefer simply receiving ARB for using the protocol.
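To illustrate the friction described above, here is a minimal sketch of a linearly vesting discount. It assumes a straight line from a 20% discount at week 0 to a 100% discount at week 40, which is our reading of the description rather than Gravita's actual contract logic; all function and parameter names are hypothetical:

```python
def goarb_discount(weeks_vested: float,
                   min_discount: float = 0.20,
                   max_discount: float = 1.00,
                   vesting_weeks: float = 40.0) -> float:
    """Discount on ARB after `weeks_vested`, assuming linear vesting (an assumption)."""
    progress = min(max(weeks_vested / vesting_weeks, 0.0), 1.0)  # clamp to [0, 1]
    return min_discount + (max_discount - min_discount) * progress


def cost_to_acquire(arb_amount: float, arb_price_usd: float, weeks_vested: float) -> float:
    """USD a user would pay for `arb_amount` ARB at the vested discount."""
    return arb_amount * arb_price_usd * (1.0 - goarb_discount(weeks_vested))


for weeks in (0, 10, 20, 40):
    cost = cost_to_acquire(100, 1.13, weeks)
    print(f"week {weeks:>2}: discount {goarb_discount(weeks):.0%}, cost for 100 ARB ${cost:.2f}")
```

Under these assumptions, a user must either pay most of the market price up front or wait the full 40 weeks, which is the kind of trade-off that a plain ARB reward does not impose.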

It is worth noting that Gravita’s strategy using the goARB model has faced challenges, as evidenced by Umami’s previous unsuccessful attempt to implement a similar strategy during the STIP, which ultimately led to a shift in their approach. This had already been identified in our STIP retroactive analysis.

In phase 6, they terminated the program and noted “incentives are not sufficient to fix a “broken” product,” while in the following phase they wrote, “overall a success in terms of expanding user base.” Based on data in our results, evaluating these mixed signals from the bi-weeklies is not straightforward.

DEX Category

For the DEX category, we looked at Clipper, DODO, Gyroscope, Integral, Uniswap, and PancakeSwap. We did not analyze Poolside or Symbiosis in our study because we were unable to pull the relevant metrics from their Dune dashboard per OBL’s guidelines or DefiLlama. Some protocols exclusively showed incentivized pools in their Dune dashboard. However, for others, we needed to source data from DefiLlama. To maintain consistency and fairness across all protocols, we ultimately decided to rely on DefiLlama data for our analysis of this vertical, as comparing growth metrics from only incentivized pools against overall metrics would not provide a fair assessment.

Methodology

For each protocol, we collected trading volume and TVL data from before and throughout the duration of LTIPP, calculated the growth in both metrics from the 30 day median before LTIPP to the median throughout LTIPP, derived a volume-adjusted TVL metric, and normalized this metric by total ARB claimed. The equations applied in this analysis are detailed below.

$$G_{adj} = G_{TVL} \times \left(\frac{R_d}{R_b}\right)^{\operatorname{sgn}(G_{TVL})}$$

Where:

- $G_{TVL}$ is the TVL growth,
- $R_d$ is the volume-to-TVL ratio during the incentive period, $R_d = \frac{\text{volume}_d}{\text{TVL}_d}$,
- $R_b$ is the volume-to-TVL ratio before the incentive period, $R_b = \frac{\text{volume}_b}{\text{TVL}_b}$,
- $\operatorname{sgn}(G_{TVL})$ is the signum function, which returns +1 if $G_{TVL} > 0$, -1 if $G_{TVL} < 0$, and 0 if $G_{TVL} = 0$.

$$G_{norm} = \frac{G_{adj}}{\log_{10}(A)}$$

Where $A$ is the amount of ARB claimed by the protocol.

We then ran a significance test on the normalized metric to deduce which protocols outperformed and underperformed and then reviewed their bi-weeklies to glean qualitative insights regarding what types of incentives strategies work and which ones do not.

Sustained TVL growth that is not backed by volume can often lack significance, as it may quickly diminish once incentives are withdrawn. In DEXs, the primary goal of incentivizing liquidity is to create a flywheel effect that attracts more users - both traders and liquidity providers - due to enhanced protocol performance. Ideally, liquidity incentives initially draw in more LPs, boosting TVL, which in turn attracts traders seeking improved price execution. Increased trading activity generates higher fees, benefiting LPs through greater yields. Ultimately, while some liquidity may leave when incentives are removed, a portion should remain due to the enhanced yields, resulting in both elevated TVL and volume levels compared to pre-incentive metrics. This is why we developed the volume-adjusted TVL growth metric, which penalizes TVL growth when the volume-to-TVL ratio decreases and amplifies growth when the ratio increases.
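The penalize-and-amplify behavior described above can be sketched in a few lines. The form used here, TVL growth multiplied by the change in the volume-to-TVL ratio raised to the sign of the TVL growth, is an assumption inferred from the Gyroscope and Clipper figures reported below; the function name is hypothetical:

```python
def volume_adjusted_tvl_growth(tvl_growth_pct: float, volume_growth_pct: float) -> float:
    """TVL growth (%) scaled by the change in the volume-to-TVL ratio.

    The ratio change follows from the two growth rates:
    (1 + volume growth) / (1 + TVL growth).
    """
    ratio_change = (1.0 + volume_growth_pct / 100.0) / (1.0 + tvl_growth_pct / 100.0)
    sgn = (tvl_growth_pct > 0) - (tvl_growth_pct < 0)
    return tvl_growth_pct * ratio_change ** sgn


# Gyroscope: TVL grew far faster than volume, so the metric is penalized.
gyroscope = volume_adjusted_tvl_growth(10_715.10, 773.42)
# Clipper: volume grew faster than TVL, so the metric is amplified.
clipper = volume_adjusted_tvl_growth(42.66, 264.8)
print(f"Gyroscope: {gyroscope:.1f}%, Clipper: {clipper:.1f}%")
```

Both results come out close to the 865.35% and 109.1% reported in the protocol sections, with small differences attributable to rounding in the quoted inputs.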

When measuring the impact of claimed ARB amounts on volume-adjusted TVL for the DEX cohort, Gyroscope, PancakeSwap, Integral, and Clipper had significant results. Gyroscope was the best performer, followed by Clipper. Integral and PancakeSwap were at the median. DODO had the worst performance, followed by Uniswap. Gyroscope stands out as an outlier amongst the participating DEXs.

Uniswap and DODO fall in the lower 25% (Q1 - first quartile) of our data, growing normalized volume-adjusted TVL by 0.65% and -0.14%, respectively. This shows DODO experienced negative growth. Additionally, Uniswap’s growth was not deemed statistically significant based on the applied test. The absent and negative volume-adjusted TVL growth implies that these protocols saw a stable or decreased amount of usage over the period analyzed, potentially signaling that users are migrating away, incentives could have been allocated more effectively to boost utilization, or there are challenges impacting their usage such as market conditions or size.

Gyroscope and Clipper fall in the higher 25% (Q3 - third quartile) of our data, indicating that they experienced significant positive growth (173.07% and 20.81%, respectively). The high values suggest that they have seen a large increase in usage or adoption.

The box plot reveals that Gyroscope stands out as a clear outlier, exhibiting substantial growth compared to the rest of the group.
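The normalized figures above appear consistent with dividing each protocol's volume-adjusted TVL growth by the base-10 logarithm of its ARB amount. The sketch below treats that log scaling as an assumption inferred from the reported numbers, and also assumes the ARB claimed equals the allocation quoted for each protocol; the function name is hypothetical:

```python
import math


def normalize_by_arb(adjusted_growth_pct: float, arb_claimed: float) -> float:
    """Adjusted growth (%) divided by log10 of ARB claimed (assumed normalization)."""
    return adjusted_growth_pct / math.log10(arb_claimed)


# Volume-adjusted TVL growth and ARB allocations quoted in the protocol sections.
gyroscope_norm = normalize_by_arb(865.35, 100_000)
clipper_norm = normalize_by_arb(109.1, 174_308)
print(f"Gyroscope: {gyroscope_norm:.1f}%, Clipper: {clipper_norm:.1f}%")
```

A log scale dampens the effect of allocation size, so a protocol with ten times the ARB is only penalized by one additional unit in the denominator rather than by a factor of ten.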

Gyroscope

Gyroscope received 100k ARB, the smallest allocation of ARB incentives in the DEX category. Initial TVL and volume levels were very low compared to others in the vertical, creating fertile conditions for a positive flywheel to take place (e.g. liquidity mining attracts LPs, which improves trade execution and thus increases trade volume, which in turn leads to more fees for LPs, increasing their return on capital, which should naturally attract more LPs). The goal would be to reach a new equilibrium between liquidity and trade volume that remains higher even after liquidity mining incentives are removed, thus retaining LPs.

Median TVL on Gyroscope grew by $13.5M ($1.4M more than Uniswap’s TVL increase), which amounts to 10,715.10% growth. Volume increased by 773.42%, resulting in a roughly 92% decrease in the volume-to-TVL ratio. Our volume-adjusted TVL growth metric of 865.35% reflects a penalization of TVL growth due to the disproportionate increase in TVL that was not sustained by trading volume.

Gyroscope has laid plans to use its 100,000 ARB grant to incentivize liquidity pools (E-CLPs) in two main categories: 33.33% of the ARB would be allocated to LST E-CLPs (~33,000 ARB), and 66.67% would go to stablecoin E-CLPs (~67,000 ARB), distributed weekly. The goal is to enhance liquidity provision for both stablecoin and LST pools, including upcoming pools like GYD/USDC and GYD/DAI.

The funds would be used to incentivize LPs, boost capital efficiency with new, concentrated liquidity pools, and promote long-term liquidity stickiness by minimizing liquidity withdrawal friction. Gyroscope also introduced a points system to reward LPs and create a liquidity network using GYD as a settlement asset to generate organic yield for LPs. Additionally, they plan to build trust with the GYD stablecoin by promoting its automated risk-control features.

Looking at biweeklies, Gyroscope also received additional rewards, and subsequently TVL, from other programs such as Aave. This is likely why it was the best performer and an outlier. While this result could be considered an unfair evaluation (normalizing for multiple incentive programs might provide more balanced results), the composable nature of DeFi makes it worth highlighting how significant collaboration and composability are for incentive programs. Instead of discounting partnerships, highlighting them captures a protocol’s business development efforts and product. In addition, protocols that worked together or had partnerships during LTIPP tended to produce more successful results (e.g. Alchemix).

It is also worth noting that ARB rewards were also used to obtain vlAURA votes (i.e. a mechanism by which users can bribe the protocol to direct incentives to specific pools). This translated to higher incentives for LPs due to Balancer’s vote matching, making specific Gyroscope pools even more attractive places to LP. Again, this clever mechanism and strategy speaks to the power of composability and the ability for protocols to enter mutually beneficial partnerships.

Clipper

Clipper received 174.308k ARB, the second smallest allocation amongst DEXs. Clipper’s TVL increased from around $1M to $1.4M during the program, a significant increase of 42.66%. Interestingly, Clipper’s daily volume increased more than TVL, namely by 264.8%. This raised the volume-to-TVL ratio by roughly 156%, from 7.26% to 18.56%.

This sustained increase in volume alongside TVL growth could generate stickier liquidity and healthier metrics for the protocol in the long run. The LTIPP provided a volume-adjusted TVL growth of 109.1%.

According to their application, the goal of the program was to grow the number of LPs and TVL by specifically targeting new LPs and new TVL. They incentivized migrating from other chains to Arbitrum and pro-rated the allocation so that LPs who moved positions earlier received more.

Looking at biweeklies, Clipper highlighted interviewing LPs about their experience to create a more compelling incentives structure. This level of attention to detail and to their users was not common among observed LTIPP recipients, at least according to bi-weekly reporting. One piece of feedback they received was to shift incentives from referrals to LPs, since the efficacy of referrals is unclear: defending against sybil attacks with referrals is not straightforward, and referrals do not necessarily attract users who are genuinely interested in the protocol. This observation was highlighted here and in other programs’ bi-weeklies.

Aside from interviewing LPs, we could not identify any outstanding or unique strategies. Rather, the success of Clipper’s program can be attributed to basic factors, including having a clear case for incentives (i.e. a novel product), having a clear direction for incentives (LPs), having a nimble approach to adjusting the incentives strategy (gaining feedback and making adjustments), and having a product that users (LPs) actually value. This perhaps speaks to the idea that simple strategies with good products are good strategies.

In fact, Clipper is a DEX that uses novel rebalancing strategies to generate higher returns for LPs. As such, arguably, Clipper is better positioned to take advantage of the aforementioned flywheel effect. Moreover, this potentially points to a broader discussion regarding allocating ARB incentives to DEXs, that the DAO should allocate incentives to DEXs that increase LP returns.

Dodo

Dodo received 350k ARB. Both TVL and volume declined sharply by the end of the program, reverting to initial levels and nullifying the growth. Initial TVL was quite low, so larger growth conducive to a positive flywheel effect could have taken place. When considering median values before and for the full duration of the program, no growth was observed; in fact, we noticed a slight decrease of 2.41% in TVL and a slight increase of 2.09% in volume. Given the initial level of volume and TVL, the ARB allocation received was disproportionately large compared to other protocols in the vertical. When an incentive allocation is disproportionately large relative to the size of the protocol, we’d expect incentives to drive disproportionately more volume and TVL, as shown by protocols that grew a lot from zero or low TVL. This suggests either that there is additional activity we have not accounted for (such as spending incentives on another protocol), or that the incentive allocation was particularly inefficient.

The stated objectives from their application were (1) to enhance liquidity, minimize slippage, and boost market-making activities for stablecoins and (2) to accelerate bridging assets to Arbitrum and elevate Arbitrum’s onchain trading experience. To do so, there were four strategies: (1) boost TVL and trading volume through higher APR, (2) establish partnerships and reward addresses trading specific tokens, (3) incentivize innovative projects and drive liquidity for new assets, and (4) create a dPoints System to facilitate ARB distributions.

Looking at biweeklies, the bulk of Dodo’s incentives were allocated to solvBTC and liquid restaking tokens (weETH and ezETH). There was limited information regarding the choices for these decisions and adjustments throughout the program. Each of these pools is associated with relatively new assets (as planned). However, it is possible new assets are less palatable for LPs, if the rewards do not justify the additional exposure to newer assets. For what it’s worth, Pancakeswap added a solvBTC pool as part of their incentives strategy but quickly noted that growth in that pool was much slower than other pools and decided to shut the incentives off.

In addition, from an LP’s perspective, it is more profitable to LP pools that are also generating swap fees in addition to ARB incentives. Thus, if the pool doesn’t have sufficient liquidity or a mechanism that improves LP performance, unless incentives can pay for enough additional liquidity that tightens spread to a competitive level, then it is unlikely incentives will generate trade volume and thus additional fees to offset the risk of passively making that market. In other words, if a pool still cannot compete with existing pools, it is likely that another pool is a more effective allocation of incentives. See Gauntlet’s incentives strategy for allocating incentives to drive better price execution and thus more trading activity on specific Uniswap pools.

Moreover, when evaluating incentives for DEXs, it is important to consider the AMM’s design and the amount of incentives that would drive more competition. It is possible that taking a more opinionated stance on DEXs and liquidity within the Arbitrum ecosystem can generate more positive results.

Uniswap

Uniswap received 1 million ARB, more than double the allocation of the next highest recipient. Given that Uniswap had the largest TVL in the group, it is expected that its growth would be relatively modest. This is due to the proven phenomenon of diminishing returns on additional liquidity (e.g. here) and its impact on trading volume for several reasons.

First, when liquidity is already abundant, price execution is likely highly efficient compared to other onchain liquidity sources, leaving little room for improvement. Additionally, a portion of trades are often routed sub-optimally due to factors such as traders’ personal preferences, differences in user interfaces, or protocol fees. Lastly, adding liquidity to long tail assets does not necessarily imply more activity, unless, for example, price discovery for the token is outside of Arbitrum, or the underlying fundamentals for the token create conditions in which users have a reason to transact. As a result, even if enhanced price execution theoretically leads to more trades being routed to a specific exchange, the trading activity and volume depends on other factors, which can potentially limit total routable volume.

As a whole, median TVL grew by $12.1M or 4.35% when comparing the month before LTIPP to the program period, which is not a significant amount. This does not mean that individual pools did not perform well. Gauntlet shared some initial results that look positive for the incentivization of a select group of Uniswap pools on Arbitrum.

The 1M ARB allocation is, again, more than double the next-highest allocation, so this penalizes Uniswap on our efficiency-focused metric. The volume-to-TVL ratio decreased slightly, from 96.00% in the month before to 85.94% during the program. For a complete breakdown of Uniswap’s (previous) incentive program, see here. This should provide context for how Gauntlet distributes ARB incentives. They deploy a sophisticated strategy that optimizes for improving pools where more liquidity would yield better price execution.

However, while these results are promising at a protocol-specific level (i.e. ARB incentives were able to effectively raise TVL in specific Uniswap pools), in comparison to other DEX protocols, the impact of ARB incentives on Uniswap was not significant. Intuitively, this makes sense: the larger a protocol is, the stronger the diminishing returns, i.e. 1 ARB has a larger impact on smaller protocols.

Furthermore, it is also intuitive that protocols like Uniswap that already have strong PMF and significant cash flows arguably do not fall into the category of protocols that need ARB incentives. Simply put, spending ARB on protocols that have higher ROI is a much more effective way to generate successful results for an incentive program.

Another takeaway is, our methodology does not offer a fair comparison for larger protocols like Uniswap, and future incentive programs and analysis should differentiate between newer protocols and those with PMF. This differentiation could boil down to amount received, the type of support from the DAO, goals, or impact on the ecosystem.

Perps Category

For the perps category, we looked at Aark, LOGX, APX Finance, CVI Finance, and Okto. We did not analyze Synthetix because it appears they only used ARB rewards to bootstrap collateral in vaults and planned to go live with trading after LTIPP. Audits for the multi-collateral contracts were delayed for months, so trading never went live while LPs were issued incentives to stay. Once LTIPP ended, they launched trading and filed for an extension to use the remaining ARB, which was denied. Hence, we excluded them from our study, and we urge the DAO to be more thorough about incentives, as it makes little sense to allocate incentives to protocols that aren’t ready for them. Although Pear Protocol ran a program according to their bi-weeklies, we did not include them in our analysis because we could not find their Dune dashboard per OBL’s guidelines or pull their data from DefiLlama. We are happy to add the data to the study if it is presented.

Methodology

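For each protocol, we collected trading volume data from before and throughout the duration of LTIPP, calculated the growth in volume from the 30-day median before LTIPP to the median throughout LTIPP, and normalized this metric by the log of total ARB claimed:

$$\text{Normalized volume growth} = \frac{\left(\tilde{V}_{\text{during}} - \tilde{V}_{\text{before}}\right) / \tilde{V}_{\text{before}}}{\log(A)}$$

where $\tilde{V}_{\text{before}}$ is the 30-day median daily volume before LTIPP, $\tilde{V}_{\text{during}}$ is the median daily volume throughout LTIPP, and $A$ is the amount of ARB claimed by the protocol.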

We then ran a significance test to deduce which protocols outperformed and underperformed and then reviewed their bi-weeklies to glean qualitative insights regarding what types of incentives strategies work and which ones do not.
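As a rough illustration of the normalization step, the sketch below computes median-to-median volume growth and divides it by the log of ARB claimed, as the Appendix describes. The function name and the flat input series are hypothetical, and the base-10 logarithm is an assumption on our part; it appears consistent with the normalized figures reported for APX, Aark, and LOGX.

```python
import math
from statistics import median


def normalized_volume_growth(volume_before, volume_during, arb_claimed):
    """Median-to-median volume growth (in %) divided by log10 of ARB claimed.

    volume_before: daily volumes for the 30 days before LTIPP
    volume_during: daily volumes throughout LTIPP
    arb_claimed:   total ARB claimed by the protocol (must be > 1)
    """
    base = median(volume_before)
    growth_pct = (median(volume_during) - base) / base * 100
    # Assumption: base-10 log. The report only says "the log of total ARB claimed".
    return growth_pct / math.log10(arb_claimed)


# Hypothetical flat series chosen so the median growth is -61.88%,
# the decline reported for Aark, which claimed 900k ARB.
before = [100.0] * 30
during = [38.12] * 84
print(round(normalized_volume_growth(before, during, 900_000), 2))
```

Dividing by the log rather than the raw amount keeps large allocations from dominating the metric while still penalizing protocols that received more ARB for the same growth.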

When measuring the impact of claimed ARB amounts on volume for the perps cohort, APX Finance, CVI Finance, Okto and Contango all had a significant increase in trading volume. APX Finance was the best performer, followed by CVI Finance. Aark and LOGX were the worst performers according to volume growth normalized by ARB allocation.

LOGX and Aark fall in the lower 25% (Q1 - first quartile) of our data, with normalized volume having decreased by 9.7% and 10.39%, respectively. This shows that both LOGX and Aark experienced negative growth, implying that these protocols saw a decreased amount of usage over the period analyzed, potentially signaling that users are migrating away, incentives could have been allocated more effectively to boost utilization, or there are challenges impacting their usage such as market conditions or size.

APX and CVI Finance fall in the top 25% (above Q3, the third quartile) of our data, indicating that they experienced significant positive growth (47.47% and 31.31%, respectively). The high values suggest that they have seen a large increase in usage or adoption.

The box plot also shows a large spread between underperforming protocols and overperforming ones, indicating substantial variation in volume growth across protocols in the dataset.

APX

APX Finance received 525k ARB. The median daily volume observed was $22.5M, compared to $6M in the month before the program, an increase of 271.55% during LTIPP.

Although APX was a top performer, the sharp decline in volume during the final month of the program signals potentially unsustainable user behavior. This aligns with the earlier market share analysis, which suggests that LTIPP had minimal impact on the perps vertical.

According to their application, the focus of the program was to expand multichain liquidity between BSC and Arbitrum. They planned to distribute 225k ARB to cross-chain ALPs and LPs, targeting a 5x increase for Arbitrum ALPs, from $1.02M to $5.1M, by the end of LTIPP. They also did grant matching to encourage new traders.

Looking at biweeklies, they focused rewards on LP incentives and trading activity, and gave additional rewards for completing Galxe quests and for trading activity/LPing on Pancakeswap, another instance in which a top performer leveraged collaborations. They also hosted a lottery in which one lucky trader won 5k ARB each week. It is also worth noting that when users staked their ALP tokens, they received ARB rewards from both APX and Pancakeswap. Thus, yields were higher relative to other protocols, which might have contributed to their outperformance.

They also focused on marketing throughout the program, and highlighted that frequent communication was necessary to keep users interested. This is something other top performers in LTIPP (like Alchemix) also noted.

CVI

CVI Finance received 125k ARB, and median trading volume grew by 46.77%, when comparing the month before LTIPP to the months during the program. In line with APX, the decline of trading volume towards the end of the program is noticeable.

The stated objectives from their application were: (1) encourage trading activity, (2) amplify liquidity and market stability, and (3) foster widespread trading engagement. To do so, the plan was to dedicate a portion of incentives to LPs, a portion to trading competitions, a portion to trading rebates, and the remainder to ARB raffles based on trading volume.

Looking at biweeklies, they focused rewards on LP incentives. They even reduced the level of rebates to increase rewards for LPs, compensating LPs for their exposure to volatility, and incorporated trading competitions and raffles to boost activity. There was limited information from bi-weeklies.

Aark

Aark received 900k ARB, and when comparing the median daily trading volume of the month before LTIPP and the months during LTIPP, there was a 61.88% decrease. This does not necessarily mean that incentives caused a decrease in the protocol’s volume, but rather that they weren’t effective in preventing it.

For the protocols in the perps category that we analyzed, Aark received a very large allocation of 900k ARB, which contributed to its place in the bottom performers in terms of volume growth normalized by allocation.

The stated objectives from their application were (1) onboard non-Arbitrum users onto Aark, (2) focus rewards and retention campaigns on non-Arbitrum users, and (3) grow TVL via LSTs, LRTs, and RWAs. To do so, they used an airdrop quest to acquire users and offered reduced fees to traders on Aark. They incentivized LPing assets unique to Aark, such as GM tokens, LSTs, and single-sided ARB, BTC, and ETH. They also planned to incentivize affiliates to build a network of non-Arbitrum users through referrals.

Looking at biweeklies, they quickly recognized some sybil behavior from their referral program and decided to reallocate incentives to trading. They also adjusted their new user acquisition strategy and partnered with Stakestone to strengthen LP incentives. It appears Aark was very active in tweaking their strategy to optimize outcomes; adjustments were a common theme in their biweeklies. Oddly, the results did not improve. It is likely the underperformance can be attributed to shortcomings in the perps category as a whole: even outperformers lost activity as soon as incentives were completely distributed.

Interestingly, it appears volume was steadily increasing into April. This can be explained by users farming $AARK leading into the protocol’s TGE, which was delayed to June after initially being slated to go live on April 1st. It is possible the expected return from AARK’s TGE exceeded the expected return from ARB incentives.

LOGX

LogX received 395k ARB and volume decreased by 54.28% during LTIPP when compared with the month before. Volume had been steadily decreasing since April, and the incentives from LTIPP weren’t able to stop this trend.

According to their application, LogX planned to use ARB incentives to acquire more CEX traders, increase trader engagement, and drive growth via referral programs. To retain and engage these traders, they planned weekly competitions, fee rebates, and bonuses. They also planned to incentivize referrals and affiliates.

Looking at biweeklies, they made adjustments to their original ARB grant usage to focus on trading competitions and trading fee rebates. They also added $LOGX rewards for the top trader on the leaderboard and incentivized affiliate partners on a custom, deal-by-deal basis. In mid-August, they ran a massive $100k ARB and 60k LOGX trading campaign to reward traders on the leaderboard. However, volume remained spiky and continued to decline over the duration of LTIPP.

All in all, it appears the perps category is a challenging category for incentives. Some protocols actually saw a decrease in trading volume, and for the top performers that experienced a significant increase, these gains quickly reverted once incentives finished. Incentive strategies for perp exchanges are fairly consistent (e.g. fee rebates, trading competitions, referral programs, and trading volume); however, results are consistently ineffective, especially when considering post-incentives activity. Intuitively, if all perps protocols are offering fee rebates, trading competitions, and referral programs, then users will use the one that is offering the highest reward at the cheapest cost.

The DAO should consider more rigorous analysis of how to allocate ARB incentives to perps exchanges because, based on our methodology, it appears doing so is not an effective spend of capital. Perp protocols should consider investigating different incentives strategies: simply attracting new traders via fee rebates, trading competitions, and referrals may have positive short-term effects but seldom sustains them. Most of the time this activity quickly reverts once incentives have been distributed.

Conclusion

LTIPP had a positive impact on the Arbitrum ecosystem, broadly speaking. However, there are ways to improve the effectiveness of incentive programs on a per ARB (or USD) basis. Here is a list of observations that we believe the DAO can consider for upcoming incentives programs.

- Protocols that formed partnerships during LTIPP generally saw better results. This could be further explored by allocating incentives to protocols that are composable and strengthen the set of existing protocols in the Arbitrum ecosystem, or to programs that explicitly target collaborations and leverage partnerships to more effectively enhance ecosystem-wide metrics. The DAO (or committee running the program) could take more lead on thinking more critically about which protocols are mutually beneficial.

- For the DEX category, protocols that enhance LP experience did really well. Incentives should be allocated to protocols that enhance LP returns because the flywheel is more pronounced per ARB spent when a DEX is more capital-efficient and beneficial to LPs.

- Further, DEXs should prioritize pools based on total revenue potential, including additional swap volume per unit of liquidity. Inefficient pools, or long tail (newer) assets, should be avoided in favor of better options. There may be value in DEX protocols funding research/advisory to help individual protocols optimize incentive allocation, similar to Uniswap’s partnership with Gauntlet.

- Effective marketing, frequent communication, and responsiveness to user feedback are key. The DAO (or committee running the program) should take some lead on promoting LTIPP recipients’ applications and incentive strategies and fostering more engagement.

- LTIPP significantly increased DEX activity on Arbitrum, and it appears the program saved sequencer revenue from its downward spiral. Hence, if the DAO can expect incentives to boost spot trading activity, then it is prudent for the DAO to focus incentives programs after Timeboost goes live. The main implications are that Timeboost is expected to increase sequencer revenues for the DAO, and that increased activity will generate more insights about Timeboost and its impact on users.

- LTIPP did not have an impact on Arbitrum’s perps ecosystem, more broadly, and based on protocol-specific results, incentives were largely ineffective and/or unsustainable. It’s possible that strategies (e.g. fee rebates, trading competitions, referral programs, and trading activity) for perps are easier to game (e.g. users can wash trade, trade delta neutral positions, find loopholes in referral programs, etc). A more thorough analysis of the perps category would be useful for determining how the DAO should think about allocating ARB incentives to perps protocols.

- Complex incentives that involve purchasing vesting ARB (goARB, example from Gravita) can result in additional friction and make users opt for protocols that offer similar features and less complexity.

Further Research Directions

As there are ongoing discussions about establishing an incentives group for future programs, this analysis is meant to be a checkpoint for thinking about best (and worst) practices from LTIPP. We look forward to more in-depth analysis from PYOR and Lampros.

As mentioned, within the remainder of our first ARDC engagement we were unable to find a scalable way to monitor ARB distributions after protocols initially claimed their allocations. It would be prudent and potentially very valuable to track and analyze exactly how protocols distributed ARB. To get ahead of this problem in future incentives programs, further work should be done to standardize reporting requirements and collect the necessary information so that the DAO can easily monitor activity.

In addition, the analysis would benefit from incorporating a few weeks of post-incentive data to assess not only the efficiency of the incentives during the program but also their lasting impact. Comparing the key metrics one month before and one month after the incentive period would provide valuable insights into the sustainability of the growth.

It is also worth noting the difference between allocating incentives to DEXs vs perps. Namely, it appears spending ARB on DEXs has a higher ROI than spending on perps. Furthermore, we encourage the DAO to lean into DEXs. This might include more thorough analysis of DEX designs and developing frameworks for deciding which pools to allocate to, especially as Timeboost goes live, which should increase arbitrage activity across DEXs.

Appendix

Methodology

Given the non-uniform L1 LTIPP data and the limited time left in the ARDC v1, we split LTIPP recipients into cohorts by sector and will focus our analysis on the top 3 sectors (by number of recipients): Lending (10), DEX (8), and Perps (8). These sectors that have the most participants are among the most important drivers for Arbitrum’s growth.

First, we aggregate incentives by sector and compare each sector’s market share against other chains and L2 ecosystems to measure the effectiveness of LTIPP more broadly. Before diving into LTIPP at the protocol level, we want to illustrate the impact of LTIPP on Arbitrum’s market share of important categories. We also review LTIPP’s impact on sequencer revenue. For each metric described in the results, we normalize by market share to avoid any distortion from broader market dynamics. For example, in the case of DEXs, we determine the added TVL by multiplying the increase in market share by the total DEX TVL, allowing us to quantify the impact of the program independently of overall market fluctuations. This has been done in previous analyses, e.g. here. We calculate Arbitrum’s L2 revenue market share by considering its sequencer fees amongst those of other major L2s (Base, Blast, Linea, Optimism, Polygon Zkevm, Scroll, Zksync Era, Zora). We then compare the change in market share before and during the LTIPP program, and multiply this difference by total fees to get the added revenue, normalized by market share. To assess the impact per dollar spent on incentives, we use the ARB price of $1.13 on June 2nd, 2024, the program’s start date.
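The market-share normalization described above can be sketched as follows. This is a minimal illustration under stated assumptions: the function names and input values are hypothetical, and we assume the "total" is the sector-wide total (for example total DEX TVL) over the program period, which the text does not pin down. Only the $1.13 ARB price comes from the methodology.

```python
ARB_PRICE_USD = 1.13  # ARB price on June 2nd, 2024, per the methodology above


def added_by_market_share(share_before, share_during, total_metric):
    """Added TVL, volume, or fees attributed to the program.

    share_before / share_during: Arbitrum's market share as fractions (0..1)
    total_metric: sector-wide total across the chains compared (assumed to be
                  measured over the program period)
    """
    return (share_during - share_before) * total_metric


def added_per_dollar(added_metric, arb_spent, arb_price=ARB_PRICE_USD):
    """Added metric per USD of incentives, valuing ARB at a fixed price."""
    return added_metric / (arb_spent * arb_price)


# Hypothetical inputs, for illustration only (not figures from the report).
added_tvl = added_by_market_share(0.10, 0.12, 1_000_000_000)
print(f"added TVL: ${added_tvl:,.0f}")
print(f"added TVL per $ of incentives: {added_per_dollar(added_tvl, 1_000_000):.2f}")
```

Working in market-share terms means a market-wide rally or drawdown that lifts or lowers every chain equally contributes nothing to the attributed amount.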

Then, we collect protocol level metrics as prescribed by OpenBlock Labs and overlay each phase of ARB claims on charts to illustrate basic visualizations of the impact of incentives on protocol level growth metrics for each protocol. We did not track subsequent ARB distributions for each phase because protocols deployed different strategies for distributing incentives. Following flows for each protocol was not scalable within the time frame of our analysis.

For our analysis, we calculate the 30-day median before LTIPP and the median throughout LTIPP for each protocol level metric. We perform a significance test (Mann-Whitney U-Test) to make sure any observable differences when comparing the period before and during are statistically significant.
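For readers who want to reproduce the significance check, below is a self-contained sketch of a two-sided Mann-Whitney U test. It uses the normal approximation with a tie correction and no continuity correction; those choices, the function names, and the sample data are our assumptions, since the report does not state which variant or library was used.

```python
import math


def average_ranks(values):
    """Rank values from 1..N, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    tie_sizes = []
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1  # sorted positions i..j hold ranks i+1..j+1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        tie_sizes.append(j - i + 1)
        i = j + 1
    return ranks, tie_sizes


def mann_whitney_u(before, during):
    """Return (U statistic of `before`, two-sided p-value)."""
    n1, n2 = len(before), len(during)
    n = n1 + n2
    ranks, tie_sizes = average_ranks(list(before) + list(during))
    u1 = sum(ranks[:n1]) - n1 * (n1 + 1) / 2
    tie_term = sum(t**3 - t for t in tie_sizes) / (n * (n - 1))
    variance = max(0.0, n1 * n2 / 12 * ((n + 1) - tie_term))
    if variance == 0:
        return u1, 1.0  # every observation is identical
    z = (u1 - n1 * n2 / 2) / math.sqrt(variance)
    return u1, math.erfc(abs(z) / math.sqrt(2))


# Hypothetical daily metric: 30 days before vs. 84 days during the program.
before = list(range(1, 31))
during = list(range(100, 184))
u_stat, p_value = mann_whitney_u(before, during)
print(u_stat, p_value < 0.05)
```

A rank-based test suits this setting because daily volume and TVL series are skewed and spiky, so comparing ranks is less sensitive to a few extreme days than comparing means.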

We then normalized metrics by the log of total ARB claimed. We decided to use claim amounts for a couple of reasons. One, protocols distributed ARB very differently, making it more challenging both to scale ARB tracking and to apply a consistent methodology across protocols. Two, by normalizing growth by claim amount, we are able to provide a lower bound for how each protocol performed: we assume protocols spent all the ARB they were granted, so protocols that did not spend all of it would have more effective results than reported. Further analysis that is able to precisely track the ARB spent could enhance the findings from this analysis and also detect any misuse of funds (like in previous programs), both of which would be valuable to the DAO.

We take the distribution of protocol metric growth by category and make a boxplot to check for outperformers and underperformers. Then, we select the protocols at the extremes, below the first quartile and above the third quartile (i.e. the bottom 25% and top 25%), and review their bi-weekly updates to observe qualitative factors that contributed to their performance.
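The quartile-based selection can be sketched with Python's standard library. The protocol names and growth values below are placeholders rather than figures from the report, and the "inclusive" quantile method is an assumption, since the report does not specify a quartile convention.

```python
from statistics import quantiles


def flag_by_quartile(normalized_growth):
    """Split protocols into underperformers (<= Q1) and outperformers (>= Q3).

    normalized_growth: dict mapping protocol name -> normalized growth metric
    """
    q1, _median, q3 = quantiles(normalized_growth.values(), n=4, method="inclusive")
    by_value = sorted(normalized_growth, key=normalized_growth.get)
    underperformers = [name for name in by_value if normalized_growth[name] <= q1]
    outperformers = [name for name in by_value if normalized_growth[name] >= q3]
    return underperformers, outperformers


# Placeholder cohort, for illustration only.
cohort = {"A": -10.0, "B": -5.0, "C": 0.0, "D": 5.0, "E": 10.0, "F": 40.0}
under, over = flag_by_quartile(cohort)
print("underperformers:", under)
print("outperformers:", over)
```

With cohorts of only eight to ten protocols, the quartile cut-offs are sensitive to the interpolation convention, so the flagged set is best treated as a shortlist for qualitative review.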

References

Results and Analysis: Uniswap Arbitrum Liquidity Mining Program

STIP Analysis of Operations and Incentive Mechanisms

STIP Retroactive Analysis – Perp DEX Volume

Timeboost Revenue and LP Impact Analysis

https://x.com/gauntlet_xyz/status/1839294330911207782