Introduction

This document presents the midterm report of ArbitrumDAO’s Delegate Incentive Program (DIP), managed by SEEDGov in its role as Program Manager. It covers the period from November 2024 to April 2025 and includes both quantitative and qualitative analysis of the program’s performance, as well as the updates implemented, the accountability mechanisms used, and the observed results in terms of delegate participation.

Six months into its implementation, DIP v1.6 has enabled the collection of a robust dataset on how incentives operate within the governance ecosystem. This report aims to systematize that information, identify relevant patterns, and offer a comprehensive overview of how the program has evolved within a broader context of DAO-wide changes.

While this document does not detail the proposed changes, it is intended to inform future collective reflection on how to continue strengthening the alignment between incentives, effective participation, and the DAO’s strategic needs.

Executive Summary

DIP v1.6 aimed to improve the efficiency of the delegate incentive program while introducing more precise evaluation and accountability mechanisms. Over the last three months, the program saw substantial improvements in spending control, a more targeted use of incentives, and a reduction in the spread of low-impact contributions. There was also sustained—and in some cases growing—participation from high Voting Power delegates, which was crucial to meeting quorum requirements.

At the same time, the analysis highlights some persistent challenges: increasing concentration of Voting Power, dependence on a small number of delegates to reach quorum, and signs of disincentivization among profiles with low or medium voting power. Additionally, some metrics suggest the need to further evaluate the relationship between effort, impact, and compensation.

Notably, a significant portion of the incentives distributed to low-VP profiles stem from activities not directly related to voting—such as Delegates’ Feedback or Bonus Points. While this reflects meaningful qualitative contributions, it also raises concerns regarding proportionality and efficiency in the current model. Although these contributions enrich DAO discussions and community building, their assessment relies on subjective criteria that require careful interpretation by the Program Managers.

In this regard, the section on disputes shows that an increasing share of monthly operational work has focused on resolving disagreements related to these assessments. While this process has yielded valuable lessons, given that DIP is inherently iterative, it has also incurred time and energy costs for both delegates and the implementation team.

Taken together, these findings not only highlight the progress made so far but also provide useful elements to guide future discussions around the program’s design and objectives. This report, therefore, aims to bring clarity, promote transparency, and contribute to a more informed conversation about the path forward for the DIP within the ArbitrumDAO ecosystem.

TL;DR

- Participation has remained steady, with high-VP delegates playing a key role in reaching quorum.

- DAO activity has decreased, with fewer proposals reaching Snapshot and Tally.

- Spending efficiency improved significantly after the 1.6 updates.

- Smaller delegates continue contributing through DF and Bonus Points, though at a higher cost.

- Disputes over subjective scoring have increased and are impacting program bandwidth.

- Security Council elections saw record VP participation after being tied to DIP rewards.

- New version incoming

1. KEY METRICS OVERVIEW AND ANALYSIS

Voting History

The following analysis covers all voting activity from the inception of the DAO in early 2023 through April 30, 2025.

Note: For the purpose of assigning proposals to a given date range, the proposal’s end date is used.

Note 2: Test and cancelled proposals are excluded.

- Snapshot: 363 total proposals, averaging 14.52 proposals per month (25 months operational).

  - 131 proposals in 2023, averaging 14.5 proposals per month (9 months operational).

  - 212 proposals in 2024, averaging 17.67 proposals per month (+21.86% YoY).

  - 20 proposals in 2025, averaging 5 proposals per month (-71.70% YoY; 4 months operational).

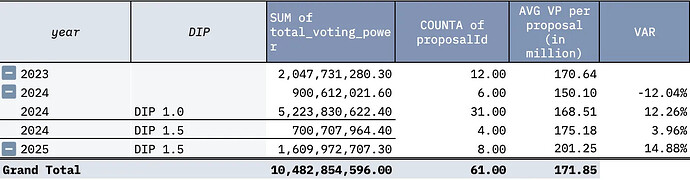

- Tally: 61 total proposals, averaging 2.44 proposals per month (25 months operational).

  - 12 proposals in 2023, averaging 1.33 proposals per month (9 months operational).

  - 41 proposals in 2024, averaging 3.42 proposals per month (+157.14% YoY).

  - 8 proposals in 2025, averaging 2 proposals per month (-41.52% YoY; 4 months operational).

The trend appears to have shifted, particularly in the final months of 2024 and early 2025. While it’s important to note that Snapshot activity in 2024 was boosted by voting on STIP.B (18 proposals) and LTIPP (91 proposals), even when excluding those, the average remains slightly over 8 proposals per month, still well above the 5 proposals per month seen in the first 4 months of 2025. Taking 8 proposals per month as the adjusted baseline, this reflects a drop of roughly 37.5% in Snapshot activity relative to 2024.

In terms of Tally proposals, comparing the first four months of 2024 to the same period in 2025 (for a more accurate seasonal comparison), there were 12 vs. 8 proposals respectively, showing a decline from 3 to 2 proposals per month (-33.33% YoY).
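The adjusted comparison above can be reproduced with a few lines of arithmetic. The following is a minimal sketch that uses only the proposal counts quoted in this section; the helper names (`monthly_rate`, `pct_change`) are illustrative and not part of any DIP tooling. It also shows that the 37.5% figure corresponds to a baseline rounded to 8 proposals per month, while the unrounded baseline of about 8.58 gives a somewhat larger drop.

```python
def monthly_rate(proposals: int, months: int) -> float:
    """Average number of proposals per month."""
    return proposals / months


def pct_change(new: float, old: float) -> float:
    """Percentage change from `old` to `new`."""
    return (new - old) / old * 100


# Snapshot 2024, excluding the STIP.B (18) and LTIPP (91) votes.
adjusted_2024 = monthly_rate(212 - 18 - 91, 12)
rate_2025 = monthly_rate(20, 4)

drop_vs_rounded = pct_change(rate_2025, 8.0)          # baseline rounded to 8
drop_vs_exact = pct_change(rate_2025, adjusted_2024)  # unrounded baseline

# Tally, January to April 2024 (12 proposals) vs. the same months of 2025 (8).
tally_change = pct_change(monthly_rate(8, 4), monthly_rate(12, 4))

print(round(adjusted_2024, 2))   # 8.58
print(round(drop_vs_rounded, 2)) # -37.5
print(round(drop_vs_exact, 2))   # -41.75
print(round(tally_change, 2))    # -33.33
```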

This contrast in activity may be attributed to several structural changes within the DAO. Throughout 2024, ArbitrumDAO continued its institutionalization process by forming various committees and working groups, such as Arbitrum Gaming Ventures, STEP, ADPC, MSS, and the Questbook Domain Allocators, tasked with making strategic decisions on behalf of the DAO across different verticals. This new governance architecture introduced a more structured filtering process, reducing the number of proposals that reach the forum and, ultimately, a vote (whether offchain or onchain).

Additionally, the DAO funded Entropy, a service provider whose explicit mandate is to accelerate and professionalize governance. Among other responsibilities, Entropy reviews, refines, and channels proposals before they are published in the forum or reach a Snapshot vote. In this sense, the DAO has delegated a significant portion of its operational governance work to specialized contributors, prioritizing efficiency and quality over volume.

It’s also worth noting that, starting in 2025, both Arbitrum Foundation and Offchain Labs have taken on a more active role, more openly communicating their opinions, preferences, and alignment around proposals. This increase in “institutional clarity” may also be contributing to the drop in proposal volume by offering more defined direction from the early stages of each governance process.

Taken together, these changes reflect an evolution in how ArbitrumDAO operates: a shift from a purely open, participatory governance model to one that is more structured, strategic, and curated.

Finally, it’s important to stress that a higher number of proposals does not necessarily equate to higher quality. In fact, this reduction in proposal volume may be interpreted as a sign of maturity, evidence that the DAO is optimizing its processes, improving the quality of its signal filtering, and focusing on fewer but higher-impact decisions.

Delegates Participation

Number of voters

The following data have been extracted from Snapshot and Tally.

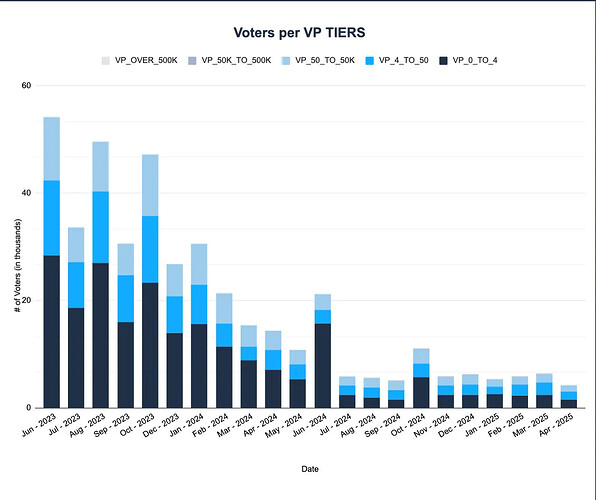

Snapshot:

- In 2023: 3,380,028 votes were cast on 131 proposals.

  - Average: 25,801 votes per proposal.

- In 2024: 2,100,739 votes were cast on 212 proposals.

  - Average: 9,909.15 votes per proposal (-61.6% YoY).

- In 2025: 68,284 votes were cast on 20 proposals.

  - Average: 3,414.20 votes per proposal (-65.55% YoY).

Tally:

- In 2023: 390,775 votes were cast on 12 proposals.

  - Average: 32,564.58 votes per proposal.

- In 2024: 322,891 votes were cast on 41 proposals.

  - Average: 7,875.39 votes per proposal (-75.82% YoY).

- In 2025: 38,309 votes were cast on 8 proposals.

  - Average: 4,788.63 votes per proposal (-39.20% YoY).

It is important to note that, although the numbers are high, approximately 40% of the voters hold between 0 and 4 ARB of VP (this is expanded on below). This suggests that they could be bots or “farmers”, although there is no certainty in this regard.

Voting Power Cast

From the beginning of the DAO until April of 2025, we had 424 proposals, between Snapshot voting (363 proposals) and Tally voting (61 proposals).

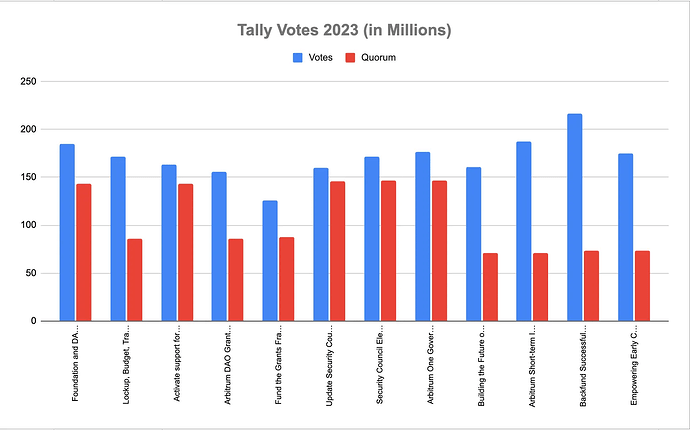

Tally Votes

Snapshot Votes

From the above graphs, we deduce the following:

- For off-chain votes (Snapshot):

  - 2023: 131 proposals, averaging 142.27M VP.

  - 2024: 212 proposals, averaging 150.61M VP (+5.86% YoY).

  - 2025: 20 proposals, averaging 162.12M VP (+7.64% YoY).

- For on-chain votes (Tally):

  - 2023: 12 proposals, averaging 170.65M VP.

  - 2024: 41 proposals, averaging 166.47M VP (-2.45% YoY).

  - 2025: 8 proposals, averaging 201.25M VP (+20.89% YoY).

When analyzing both the number of voters and the average Voting Power (VP) cast across proposals, we observe a clear trend: although the number of individual voters has been decreasing year over year, the amount of VP participating in each vote has increased. This is particularly evident in on-chain votes—while reaching 150M ARB used to be a challenge in early 2024, since Q4 2024 and throughout Q1 2025, participation has stabilized around the 200M ARB mark.

An important data point is that, when breaking down the 2024 voting activity into pre- and post-DIP periods, we observe the following:

The average VP per proposal in early 2024 was on a downward trend compared to 2023. However, following the implementation of the DIP, there was a clear recovery. By the end of 2024, with DIP 1.5 in place, participation levels even surpassed those of 2023. Below, we will analyze how much of this VP was directly contributed by DIP participants, although the causal relationship between the 2024 rise in average VP and the launch of the program is already evident.

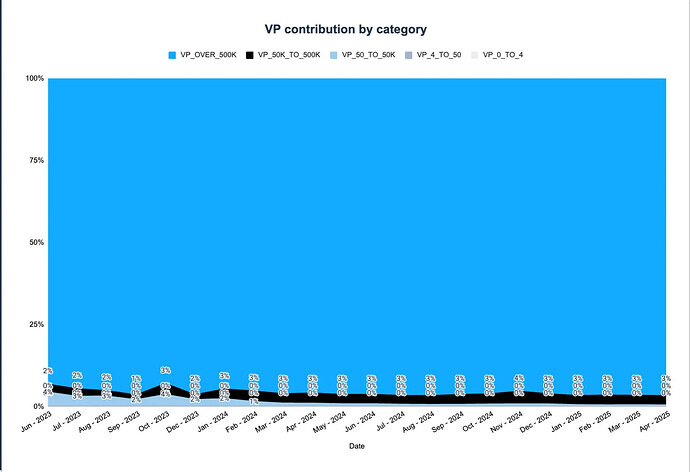

Voting Power Distribution

Now that we have analyzed both the number of voters and the average Voting Power (VP) participating in different votes, it’s time to understand how each voter contributes to the DAO’s goal of reaching quorum, and how the distribution of Voting Power has evolved over time.

For this analysis, we have classified voters into ranges based on their total VP. These ranges were selected because they reflect the natural clustering of delegates within each band:

- 0 - 4

- 4 - 50

- 50 - 5000

- 5000 - 50k

- 50k - 500k

- More than 500k
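As an illustration of how such a classification could be implemented, the sketch below assigns a voter to one of the bands listed above and computes each band's share of voters and of total VP for a single vote. The report does not say which band a boundary value belongs to, so the code assumes upper bounds are inclusive (a voter with exactly 4 ARB falls in "0 - 4"). The function names and the sample values in the usage note are hypothetical.

```python
from bisect import bisect_left
from collections import defaultdict

# Upper bounds (in ARB) of every band except the open-ended top one.
# Assumption: upper bounds are inclusive; the report does not specify this.
UPPER_BOUNDS = [4, 50, 5_000, 50_000, 500_000]
LABELS = ["0 - 4", "4 - 50", "50 - 5000", "5000 - 50k", "50k - 500k", "More than 500k"]


def vp_band(vp: float) -> str:
    """Return the label of the band that a voter with `vp` ARB falls into."""
    if vp < 0:
        raise ValueError("voting power cannot be negative")
    return LABELS[bisect_left(UPPER_BOUNDS, vp)]


def band_shares(voting_powers: list[float]) -> dict[str, tuple[float, float]]:
    """Map each non-empty band to (share of voters, share of total VP)."""
    voters = defaultdict(int)
    power = defaultdict(float)
    for vp in voting_powers:
        band = vp_band(vp)
        voters[band] += 1
        power[band] += vp
    total_voters = len(voting_powers)
    total_power = sum(voting_powers)
    return {
        label: (
            voters[label] / total_voters,
            power[label] / total_power if total_power else 0.0,
        )
        for label in LABELS
        if voters[label]
    }
```

For example, `band_shares([1, 3, 100, 1_000_000])` reports that half of the voters sit in the "0 - 4" band while almost all of the VP sits in "More than 500k", the same shape of concentration described in this section.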

The following graphs show the percentage of voters by VP rank for each vote:

As previously mentioned, the total number of voters has decreased, and a significant portion of those who have stopped participating hold between 0 and 5,000 delegated ARB. It is evident that voter apathy disproportionately affects smaller holders, while larger delegates have maintained—or even increased—their relative participation in terms of total Voting Power. This trend can be clearly seen in the chart below:

When analyzing the Voting Power (VP) concentration by range, we observe that during the first four months of 2025, the top tier consistently accounted for an average of 96.65% of the total VP, continuing the upward trend seen since the DAO’s inception.

This means that, on average, just 39 delegates held 96.65% of the VP (approximately 194.50M ARB) during the first four months of 2025. If we also include the 61 delegates in the 50k–500k VP range who participated during that period, the combined concentration rises to 99.52% across 100 delegates. In other words, these 61 delegates collectively held only 2.87% of the VP (~5.77M ARB), a share that continues to decline gradually:

Quorum

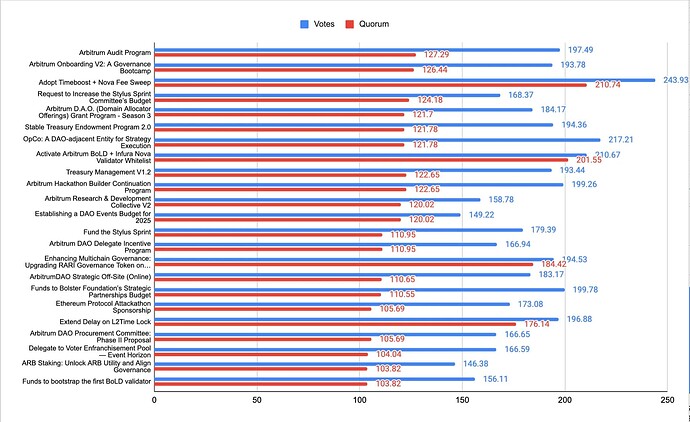

This chart shows all on-chain votes and their respective quorum requirements since the cutoff date of our last report (starting from September 2024):

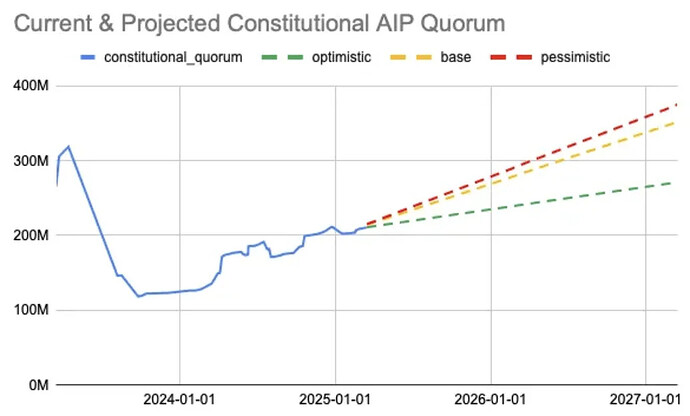

As with previous votes, most constitutional proposals have reached quorum by a very narrow margin. While the reduction of the quorum requirement from 5% to 4.5% may help proposals meet the threshold more comfortably in the short term, the long-term issue remains: the growing votable supply is not being converted into actively delegated and engaged Voting Power.

Now, if we combine this quorum analysis with the current distribution of VP among delegates, we arrive at an interesting conclusion:

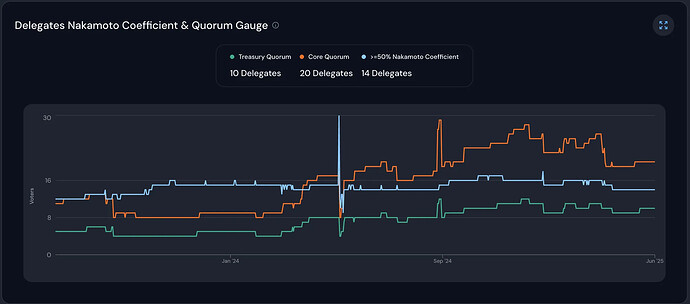

Source: Curia Dashboard

- The support or abstention of the Top 20 delegates is required to reach quorum on constitutional (Core) proposals.

- The support or abstention of the Top 10 delegates is required to reach quorum on non-constitutional (Treasury) proposals.

- A favorable vote from the Top 14 delegates is needed to reach the ≥50% approval threshold.

All of this includes delegates like LobbyFi, whose voting power could be “weaponized”—as their voting rights may be purchased—which has a significant impact on the analysis. It also includes several inactive or low-participation delegates within the Top 50. Therefore, it is critical to examine the historical Participation Rate (PR) of the Top 50 delegates and assess the potential implications of LobbyFi’s vote being strategically leveraged.

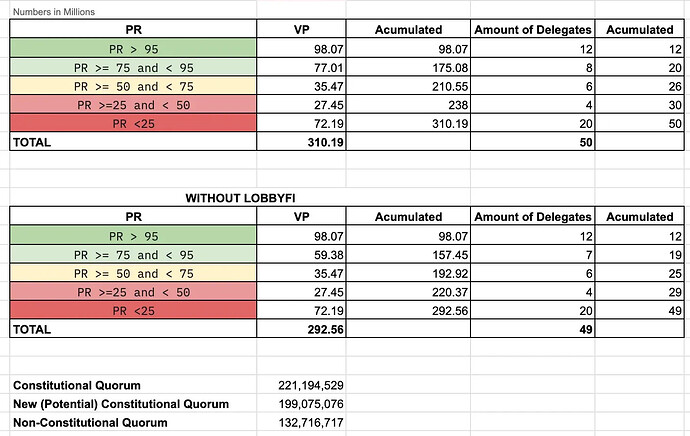

- PR ≥ 95% = 98.07m ARB (12 delegates)

- PR ≥ 75% and <95% = 77.01m ARB (8 delegates, including LobbyFi)

- PR ≥ 50% and < 75% = 35.47m ARB (6 delegates)

- PR ≥ 25% and < 50% = 27.45m ARB (4 delegates)

- PR < 25% = 72.19m ARB (20 delegates)

As for the constitutional quorum, with the potential reduction to 4.5% of the votable supply (199,075,076 instead of 221,194,529 as of June 9, 2025), ensuring participation from delegates with a historical Participation Rate (PR) between 50% and 100% should be enough—if we include LobbyFi. This means that under the proposed reduction, the DAO would need at least the 26 delegates from the Top 50 with a PR ≥ 50%.

Eventually, this will no longer be sufficient, and participation from lower-performing delegates will also be required—as is already the case today. Even assuming a FOR or ABSTAIN vote from LobbyFi, Arbitrum DAO still needs approximately 11M ARB in voting power from 24 delegates with PR < 50% to reach the constitutional quorum. This is not a minor detail: one of the reasons the DAO is currently struggling to meet the constitutional quorum is that it relies heavily on low-activity actors to do so.

Again, these figures include LobbyFi’s 17.63M ARB. While it’s generally expected that constitutional votes result in a FOR or ABSTAIN from this VP block, this cannot be taken for granted. A malicious actor could theoretically purchase a NO vote, preventing those tokens from counting toward quorum in a major protocol upgrade.

If we exclude LobbyFi, the DAO would require at least 27 out of the 29 delegates with a historical PR ≥ 25%, or alternatively, ≈6M additional ARB from delegates with PR < 50%. This number is likely to increase over time, according to constitutional quorum projections published by the ARDC, meaning the DAO’s reliance on low-activity delegates will grow.
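The quorum arithmetic in this section can be checked directly from the Participation Rate bands listed above. The sketch below is a rough reconstruction, not SEEDGov's own methodology: it assumes that all VP in a band votes FOR or ABSTAIN, takes the band totals, LobbyFi's 17.63M ARB, and the two quorum values from the text, and uses illustrative names (`PR_BANDS`, `shortfall`). All amounts are in millions of ARB.

```python
# (label, total VP in millions of ARB, number of delegates) for the Top 50.
PR_BANDS = [
    ("PR >= 95%", 98.07, 12),
    ("75% <= PR < 95%", 77.01, 8),  # includes LobbyFi
    ("50% <= PR < 75%", 35.47, 6),
    ("25% <= PR < 50%", 27.45, 4),
    ("PR < 25%", 72.19, 20),
]
LOBBYFI_VP = 17.63
QUORUM_5_PCT = 221.194529    # constitutional quorum as of June 9, 2025
QUORUM_4_5_PCT = 199.075076  # quorum under the proposed reduction


def shortfall(quorum: float, available_vp: float) -> float:
    """VP still missing to reach `quorum`; zero if quorum is already met."""
    return max(0.0, quorum - available_vp)


# Delegates with a historical PR of at least 50% (the first three bands).
active_vp = sum(vp for _, vp, _ in PR_BANDS[:3])
active_delegates = sum(n for _, _, n in PR_BANDS[:3])

print(round(active_vp, 2), active_delegates)                        # 210.55 26
print(round(shortfall(QUORUM_4_5_PCT, active_vp), 2))               # 0.0
print(round(shortfall(QUORUM_5_PCT, active_vp), 2))                 # 10.64
print(round(shortfall(QUORUM_4_5_PCT, active_vp - LOBBYFI_VP), 2))  # 6.16
```

Under these assumptions, the 26 delegates with PR ≥ 50% cover the reduced quorum only when LobbyFi is counted, the gap to the current 5% quorum is about 10.6M ARB (close to the approximately 11M cited above), and removing LobbyFi leaves a gap of about 6M ARB against the reduced quorum.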

These figures indicate that maintaining a strong incentive for voting activity will be critical to activating as much Voting Power as possible in light of the DAO’s growing quorum requirements. As such, the program must strike a balance between spending efficiency (i.e., ARB activated per dollar spent) and offering meaningful incentives to ensure that large voting power holders remain consistently active.

DIP Contributions to Quorum Achievement

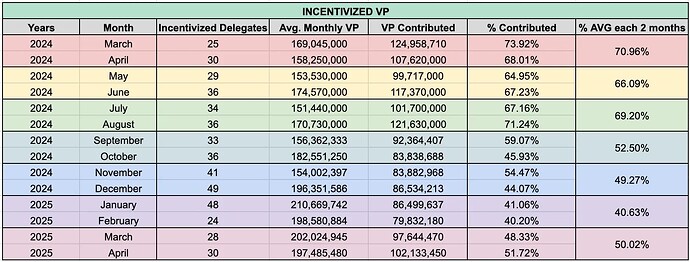

We analyzed the Voting Power incentivized by the DIP relative to the average monthly VP of on-chain votes, extending the analysis from the previous iteration and using two-month ranges so that the periods are comparable. Although the average monthly VP in on-chain voting has increased, the VP incentivized by the DIP has not kept pace with this growth.

This likely reflects that high-VP delegates (e.g., Entropy) have started participating in votes without being part of the program.

There is also a noticeable decline in the VP incentivized by the program between September 2024 and February 2025, due to two main factors:

- Some high-VP delegates previously in the program (e.g., Treasure DAO) have ceased participation in the DAO.

- Other delegates with considerable VP remain in the program but have experienced sustained losses in delegated Voting Power recently, as seen clearly with delegates like L2Beat or Olimpio.

However, during the most recent two-month period, much of this decline was recovered by onboarding delegations with considerable VP (GMX, Camelot), and we expect this trend to continue as we implement changes that reduce friction and requirements for high-VP delegates to participate in the program.

It is important to note that the Voting Power incentivized in May 2025 was 111,308,290 ARB.

Economic Analysis of Distributed Incentives

Having understood the amount of Voting Power the DIP has incentivized over this recent period, it is time to analyze how incentives have been allocated between voting activities and other contributions.

Over the last 6 months of the program, the DIP paid monthly incentives to a maximum of 49 delegates:

- November 2024: $225,802.60 (41 delegates)

- December 2024: $263,230.75 (49 delegates)

- January 2025: $202,366.36 (48 delegates)

- February 2025: $90,330.58 (24 delegates)

- March 2025: $111,221.89 (28 delegates)

- April 2025: $125,357.89 (30 delegates)

- 6-month average: $169,718.35

- Average first 3 months: $230,466.57

- Average last 3 months: $108,970.12

Note that changes made in February contributed to a significant reduction in both incentives paid and the number of delegates incentivized.

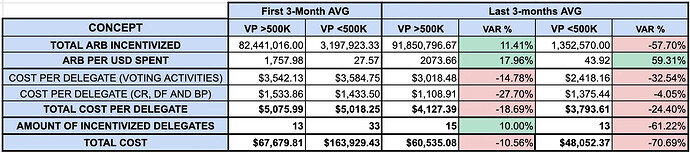

Now, we turn to understand to whom and for which activities these funds were allocated:

Initially, 70.10% of incentives were assigned to voting activities (including Participation Rate, Snapshot Votes, and Tally Votes), while the remaining 29.90% were allocated to other activities (Communication Rationales, Delegates’ Feedback, and Bonus Points).

This partially answers which activities the funds targeted. To better understand who received them, we disaggregated the analysis into two main groups:

- Range A: Delegates with VP > 500K ARB during the analyzed month

- Range B: Delegates with VP < 500K ARB during the analyzed month

This segregation allows for a more precise evaluation of key metrics concerning both voting activities and other contributions:

- TOTAL ARB INCENTIVIZED: How much Voting Power did the DAO maintain incentivized?

- ARB PER USD SPENT: How much Voting Power did the DAO obtain per dollar spent? This metric is crucial as it measures the efficiency of incentives aimed at ensuring delegates vote across proposals.

- COST PER DELEGATE FOR VOTING ACTIVITIES: Average incentive paid per delegate in the range for voting activities.

- COST PER DELEGATE FOR OTHER ACTIVITIES: Average incentive paid per delegate in the range for other activities (Communication Rationales, Delegates’ Feedback, Bonus Points).

- TOTAL COST PER DELEGATE: Average total incentive paid per delegate in the range.

- AMOUNT OF INCENTIVIZED DELEGATES: Number of delegates in the range who were incentivized.

- TOTAL COST: Total cost of incentivizing each delegate range.
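To make these definitions concrete, the sketch below computes the metrics for one delegate range in a given month. It is an illustration under stated assumptions rather than the program's actual calculation: the `DelegateMonth` record and function names are hypothetical, ARB PER USD SPENT is assumed to divide incentivized VP by voting-activity spend only, and a delegate with exactly 500K ARB (a case the text does not address) is placed in Range B.

```python
from dataclasses import dataclass


@dataclass
class DelegateMonth:
    voting_power: float      # delegated ARB during the month
    voting_incentive: float  # USD paid for PR, Snapshot votes and Tally votes
    other_incentive: float   # USD paid for CR, DF and BP


RANGE_A_THRESHOLD = 500_000  # ARB; Range A is VP above this value


def split_by_range(delegates: list[DelegateMonth]):
    """Return (range_a, range_b); exactly 500K goes to Range B (assumption)."""
    range_a = [d for d in delegates if d.voting_power > RANGE_A_THRESHOLD]
    range_b = [d for d in delegates if d.voting_power <= RANGE_A_THRESHOLD]
    return range_a, range_b


def range_metrics(delegates: list[DelegateMonth]) -> dict[str, float]:
    """Compute the listed metrics for the delegates of a single range."""
    n = len(delegates)
    if n == 0:
        raise ValueError("no incentivized delegates in this range")
    total_vp = sum(d.voting_power for d in delegates)
    voting_cost = sum(d.voting_incentive for d in delegates)
    other_cost = sum(d.other_incentive for d in delegates)
    total_cost = voting_cost + other_cost
    return {
        "total_arb_incentivized": total_vp,
        # Assumption: the denominator is voting-activity spend only.
        "arb_per_usd_spent": total_vp / voting_cost if voting_cost else float("nan"),
        "cost_per_delegate_voting": voting_cost / n,
        "cost_per_delegate_other": other_cost / n,
        "total_cost_per_delegate": total_cost / n,
        "incentivized_delegates": n,
        "total_cost": total_cost,
    }
```

With made-up inputs such as two Range A delegates holding 2M and 1M ARB and one Range B delegate holding 100K ARB, `split_by_range` followed by `range_metrics` on each group yields one row per range for side-by-side comparison.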

Upon conducting this analysis, we found the following:

This chart reveals drastic variations in most variables between the first and last three months of the program, largely due to the updates implemented since February 2025:

- The amount of incentivized Voting Power in Range A (>500K ARB) has improved, as well as the ARB Per USD Spent ratio for both ranges. Although fewer delegates in Range B (<500K ARB) are being incentivized, the efficiency per dollar spent has increased significantly. This reflects the implementation of the Voting Power Multiplier and a reduced number of incentivized delegates in Range B compared to the previous quarter.

- The number of incentivized delegates in Range B has drastically decreased, while the opposite trend is seen in Range A, reversing the dominance in total incentives.

- Incentives per delegate have dropped considerably across all ranges and activity categories.

- During the first quarter, the program allocated voting-activity incentives almost equally across delegates regardless of their VP. However, in the second quarter this reversed, with delegates in Range A receiving higher per-delegate incentives than those in Range B. This also reflects the effect of the Voting Power Multiplier.

- Total incentives during the first quarter for Range B (and consequently for voting activities) clearly exceeded those for Range A. After the program changes in the second quarter, more funds are generally allocated to delegates in Range A, except for incentives related to other activities (Communication Rationales, Delegates’ Feedback, and Bonus Points), where smaller delegates tend to contribute more regularly.

- The difference in the ARB Per USD Spent ratio between Range A and Range B is striking, indicating that incentivizing Range A is currently 47 times more efficient than incentivizing Range B. This is a key insight into the cost-effectiveness of incentivizing voting activities for smaller delegates. While early in the program this was a deliberate investment aimed at attracting talent and fostering pluralism and human resources within the DAO, naturally this “investment” should decrease over time as these smaller contributors are effectively onboarded into full-time DAO roles (as happened with Pedrob).

Delegates’ Feedback and Bonus Points Distribution

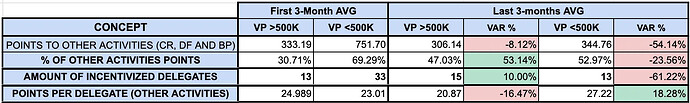

Initially, we analyzed the distribution of points according to the range to which the delegates belong:

Concepts:

- Points to Other Activities (CR, DF, and BP): Represents the quarterly average points earned by each range for activities other than voting (Communication Rationales, Delegates’ Feedback, and Bonus Points).

- Amount of Incentivized Delegates: Number of delegates within the range who were incentivized.

- Points Per Delegate (Other Activities): Represents the quarterly average points from other activities, earned on average per delegate within a specific range.

In this analysis, it is noticeable that although the dominance of Range B (VP < 500K ARB) has declined due to fewer incentivized delegates in the last quarter, these delegates generally maintain significant participation in the DAO’s other activities. This means that, while incentivizing their voting may be inefficient (as previously observed), they remain a valuable part of the community and tend to make meaningful contributions, especially regarding Bonus Points, where they have earned an average of 69.10% of points awarded over the last three months.

It is clear that the DIP currently incentivizes two distinct groups of activities and delegate ranges, each generating greater or lesser impact with varying levels of incentives:

- Voting activity incentives have proven to be much more efficient in Range A (delegates with VP > 500K ARB).

- Incentives for Other Activities (Communication Rationales, Delegates’ Feedback, and Bonus Points) for Range B delegates show that there is a group of small delegates who tend to be active contributors to the DAO.

The current framework does not allow us to maximize efficiency in distributing voting incentives without “leaving out” smaller delegates who, although they do not significantly impact quorum attainment, potentially provide considerable value through their contributions to DAO discussions.

Disputes and Subjectivity in the Delegates’ Feedback Parameter

During the first six months of the program, the subjective analysis of delegates’ comments throughout the month was put to the test. SEEDGov’s team has had to analyze all activity on the forum, across various discussions (proposals or otherwise), marking each comment as valid or invalid and assigning a score to each valid comment to obtain an average final score per delegate.

It is worth recalling that prior to DIP 1.5, only comments from delegates on proposals that went to a vote were included, with no quality analysis beyond a soft pre-filtering to exclude AI-generated or spam comments.

Clearly, this proposal was an experiment in which the DAO had to place a great deal of trust in SEEDGov as Program Manager. Likewise, SEEDGov assumed a challenging (but necessary, from our perspective) role in subjectively evaluating the contributions of each delegate/contributor—not only to make the program more resistant to farming but also to help delegates improve the quality of their contributions by providing individual feedback.

Over time, both the framework and our criteria have evolved. As some requirements became stricter with program updates, and as we as Program Managers sought to raise the bar for delegates’ contributions, we encountered frictions that were less present in the program’s early months. Some delegates disagreed multiple times with our applied criteria on subjective parameters, while others requested clarifications and later accepted them.

Regarding these frictions, we reviewed disputes from November 2024 to April 2025 (exclusively related to subjective parameters like Delegates’ Feedback or Bonus Points) and found the following:

- November: 0

- December: 5

- January: 7

- February: 7

- March: 7

- April: 9

These figures represent delegates disputing their subjective assessment in the program. On several occasions, we have spent multiple days responding to these disputes, a time- and energy-consuming task for both Program Managers and delegates. We believe this time could be better allocated to important DAO discussions.

Additionally, disputes over subjective parameters generate noise and conflicts within the community.

That said, several disputes have also provided valuable feedback that helped us improve and evolve our criteria, so we see some merit in this dialogue between delegates and the Program Managers.

Points to consider for improving this parameter include:

- Providing greater clarity and reducing subjectivity so that delegates have a clearer understanding of which contributions will be considered, thereby reducing the need to dispute these parameters.

- Ensuring that points awarded for Delegates’ Feedback faithfully reflect the contributors’ impact on DAO decision-making, a difficult factor to determine clearly in recent months.

- Reducing reliance on Program Manager discretion by involving more people/entities in the assessment process, thereby making evaluations more robust and consensual.

Sources of the Metrics:

SEEDGov’s Dune (Note that much of this is still a work in progress; changes might be made after this report is published.)

Entropy’s Dune

Curia Dashboard

DIP 1.5 - 1.6 MID Term Report Miscelaneous

Additional metrics and graphics in the report

2. SECURITY COUNCIL ELECTIONS - USING THE DIP AS A TOOL

Starting with the April 2025 elections, voting in the Security Council elections became a mandatory requirement for delegates seeking DIP incentives. This update highlighted the importance of constitutional processes and reinforced the responsibility of delegates in electing members of ArbitrumDAO’s most critical multisig.

Election Timeline & Incentive Eligibility

- The Member Election phase ran from April 12 to May 3 (21 days).

- Delegates were required to vote during this phase to qualify for April and May DIP rewards.

- Voting after day 7 triggered a penalty, reducing the number of points earned.

- Non-participation or failure to publicly justify an abstention led to disqualification from incentives for that month.

Penalty Structure for Late Voting

To encourage early engagement, a linear point decay system was implemented:

- Days 1–7: No penalty applied.

- Days 8–21: A daily penalty of 0.3572 points was deducted from the delegate’s Total Points (TP), up to a maximum of 5 points.

- Example: A vote cast on Day 14 resulted in a 2.5-point deduction.

This penalty was tracked separately from the Voting Participation Score through a dedicated module in the Karma dashboard.
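The decay rule described above can be expressed as a small function. This is a minimal sketch based only on the parameters stated in this section (a 7-day penalty-free window, 0.3572 points per late day, a 5-point cap, and a 21-day election); the function and constant names are illustrative and do not reflect how the Karma dashboard module is actually implemented.

```python
GRACE_DAYS = 7          # days 1-7 carry no penalty
ELECTION_DAYS = 21      # length of the Member Election phase
DAILY_PENALTY = 0.3572  # points deducted per day after the grace period
MAX_PENALTY = 5.0       # cap on the total deduction


def late_vote_penalty(vote_day: int) -> float:
    """Points deducted from Total Points for a vote cast on `vote_day` (1-21)."""
    if not 1 <= vote_day <= ELECTION_DAYS:
        raise ValueError("vote_day must be between 1 and 21")
    late_days = max(0, vote_day - GRACE_DAYS)
    return min(MAX_PENALTY, late_days * DAILY_PENALTY)


print(late_vote_penalty(7))             # 0.0
print(round(late_vote_penalty(14), 1))  # 2.5
print(late_vote_penalty(21))            # 5.0
```

A vote on Day 14 is 7 days late, which gives 7 × 0.3572 ≈ 2.5 points and matches the example above; on Day 21 the uncapped value would slightly exceed 5, so the cap applies.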

TL;DR – Results & Key Takeaways

(from SEEDGov’s X thread)

- This was the highest-participation Security Council election to date in total Voting Power cast during the first 7 days, when the penalty-free window applied.

- The alignment between the DIP framework and DAO-wide priorities proved highly effective, successfully incentivizing early and meaningful delegate participation.

- Voter participation increased from 55.74% in Election #3 (source: Dune Analytics) to 63.47%, representing over 50 million additional ARB compared to the previous election.

- Only 9 delegates were disqualified from DIP rewards in April due to non-voting or lack of public abstention.

- The average penalty was just 0.86 points, indicating most delegates voted within the expected timeframe.

4. DELEGATE FEEDBACK REPORTS: DOCUMENTING SUBJECTIVE EVALUATION

As part of our role as Program Managers, SEEDGov has published a series of Delegate Feedback Reports (202 Individual Reports between February and April, 271 if we add May) over the past four months. These reports were designed to:

- Bring transparency and consistency to the evaluation of one of the program’s most nuanced and debated parameters: Delegates’ Feedback (DF).

- Provide feedback to delegates to help them improve their contributions or identify specific verticals or topics where they can have a greater impact.

Each monthly report presented an aggregated view of the rationale behind individual scores, described the assessment criteria applied, and explained any relevant methodological changes that had occurred. The intent was twofold: first, to foster continuous improvement by sharing learnings and edge cases; and second, to build trust with the community by clearly articulating the principles guiding the subjective evaluation process.

While the DF Reports were not intended to serve as definitive audits, they played a valuable role in mentoring delegates, documenting precedents, clarifying expectations, and offering visibility into how subjectivity was managed. Over time, they became a space to iterate publicly on our standards—acknowledging gray areas, detailing score justifications, and reflecting on delegate feedback when it contributed to refining our approach.

We would also like to highlight that, based on the reports—particularly those with a high level of granularity—we have observed improvements in certain delegates. Some have even managed to qualify for incentives after several months of unsuccessful attempts. This demonstrates that, while it is a complex and resource-intensive task, focusing efforts on cases where potential is identified can yield very positive results.

Importantly, the reports also highlighted recurring frictions, including:

- The blurred line between meaningful contributions and minimal engagement.

- Tensions between rewarding effort versus rewarding impact.

- Challenges in standardizing criteria across different forum dynamics and proposal types.

These insights, when read alongside the analysis in Section 5 (Disputes and the Subjectivity of DF), illustrate the complexity of operationalizing qualitative evaluations within an incentive framework. While the DF Reports did not resolve all open questions, they helped move the program—and its community—toward a more reflexive and accountable standard of assessment.

Looking ahead, we believe these learnings should inform any evolution of the Delegates’ Feedback parameter, particularly in discussions surrounding DIP v1.7 and v2.0. Whether through stricter definitions, clearer thresholds, or the inclusion of additional evaluators, the program must continue to evolve in ways that maintain fairness while reducing operational burden and ambiguity.

5. PROGRAM UPDATES

All changes and refinements to the DIP were posted in the DIP v1.60 Updates Thread. Below is a breakdown of each version and the rationale behind the updates:

● V1.51 – December 30, 2024

This update focused on improving scoring granularity and strengthening incentives through two core changes:

Revised Tier System

- Tier 1: TP ≥ 90% (previously 85%), Compensation: $5,950–$7,000, ARB cap: 16,500.

- Tier 2: TP ≥ 75% and < 90% (previously 70%–85%), Compensation: $4,200–$5,100, ARB cap: 12,000.

- Tier 3: TP ≥ 65% and < 75% (previously 65%–70%), Compensation: $3,000–$3,250, ARB cap: 8,000.

Rationale: Tier 1 was narrowed to better reflect top performance, while the ranges were normalized to make tiers more balanced (Tier 1 and 3 both span 10%; Tier 2 spans 15%).
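The revised thresholds can be read as a simple lookup. The following is a minimal sketch, assuming TP is expressed as a percentage from 0 to 100; the names `TIERS` and `tier_for` are hypothetical and not part of the program's tooling, and the sketch does not model how compensation is set within each tier's USD range.

```python
from typing import Optional, Tuple

# (tier, minimum TP %, ARB cap) as listed in the v1.51 update, highest tier first.
TIERS = [
    (1, 90.0, 16_500),
    (2, 75.0, 12_000),
    (3, 65.0, 8_000),
]


def tier_for(tp: float) -> Optional[Tuple[int, int]]:
    """Return (tier, ARB cap) for a TP score, or None if TP is below 65%."""
    for tier, min_tp, arb_cap in TIERS:
        if tp >= min_tp:
            return tier, arb_cap
    return None  # below the lowest tier: no tier assigned


if __name__ == "__main__":
    for tp in (92.5, 90.0, 75.0, 64.9):
        print(tp, tier_for(tp))
```

Checking tiers from highest to lowest means each score lands in the first band whose lower bound it meets, which matches the half-open ranges above (for example, exactly 90% falls in Tier 1, not Tier 2).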

Bonus Points for Call Attendance

- 1.25% bonus points for each Governance Report Call (GRC) attended.

- 1.25% bonus points for each bi-weekly call attended.

Rationale: The monthly Bonus Points cap was set at 5% to maintain balance between rewarding participation and avoiding inflation. With the switch to two GRCs per month, these adjustments aligned incentives with the program’s communication goals.
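As a rough illustration of the arithmetic, the sketch below assumes the 5% monthly cap applies to the combined bonus from both call types; other sources of Bonus Points are not modeled. The function name `call_attendance_bonus` is hypothetical.

```python
BONUS_PER_CALL = 1.25  # % awarded per GRC or bi-weekly call attended
MONTHLY_CAP = 5.0      # % cap, assumed here to cover both call types combined


def call_attendance_bonus(grc_attended: int, biweekly_attended: int) -> float:
    """Return the call-attendance bonus (in %) for one month, capped at 5%."""
    raw = BONUS_PER_CALL * (grc_attended + biweekly_attended)
    return min(raw, MONTHLY_CAP)


if __name__ == "__main__":
    # Two GRCs plus two bi-weekly calls reach the cap exactly.
    print(call_attendance_bonus(2, 2))
    # Additional calls beyond that do not add further bonus.
    print(call_attendance_bonus(3, 2))
```

Under this reading, a delegate who attends both GRCs and two bi-weekly calls in a month reaches the 5% cap exactly.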

These changes took effect on January 1, 2025.

● V1.51a – January 20, 2025

This update addressed issues in the feedback scoring system for governance proposal participation:

Delegates’ Feedback Adjustment

- Proposal threads with fewer than 5 comments during the evaluated month are excluded from the denominator used for the ‘Delegates Feedback’ multiplier.

- Delegate contributions to these low-activity threads are still counted individually, but such threads do not negatively impact the participation rate of others.

Rationale: Some proposals received minimal engagement (fewer than five comments), yet were still being counted in the total number of proposals for scoring purposes. This unfairly reduced the participation score for many delegates who had not engaged with these low-activity threads.

The adjustment ensures delegate feedback is recognized where it exists, while preventing disproportionate penalization for lack of engagement in under-discussed proposals.

This change was applied retroactively to January 1, 2025.
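The denominator rule can be illustrated with a small sketch. It is not the Program Manager's actual scoring code: the function name, the input shapes, the choice to count low-activity threads in the numerator, and the cap at 1.0 are all assumptions made for illustration.

```python
from typing import Dict, Set

MIN_COMMENTS = 5  # threads below this count are excluded from the denominator


def df_participation_rate(thread_comments: Dict[str, int],
                          delegate_threads: Set[str]) -> float:
    """Share of proposal threads a delegate commented on in a given month.

    thread_comments maps thread id -> total comments in the evaluated month.
    delegate_threads is the set of thread ids the delegate commented on.
    """
    # Denominator: only threads with at least MIN_COMMENTS comments.
    eligible = [t for t, n in thread_comments.items() if n >= MIN_COMMENTS]
    if not eligible:
        return 0.0  # assumption: nothing to measure against this month
    # Numerator: every thread the delegate commented on, including
    # low-activity ones (assumption), so those comments still count.
    commented = len(delegate_threads & set(thread_comments))
    return min(1.0, commented / len(eligible))


if __name__ == "__main__":
    month = {"A": 12, "B": 7, "C": 3}  # thread C has fewer than 5 comments
    print(df_participation_rate(month, {"A"}))       # C no longer dilutes the rate
    print(df_participation_rate(month, {"A", "C"}))  # comment on C still counts
```

In the example month, a delegate who commented only on thread A is measured against two threads rather than three, which is the effect the adjustment was meant to have.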

● V1.6 – February 12, 2025

This update addressed issues in how the Communication Rationale and Delegates’ Feedback parameters scored participation in governance discussions:

Communication Rationale Merged into Delegates’ Feedback

The Communication Rationale (CR) parameter was removed and its points merged into the Delegates’ Feedback (DF) category, increasing the DF cap to 40 points.

This change was based on the observation that most CRs added limited value and were often redundant or borderline DF. Since DIP-1.5 already enabled subjective DF evaluation, consolidating both under one rubric helped reduce redundancy and focus rewards on valuable contributions—regardless of whether they were posted as a rationale or as general feedback.

Voting Score Multiplier Based on Voting Power

A multiplier was introduced to adjust the Voting Score based on each delegate’s Voting Power (VP):

- Minimum multiplier (0.8) for delegates at the 50,000 VP minimum

- Maximum multiplier (1.0) for delegates with ≥4M VP

- Linear scaling between these two points

- Formula: Multiplier = 0.00000005063 × VP + 0.7974685

Only the Voting Score is affected; DF and Bonus Points remain unchanged. This adjustment recognized that delegates with higher VP carry greater voting responsibility, while still allowing smaller delegates to earn compensation through active forum participation.

Monthly averages were used to account for VP changes.
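The multiplier can be sketched in a few lines using the slope and intercept published above. Clamping the result to the 0.8–1.0 range is an assumption drawn from the stated minimum and maximum, and the function names are hypothetical.

```python
SLOPE = 0.00000005063   # published coefficient on VP
INTERCEPT = 0.7974685   # published constant term
MIN_MULT, MAX_MULT = 0.8, 1.0


def voting_multiplier(avg_vp: float) -> float:
    """Multiplier for a delegate's monthly average Voting Power.

    Clamped to [0.8, 1.0]: an assumption based on the stated bounds,
    so delegates above 4M VP never exceed a multiplier of 1.0.
    """
    raw = SLOPE * avg_vp + INTERCEPT
    return max(MIN_MULT, min(MAX_MULT, raw))


def adjusted_voting_score(voting_score: float, avg_vp: float) -> float:
    """Apply the multiplier to the Voting Score only (DF and Bonus Points are untouched)."""
    return voting_score * voting_multiplier(avg_vp)


if __name__ == "__main__":
    for vp in (50_000, 500_000, 2_025_000, 4_000_000, 10_000_000):
        print(f"{vp:>12,} VP -> multiplier {voting_multiplier(vp):.4f}")
```

As a sanity check, the line passes through roughly 0.8 at 50,000 VP and roughly 1.0 at 4M VP, with the midpoint of the range (about 2.03M VP) landing near 0.9.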

Minor Adjustment to DF Scoring Rubric

The individual comment scale within the DF rubric was updated from 0–4 to 0–10, offering greater flexibility to reflect the varying quality of delegate input.

6. MISCELLANEOUS

● 6.1 Delegate Manual (“The Bible”)

The Delegate Manual outlines the rules, expectations, and procedures that govern the DIP. It’s the core reference point for understanding how the program operates.

The manual ensures that all participants are on the same page and can refer back to the rules as they evolve.

● 6.2 Accountability

Transparency was reinforced via the Accountability Thread, which included:

- A public rewards tracking spreadsheet,

- Detailed logs of scoring, payments, and other records,

- Historical notes on the transition from v1.0 to v1.5 and to v1.6,

- A reference to the payment distribution thread.

7. CONCLUSIONS

Strengths

- The program remains essential to the DAO’s objective of reaching quorum. As shown in the metrics, participants’ contributions to quorum remain significant and have been increasing in recent months, gradually recovering the share of total quorum that the program held last year.

- The program has effectively served as a method to attract and retain talent, with several participating delegates being elected to hold positions within the DAO or hired by Arbitrum-Aligned Entities (AAEs). This is vital since the DAO currently lacks any other framework to support small contributors.

- The program has successfully promoted diversity of voices, which is crucial for the DAO’s decision-making process. Although the low threshold of 50K ARB introduced some inefficiencies, it also enabled the inclusion of new contributors who otherwise would not have been able to join the community.

- Alongside this, the program is beginning to shape itself as an onboarding and mentorship tool for new contributors. We have held meetings with several applicants to guide them on how they can best collaborate with the DAO. In this regard, we have encouraged applicants to focus their efforts on their areas of expertise, so that human resources are used as efficiently as possible, and ultimately contributors make meaningful contributions supported by relevant backgrounds. These meetings complement the individual reporting for each delegate, and on several occasions we have highlighted the verticals where a contributor particularly excels.

- The program has proven useful as a tool for specific situations, such as the recent Security Council elections, where proactive management in collaboration with the Arbitrum Foundation significantly improved election metrics compared to past performance.

- SEEDGov as Program Manager has incorporated feedback from various stakeholders, introducing changes in the scoring system that have made the program more efficient and resistant to farming, without requiring lengthy governance processes. This discretion has worked well, as the changes achieved their goals with minimal friction. We believe this aligns strongly with the DAO’s current context, which prioritizes efficiency and opts to centralize certain processes that the DAO has struggled to resolve easily without lengthy debates over minor details.

Areas for Improvement – Lessons Learned

In our conclusions, we want to outline a series of lessons and areas for improvement that we intend to address with changes in version 1.7. While not all issues will be resolved in this update (some structural matters, such as having a single framework for two different types of contributors, will be addressed in version 2.0), we believe the proposed changes will tackle a number of the improvements identified in this report:

- Friction for high VP delegates: Some delegates choose not to enroll in the program because qualifying requires activity beyond voting, while others are ineligible in a given month despite voting on everything because they made no additional forum contributions.

- Lower DAO activity: Both Tally and Snapshot voting have shown a downward trend compared to previous years, which is likely to continue under the new organizational structure of the DAO. The program’s compensation scheme will need adjustment to maximize efficiency without discouraging participation.

- Subjectivity in the Delegates’ Feedback parameter: This has been a sensitive issue month to month, causing friction between the Program Manager and delegates and generating noise on several occasions. We believe measures should be taken to reduce reliance on the PM’s discretion, provide greater certainty about which contributions will be rewarded, and clarify the real impact these contributions have on the DAO’s day-to-day operations. We also aim for this to resolve the ongoing disputes over subjective parameters observed in recent months.

- Inefficiency in incentivizing small delegates’ voting: As mentioned earlier, incentivizing votes from delegates in the 50,000–500,000 ARB range has proven extremely inefficient compared to incentivizing those with ≥500,000 ARB. While this won’t be fully resolved in this iteration, we can at least mitigate its impact until a better solution is implemented in the next program.

- Intra-tier fairness: Although not covered in the report, we have noticed (and received feedback) that when the ARB cap comes into effect due to adverse token price action, delegates within the same Tier receive equal compensation regardless of their scores. This partially undermines the program’s meritocratic nature, so we will propose changes to fix this issue.

- Vote Buying Platforms: It has become clear that the DAO needs to align these platforms with its own goals. Voting Power from actors like LobbyFi is significant enough that ignoring the issue is not an option. Although the DAO has attempted to address this topic before, no concrete proposal has been made. We believe there are ways to align these platforms through the DIP so we can at least ensure their Voting Power supports the DAO’s constitutional quorum objectives.

- One program for two distinct types of delegates and contributors: In the future, we seek to move away from a single framework that serves two distinct types of contributors or, more precisely, two different needs:

  - On one hand, the DAO has delegates with high VP whom we want to keep actively voting to meet quorum goals. Many of these delegates are not interested in, or don’t have time for, deep engagement in day-to-day governance, so conditioning their incentives on other activities creates a barrier to participation. This friction has led some delegates to avoid enrolling, or to struggle to reach the 65 Total Participation Points threshold if they haven’t contributed in the forum or other areas beyond voting.

  - On the other hand, the DAO has small delegates for whom incentivizing voting is inefficient, but who have shown they can make a significant impact via off-chain contributions. Under a separate framework, these delegates would not need to hold a minimum amount of Voting Power to have their off-chain contributions rewarded. This also applies to contributors with zero VP, who currently cannot participate in the program and thus have their contributions overlooked.

  The “small delegates” or “Contributors” recognized under such a framework should eventually build the reputation needed to earn a full-time position in the DAO. This has already happened with several small DIP delegates over time, albeit at the cost of paying voting incentives to low-VP delegates who were required to reach a certain Voting Power threshold in order to participate.

  We believe the DIP should be restructured in the future to modularly incentivize different types of contributions without dependency on each other, maximizing budget allocation efficiency.

Next Steps

We have been working in parallel with this report on an RFC containing proposed changes to kick off version 1.7 of the program.

We expect to publish it within the next few days.

We warmly invite the entire community to provide their feedback on this report and the future of the DIP.

Best regards!