Table of Contents

- Key Learnings & Takeaways

- Our Goals After Three Milestones

- How to Review Milestone 1

- Deliverables from AIP-3

- Grant Program Selections

We have two ongoing projects, expected to be delivered in January, that will make it simple for anyone to look up and review individual grants across all the programs. For this reason, and because this document is already 30 pages long, we did not include the details of individual grants here.

Key Learnings & Takeaways

DAO Needs

- The Thank ARB strategic priorities identified during #GovMonth did not receive support from top delegates because we did not include delegates in the process in a way that was seen as legitimate.

- Without legitimate priorities and a process for setting 3-6 month strategies, it is hard for the DAO to enable more experimentation.

- Potential contributors need a pathway to receive a small grant for narrowly scoped work. That work should then be assessed, so the contributor can see next steps and know there is opportunity, and so the DAO can double down on high performers.

- DAO members need communications to be simplified and aggregated. They don’t know where to go to maintain context, what they should do, or how else they can be good Arbitrum citizens.

- The DAO needs workstreams ASAP because we lack people who organize work and contextualize situations.

- The decentralized nature of Arbitrum means that things move slowly. We are in a position to help move things faster.

Communications

- We need to segment by persona (delegate/builder/grantee/etc.) and match our channels to each user. Many people do not know what we have done.

- We need to double down on USER-CENTERED DESIGN on the Thank ARB platform.

- Having 13 grant programs creates too much complexity. It would be better to have a few focused workstreams.

- The community is willing and capable. They just don’t know what to do.

- Common delegate feedback: “I like the energy Plurality Labs brings. You have been a spark that brought us from 0 to 1. However, I don’t exactly know what you’ve done.”

Experimentation

- The strategic priorities from GovMonth did not achieve legitimacy. Had we involved delegates earlier in the process, they would have understood what we did and likely would have seen the results as legitimate even without ratifying them.

- Quadratic funding is likely not a good meta-allocation mechanism. We are going to try again with quadratic voting and another custom algorithm.

- Successful tools usually solve more than one problem. Hats Protocol provides many solutions, from workstream accountability to dynamic councils. Hedgey streams are easy to use and can work for grants as well as salaries. When we find solutions, we should double down and look for other ways to use them.

- The DAO needs more indexed data available for the community to interpret. Overall, the DAO has no agreed-upon metrics that define what success looks like.
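The plan to retry meta-allocation with quadratic voting rests on one rule: casting n votes for a single option costs n² voice credits, so each additional vote on the same option is more expensive than the last. The minimal sketch below assumes every voter receives the same credit budget and that the options are grant programs or domains. The function names, the budget, and the option labels are hypothetical, and the sketch does not describe the custom algorithm mentioned above.

```python
def qv_cost(votes: dict[str, int]) -> int:
    """Credits a ballot spends: n votes on one option cost n**2 (sign ignored)."""
    return sum(v * v for v in votes.values())


def tally(ballots: list[dict[str, int]], budget: int) -> dict[str, int]:
    """Sum votes per option, rejecting any ballot that overspends its credit budget.

    Assumption (hypothetical): every voter gets the same `budget` of credits.
    """
    totals: dict[str, int] = {}
    for ballot in ballots:
        spent = qv_cost(ballot)
        if spent > budget:
            raise ValueError(f"ballot spends {spent} credits, budget is {budget}")
        for option, v in ballot.items():
            totals[option] = totals.get(option, 0) + v
    return totals


# Hypothetical example: two voters, 100 credits each.
ballots = [
    {"program_a": 10},  # 10**2 = 100 credits on one program
    {"program_a": 5, "program_b": 5, "program_c": 5, "program_d": 5},  # 4 * 25 = 100
]
print(tally(ballots, budget=100))
```

With a 100-credit budget, one voter can put 10 votes on a single program or spread 5 votes across four programs. Spreading buys twice as many total votes. This is how quadratic voting lets voters express how strongly they care while making it costly to concentrate all their weight on one outcome.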

Funding Success

- We learned a great deal working with the foundation (compliance, process, etc.). Some funding didn’t go out until months after grant approval. We are now down to 2-3 weeks from grant approval to funds being sent, but we need better systems.

- We need to balance the desire for clear criteria with common-sense decision making (e.g., removing NFT Earth from grant eligibility). As we make decisions, we are observing and documenting criteria so we can open up the process in the future, but confirming community-led review capabilities is a priority before removing decision-making power. This applies at the framework level, as multiple programs are attempting to draft and use criteria-based approaches.

- Firestarters served a clear purpose and, as far as we can tell, was our most successful program. It drove tangible results for the DAO in a short time. Now we need next steps.

- Data-driven funding is critical in Milestone 2 (STIP support, OSO, etc.).

Our Goals After Three Milestones

Unlike most grant programs, Plurality Labs is not only responsible for allocating grant funds. AIP-3 also directs us to spearhead the design of a pluralist & capture-resistant grants governance framework. This means that over the course of three milestones we are committed to, and accountable for, the work to design the framework, the ultimate deliverables of the three-milestone effort, and the metrics that signal our success.

Let’s break that down for a quick reminder. Our team sees this daily:

These metrics will be baselined in January and revisited twice a year from then on. We are sharing them here as a reminder of where we are going and to bring intention to how you review our progress toward the overall goals after three milestones versus the work done in Milestone 1.

How to Review Milestone 1

Milestone 1 can be evaluated using the following 3 dimensions:

Did they do what they said they would do in Milestone 1?

Our initial proposal may have understated the scope of our Milestone 1 efforts. AIP-3 did not adequately detail the substantial work required for the initial setup, including coordinating with the foundation on compliance and fostering effective collaboration.

Nevertheless, a comprehensive overview of our commitments and deliverables for Milestone 1 can be found in the section labeled “Deliverables from AIP-3”.

Did grant funding go to worthwhile programs & projects?

Plurality Labs predominantly opted for external program managers to oversee grant programs aimed at achieving diverse objectives. While some programs were internally designed by Plurality Labs and then assigned a program manager, others emerged through an open application process. Notably, GovBoost and Firestarters were overseen by Disruption Joe as the program manager, aligning with their tailored focus on addressing specific needs of the DAO.

For a comprehensive overview of all program selections, please refer to the section titled “Grant Program Selections”.

Higher up indicates alignment to funding DAO needs. To the right indicates a focus on experimentation. Circle size indicates the amount of funding given to the program.

Did they prioritize experimentation & learning for the future?

Within each grant program, varying levels of experimentation exist. Those depicted on the right side of the bubble chart above are particularly high in experimentation. In these instances, the allocation of grant funding is not only a financial endeavor but also a means of acquiring insights into new and effective mechanisms and processes. These insights contribute to a comprehensive and holistic approach to capture-resistant governance frameworks.

If successful, our grants program has the potential to endure beyond Plurality Labs’ active involvement. Furthermore, the knowledge gained in developing capture-resistant governance within the grants framework can be extended to governance related to on-chain upgradeability, enhancing the security and value of Arbitrum. Additionally, it lays the groundwork for a future characterized by genuinely neutral digital public infrastructure.

Detailed insights into these learnings are provided under each grant program in the section titled “Grant Program Selections” below.

Deliverables from AIP-3

13 of 20 (65%) have been completed

Our biggest deliverable has been allocating 3 million ARB in grants while building the processes to do so. Our strategic framework was built in a DAO-native way and has been widely seen as an innovation in how DAOs can function. We had plenty of takeaways and learnings, which are detailed below.

We took a lot of shots, and while not all of them landed, we feel most were quality attempts to make progress. Even though not every experiment provides the insights we hope for, we feel that an objective review of what was funded will show that the Plurality Labs grants framework allocated funding as well as any other grants program out there, while running the experiments in tandem.

5 of 20 (25%) will be completed by the end of the milestone, which ends on 1/31

- Establish and confirm key success metrics for the Grants Program

- Establish clear communications cadences & channels for all key stakeholders to engage with the program

- Design approach, process and channels for sourcing high impact grants ideas

- Design Grant Program manager application process and assessment criteria

- Onboard and coach Pluralist Program Managers in grant program best practices

2 of 20 (10%) will not be completed

Fulfilling these deliverables hinges on reviewing the success of individual grants. Due to the timeline of distribution, it was not possible to complete the review of grant success before the grant program review during this milestone. The following items were replaced during this milestone and are on the roadmap for Milestone 2.

- Collate community feedback and input on grants programs efficacy and success

- Evaluate, review and iterate based on this feedback to continually improve the overall impact of the Arbitrum DAO grants program

Because of this timing, we decided not to evaluate the grant programs’ success and instead to use January to review every grant that was funded, beginning a continuous process of community-led evaluation. In hindsight, this was probably needed before evaluating at the program level anyway.

Grant Program Selections

Each section below is a grant program. Programs are listed in chronological order by start date. Within each program you can review the following:

- Program Description

- Why the Program was selected

- Alignment to Thank ARB Strategic Priorities

- Program manager

- Amount funded

- Types of projects funded

- Expected outcomes

- Experiments conducted

- Learnings (if program has concluded)

Questbook Support Rounds on Gitcoin

Program Manager: Zer8

Allocation Amounts: 100K ARB

Status: Completed & Paid

Description

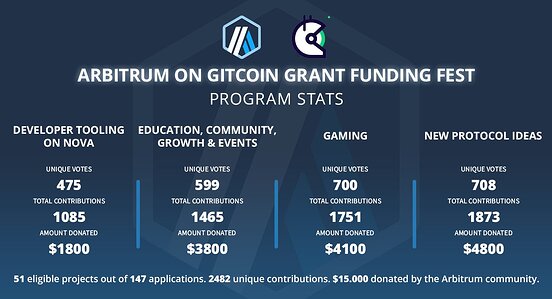

This program started with two unique governance experiments. First, a “domain allocation” governance experiment allowed the community to direct funds to four matching pools based on Questbook program domains. Then, four quadratic funding rounds helped Questbook’s domain allocators source grants.
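The quadratic funding rounds described above are built on one rule. A project's ideal total funding is the square of the sum of the square roots of its contributions, and the match is that amount minus what donors actually gave. As a result, many small donors attract more matching than one large donor giving the same total. The sketch below uses this textbook rule and scales every match down proportionally when the pool cannot cover them all. It is an illustration under those assumptions, not necessarily the exact formula used in these rounds. Production rounds typically add caps and Sybil defenses, and none of that is modeled here. The function name `qf_matches` and the example numbers are hypothetical.

```python
from math import sqrt


def qf_matches(contributions: dict[str, list[float]], pool: float) -> dict[str, float]:
    """Split a matching pool across projects using the textbook quadratic funding rule.

    Ideal match for a project = (sum of sqrt(c_i))**2 - sum(c_i).
    If the ideal matches exceed the pool, all are scaled down by the same factor.
    """
    ideal: dict[str, float] = {}
    for project, cs in contributions.items():
        root_sum = sum(sqrt(c) for c in cs)
        ideal[project] = root_sum ** 2 - sum(cs)

    total = sum(ideal.values())
    # Never pay out more than the pool; never scale matches *up* beyond their ideal.
    scale = min(1.0, pool / total) if total > 0 else 0.0
    return {project: match * scale for project, match in ideal.items()}


# Hypothetical example: same total donated (4 units), very different breadth of support.
print(qf_matches({"A": [1, 1, 1, 1], "B": [4]}, pool=6))
```

In this example, project A's four 1-unit donors earn an ideal match of 12. Project B's single 4-unit donation earns 0. Because the pool holds only 6, A's match is halved to 6 and B still receives nothing. The rule rewards breadth of support rather than size of donation.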

Why PL Funded This Program

We wanted to start quickly getting ARB into the hands of builders. Questbook had been asked “What would be the top reason your program might fail?” and they answered that sourcing enough quality grants is their top potential problem.

At the same time, our proposal had introduced the concept of horizontally scaling a pluralist grants program. This program was a great opportunity to show how multiple grants programs could collaborate to provide better outcomes. Quadratic Funding is great for sourcing new grants. Delegated Domain Authority, the system used by Questbook, is better for quickly doubling down on quality performance.

This program was funded to support and bring awareness to Questbook’s grant programs and as a governance experiment in funding distribution.

Alignment to Thank ARB Strategic Priorities

![Chart](upload://7UravR3RZXsGS3oattrRl4frlwz.png)

The types of projects include…

- Any project eligible on Questbook.app

The outcomes we hope to achieve are…

- Support initiatives on Arbitrum.

- Find high-quality grants that might get additional funding from Questbook if the grant is successful.

- Minimize the risk of choosing grants that might fail

The program will support experiments to see if…

- Decentralized meta-allocation improves how the community decides to allocate funds between matching pools using quadratic funding.

- Allow-list-restricted voting in quadratic funding is useful.

- Collaborative efforts between different programs have positive effects.

Thank ARB (Sense and Respond)

Program Manager: Payton / Martin

Allocation Amounts: 350K ARB

Status: ⅓ modules completed - Ends early February

Description

This program introduces a novel way for the community to connect with the DAO. It provides a foundation for the community to continually sense and respond to the fast changing crypto environment. It provides a place for DAO members to learn what is happening, voice their opinions, and learn about opportunities to offer their skills.

Why PL Funded This Program

This program was selected to ensure an engaged and informed community is ready to mobilize to solve Arbitrum’s biggest challenges. The most powerful advantage a DAO has is its community.

To this day, DAOs have not solved communications in a decentralized way. Thank ARB creates a conversation by filtering signal from community input to identify expertise and effort from community members and offers them opportunities to engage as Arbitrum citizens.

Thank ARB is also used to reach either statistical significance in surveys or minimum viable decentralization in curation. This allows Arbitrum to avoid relying on delegated authority for critical functions by using swarm mechanics to resolve conflicts in curation.

DAOs are complex by nature, thus they require complexity aware solutions based on a continuous practice of sensing and responding in order to grow.

Alignment to Thank ARB Strategic Priorities

The types of projects include…

- Rewarding community members actively participating and adding value.

- Inviting stakeholders, delegates and Arbinauts to join activities that help make sense of our priorities and contribute to governance processes, like grant evaluation.

The outcomes we hope to achieve are…

- Improve how grant funding is distributed.

- Increase delegate and community confidence that funds are allocated as intended based on a collaborative process.

- Assure the community that we can manage the grant process inclusively and efficiently.

The program will support experiments to see if…

- Building non-transferable reputation is feasible and viable.

- There are new ways to distribute power

- We can establish a baseline for contributor engagement data across different platforms and activities.

- It is effective to create awareness of DAO activities and priorities through experience vs. marketing.

Firestarters


Program Manager: Disruption Joe

Allocation Amounts: 350K ARB

Status: Open

Description

The Firestarter program is designed to address specific and immediate needs within the DAO. For problems identified by delegates and Plurality Labs, a grant funds the initial cat-herding and research required to kickstart action.

Why PL Funded This Program

We started this program due to an acute need which arose after Camelot’s liquidity incentive proposal was voted down. After conducting the first workshop to understand if there was potential for a short term triage solution, we realized that there was no current mechanism to fund the necessary research and facilitation to find a non-polarizing solution.

Alignment to Thank ARB Strategic Priorities

The types of projects include…

- STIP Facilitation

- Treasury & Sustainability Working Group

- Security Audit Provider RFP Working Group

The outcomes we hope to achieve are…

- Quickly and effectively address urgent needs resulting in high-quality resolutions.

- Demonstrate the ability to create fast and fair outcomes that benefit the ecosystem.

- Frameworks for service providers to build a scalable foundation for Arbitrum growth.

- High-quality resolution of key, immediate needs; fairer outcomes for the ecosystem; and fairer frameworks for service providers, delivered with both speed and fairness.

The program will support experiments to see if…

- Self management and autonomy advance DAO outcomes

- There are new ways to implement checks, balances, and feedback loops between different parts of the system, like Plurality Labs, the grant security multi-sig, and the DAO.

- Using on-chain payments such as Hedgey can improve operational efficiency. Due to compliance timing, some Firestarters were funded as direct grants instead.

MEV (Miner Extractable Value) Research

Program Manager: Puja

Allocation Amounts: 330K ARB

Status: Ends late March or April

Description

This research program focuses on Miner Extractable Value (MEV), a topic on which fewer than 100 people are truly qualified to hold high-expertise conversations.

Why PL Funded This Program

This program was selected to further the work of Puja Ohlhaver in creating better mechanisms to propagate quality conversations at the information frontier. This work convenes top MEV researchers to benefit Arbitrum as well as the broader Ethereum ecosystem. It is the next step after her prototype design, built and tested at Zuzalu.

To improve our resource allocation, we need to bridge the information asymmetries between the scientist/academics and those allocating funds.

Adverse selection and moral hazard are fundamental problems that tokenizing and decentralizing cannot solve on their own. A goal of this program is to elevate the quality of conversations about high-expertise topics and promote breakthroughs using mechanism design.

Alignment to Thank ARB Strategic Priorities

The types of projects include…

- Building the MEV research forum

- Conducting research (data gathering and synthesis throughout the program)

- Covering operational costs for hosting a conference with 50 top MEV researchers

The outcomes we hope to achieve are…

- A new forum dedicated to high expertise discussions, pushing the boundaries of decentralized governance and credibility.

- The forum will be a place where qualified voices and thought leaders stand out and the most important discussions get attention in a decentralized way.

- Radically amplify new ideas and technology.

The program will support experiments to see if…

- Using external data to measure social distance

- Considering academic and professional credentials in up and down voting

- Evaluating group thinking

- Using quadratic voting

- Cluster mapping & correlation discounting

Arbitrum Citizen Retro Funding

Program Manager: Zer8

Allocation Amounts: 107K ARB

Status: Completed & payout expected in December ’23

Description

This round supported outstanding individuals who have contributed to the DAO in a proactive way since the launch in May 2023. In simple terms, we want to reward people, not big organizations, who have helped our community grow by contributing to the DAO. We want to show that hard work should be rewarded fairly, with both recognition and ARB.

Why PL Funded This Program

During the execution of STIP, it became clear that many people were putting time and effort into improving the DAO. This program was designed to motivate others to step up by setting a precedent that work for Arbitrum will be rewarded. Many DAOs rely on free labor, some to the point of exploitation, as a normal part of their process.

By setting an example, Arbitrum can establish standards that lead the evolution of decentralized governance and technologies.

Alignment to Thank ARB Strategic Priorities

The types of projects include…

- Making the Arbitrum DAO more efficient

- Supporting long-term success for projects using Arbitrum

- Providing insights & analysis

The outcomes we hope to achieve are…

- Individuals are recognized by the community and awarded ARB for contributing to our growth in a meaningful way, establishing a precedent that meaningful work is fairly and appropriately rewarded.

The program will support experiments to see if…

- Rewarding individuals is more effective than rewarding organizations

- Quadratic funding is useful in distributing grants retroactively

Allo on Arbitrum Hackathon

Program Manager: Annalise (Buidlbox)

Allocation Amounts: 122.5K ARB

Status: Ends mid January

Description

This program is all about expanding what Gitcoin’s Allo protocol can do on the Arbitrum One network. New funding strategies, interfaces, and curation modules will be available for all 490 protocols on Arbitrum to use freely to fund what matters.

Why PL Funded This Program

W

Alignment to Thank ARB Strategic Priorities

The types of projects include…

- Our goal is to give the 490+ protocols building on Arbitrum new ways to fund their communities. We want these funding methods to be fully on-chain, transparent, and auditable.

The outcomes we hope to achieve are…

- Fresh funding strategies using the Allo protocol (like direct vs. quadratic approaches)

- Creating modules to clarify and simplify grant round managers’ work

- Customizing solutions using Gitcoin Passport

The program will support experiments to see if…

- Using innovative voting methods to decide how to distribute prizes for the hackathon is effective.

Arbitrum’s “Biggest Small Grants Yet”

Program Manager: Diana Chen

Allocation Amounts: 90K ARB

Status: Ends early March

Description

This program uses Jokerace to give out small grants in a fully on-chain and decentralized way.

Why PL Funded This Program

This experiment in fully decentralized decision making using Jokerace is managed by Diana Chen, host of the Rehashed podcast. As one of Jokerace’s first successful users, Diana built her podcast around Jokerace, using it to select the guests.

Alignment to Thank ARB Strategic Priorities

The types of projects include…

- The program will fund a community-run mini-grants initiative: the community uses Jokerace to select a grants council, which decides on the winning projects. Each week, 10,000 ARB will be disbursed to the 4 most deserving projects (2,500 ARB each), as decided by the ArbitrumDAO grants council.

The outcomes we hope to achieve are…

- Make governance fun! Achieve governance optimization by identifying and iteratively improving key capabilities to increase DAO performance and accountability

- Community run mini grants initiative

- Support projects building on Arbitrum

- A stronger, more engaged, and more valuable community that is working together toward a shared goal (funding worthwhile web3 projects)

The program will support experiments to see if…

- Making governance fun and properly incentivizing participation strengthens not only the bond a community member feels to Arbitrum but also the bond they feel to other community members. The compounding effect of this over time is a stronger, more engaged, and more valuable community that is working together toward a shared goal (funding worthwhile web3 projects).

Matching Match Pools on Gitcoin

Program Manager: Zer8

Allocation Amounts: 300K ARB

Status: Open until funds are used. Currently at 150K of 300K ARB.

Description

This program adds extra funds to the matching pool for quadratic funding rounds on Arbitrum. Top programs running on Arbitrum will be selected to receive additional funding.

Why PL Funded This Program

This program was funded to bring users to Arbitrum while also convincing Gitcoin’s Allo protocol and GrantsStack teams to prioritize deployment on Arbitrum. Gitcoin rounds generate gas fees and run on an open data substrate. Their Swiss Army knife of grant funding mechanisms is now available for any of Arbitrum’s 490+ protocols to use.

Alignment to Thank ARB Strategic Priorities

The types of projects include…

- Protocols building on Arbitrum to improve the

- Encouraging open-source dependencies on Arbitrum

- This includes rounds that were previously evaluated through Gitcoin rounds

The outcomes we hope to achieve are…

- Boost quadratic funding rounds which can generate gas fees on Arbitrum

- Encourage Arbitrum protocols to organize quadratic funding for their communities

- Convert more end users to Arbitrum

The program will support experiments to see if…

- There are new ways to improve matching pools

- We can identify unconventional marketing channels for Arbitrum, especially for Gitcoin to deploy on Arbitrum

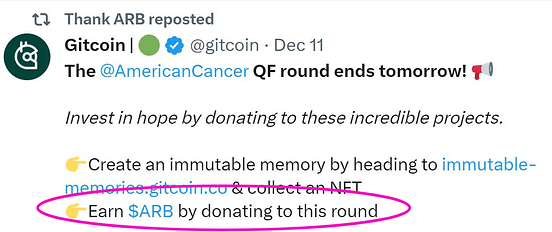

Not only did the American Cancer Society run their first quadratic funding round ever on Arbitrum, we also supported their round by offering tARB & ARB rewards for donating more than $10 to support their cause!

Open Data Community (ODC) Intelligence

Program Manager: Epowell

Allocation Amounts: 165K ARB

Status: Ends late March

Description

The Open Data Community has established a base of data analysts and data scientists hoping to bring about a new paradigm using open data. This program experiments with increasingly decentralized allocation methods, leading to new ways of sharing resources and insights.

Why PL Funded This Program

Open data plays an important role in pluralist governance frameworks. The DAO will be able to tap into a community of data analysts and scientists familiar with on-chain forensics to help us understand our ecosystem.

Alignment to Thank ARB Strategic Priorities

The types of projects include…

- Data science insights

- Data analysis regarding grant program and grantee evaluation

- Custom scoring mechanisms for grants

- Dashboards for infrastructure

The outcomes we hope to achieve are…

- Encourage the Arbitrum community to create and share tools that help Arbitrum grow.

- Expand the group of data analysts and scientists working with Arbitrum.

- Make community analysis more open and decentralized.

- Deliver practical open-source Sybil and grant analysis, including reviews and program management of the STIP analysis.

- Support critical ad-hoc data needs of the Arbitrum community.

The program will support experiments to see if…

- Exploring permissionless suggestion boxes

- Shaping on-chain mechanisms using Hats protocol

- Hosting on-chain hackathons using Allo voting

Arbitrum Co-Lab by RN DAO

Program Manager: Daniel

Allocation Amounts: 185K ARB

Status: Ends late March

Description

This program explores applying proven methods of deliberation, such as Citizens’ Assemblies and Sociocracy 3.0, in the Web3 space. It also explores AI-based tools to automate the facilitator’s cycle of asking, synthesizing, and echoing.

Why PL Funded This Program

RN DAO has a reputation for user focused design. Their venture studio model allows us to start the conversation around potential pathways for builders to kick start their idea, access a network of experts to help them grow, then potentially receive investment from Arbitrum DAO. This pathway will provide key learnings into what is needed to make Arbitrum a home for builders.

Alignment to Thank ARB Strategic Priorities

The types of projects include…

- Refining the interaction and usability of the Harmonica bot for large groups.

- Crafting and coding LLM prompts for distinct facilitation methods

- Testing these methods with participating communities

The outcomes we hope to achieve are…

- Build collaboration technology that enhances organizational, community, DAO operations and governance tools.

- Improved group decision making and responsive decision making ventures.

The program will support experiments to see if…

- Exploring new ways to apply traditional deliberation methods in the Web3 space is useful, viable and feasible.

- AI-based tools can help scale and automate the facilitator’s facilitation and discovery cycles.

Grantships by DAO Masons

Program Manager: Matt (DAO Masons)

Allocation Amounts: 154K ARB

Status: Ends late February

Description

This program emerged from a Jokerace methods contest regarding grant allocations. It involved building and dogfooding software that turns grant program allocation into a game the whole DAO can play, in which a series of algorithmic shifts determines the eligibility and amount of funding for each grant program.

Why PL Funded This Program

Not only did this team win the original Jokerace contest last summer, they are also the only project addressing both continued autonomy through fully on-chain subDAOs AND meta-allocation.

Alignment to Thank ARB Strategic Priorities

The types of projects include…

- Developing software

- Funding testing rounds to iteratively improve the algorithm

- Data analysis

The outcomes we hope to achieve are…

- Meta-allocation software is built and tested, making community-led allocation decisions possible

The program will support experiments to see if…

- The community can allocate well at the grant program level

- What kind of wallets, and how many, need to participate to produce credible outcomes

- The combination of Hats Protocol and MolochDAO v3 creates emergent effects, novel outcomes, and new insights.

Plurality GovBoost

Program Manager: Disruption Joe

Allocation Amounts: 540K ARB

Status: Completed & Paid

Description

This program included direct grants to increase Arbitrum DAO’s ability to effectively govern its resources.

Why PL Funded This Program

We funded this program to address critical governance needs that emerged in the DAO. These grants have specific deliverables, and payment is milestone-based.

Alignment to Thank ARB Strategic Priorities

The types of projects include…

- Open Source Observer - Grant Impact Evaluation Data

- Open Block Labs - STIP Monitoring

- Blockscience - Governance Needs Assessment

Image from bi-weekly STIP update post from Open Block Labs

The outcomes we hope to achieve are…

- Reduce the potential for fraud

- Increase delegate awareness of known governance best practices

- Inform on framework design

- Enable data-driven decision making

The program will support experiments to see if…

- Data-driven approaches to funding grants are effective

- Research can show how to avoid governance capture

Conclusion

While we still have a few items on the to-do list for January, this review should give delegates enough information to assess whether we should be offered more or less responsibility for our second milestone.

We are confident that we will address any open concerns. We are excited to unleash the potential of the Arbitrum ecosystem in 2024.