Retrospective Analysis: The Importance of Developing Incentive Goals

STIP (~Nov 2023 - Feb 2024), STIP.b (~Dec 2023 - March 2024), and LTIPP (~June 3 - Aug 1, 2024) have all now concluded, distributing roughly 100M ARB to participants in total. There has been extensive discussion and data analysis of these programs on the forum and in key working group calls, before, during, and after the campaigns.

Vending Machine has been reviewing the discussions around the campaigns, both here on the forum and on the weekly calls. Our takeaway is that one of the DAO's key goals for future campaigns is to define clear campaign objectives before a campaign begins, and to outline the KPIs that will measure them. There has already been great work in this vein, both during and just after STIP (for example here, here, here, here and here), and in and around LTIPP (for example here and here).

The goal of this brief report is to summarize key findings across the research conducted so far, focusing on the following:

- To give a high-level outline of how setup and organization, the design process, the parametrization process, operational management, and ongoing monitoring and optimization were handled throughout the campaigns.

- To outline, based on analyses already completed by OpenBlock (such as here, here, here and here), Blockworks, Team Lampros Labs DAO, and others, which objectives we believe these campaigns achieved and which KPIs were used to measure them. Note that in some cases the campaigns' objectives were not arrived at through a detailed, research-informed, iterative process, and they lacked clear definition and specificity as a result. This improved somewhat for LTIPP, which explicitly outlined goals such as user engagement, value generation, and protocol growth across various sectors.

Setup and Organization

It is not entirely clear how long the DAO had been discussing an incentive campaign. However, a call for an Arbitrum Incentives Program Working Group was made in August 2023, which argued that one was needed because "…of the difficult challenge of balancing the urgent desire to fuel network growth via incentives with the equally important need for responsible delegate oversight and community consensus." The post also gave a general framework for what a campaign proposal should include.

The associated AIP, the main launch point for STIP, was posted to the forum a month later. It outlined the campaign's goals as follows:

- Support Network Growth

- Experiment with Incentive Grants

- Find new models for grants and developer support that generate maximum activity on the Arbitrum network

- Create Incentive Data

The proposal also set a number of constraints on grantees. These covered data-reporting requirements, contract disclosures, a prohibition on using grants for DAO voting, and general good-behavior guidelines.

The forum discussion also shows that there were competing opinions within the DAO, and the urgency of launching incentives was stressed.

Design Process

Discussions among Arbitrum DAO members and the early working groups around STIP appear to have started around June-August 2023. The finalized AIP was posted in September, so it is reasonable to conclude that roughly 1-2 months of deliberation went into designing STIP.

There seems to be general consensus that both STIP.b and LTIPP were designed quickly, under pressure from various competing interests. Forum discussion suggests that around one month of deliberation went into designing each program, perhaps more for LTIPP. Both programs were heavily influenced by STIP's design.

LTIPP's design went through roughly 1-2 months of public iteration, and perhaps more in private. This was followed by several more months of deliberation, protocol discussion, and voting in the forum. Several Snapshot temperature-check votes took place during this period as the design was iterated on.

LTIPP applications required protocols to state clear grant objectives and KPIs, which is closer to a bottom-up approach. Many of these objectives aligned with LTIPP's higher-level goals, which included:

- User engagement

- Value generation

- Protocol growth

Parameterization Process

The total rewards for each campaign were proposed in forum posts and discussed among participants, then iterated on and approved through Snapshot votes. Initially, the consensus among forum participants was that 75M ARB was too much for STIP.

Here, it is argued that 75M was necessary. Both protocols and delegates were involved in this recommendation, and a comparison to Optimism's incentive spend was given. It is unclear whether there was a strict cap, but it was stated that "delegates will decide whether an amount is excessive given the scope."

After much forum discussion, a Snapshot temperature check offered three budget options: 25M, 50M, and 75M ARB. 50M was ultimately chosen. A similar vote on incentive size was held for LTIPP.

Operational Management

As noted in the introduction, the operational management of these campaigns, and the difficulties faced, have been discussed extensively. LTIPP made large strides in a positive direction by introducing smaller, more focused delegate committees. There has also been substantial progress in defining how the organizational structure should look in the future. As a rough, high-level summary, the operational timeline and the responsibilities of the various working groups were as follows:

- The Arbitrum Incentives Working Group kickstarted discussion around the launch of STIP.

- Program management responsibilities were transferred to StableLab in September 2023.

- Conversations between Arbitrum delegates, protocols, and the DAO continued throughout the campaigns.

- ARDC (Blockworks) had some data reporting responsibilities.

- OpenBlock also took on much of the data tracking and reporting.

- ArbGrants, together with OpenBlock, appears to have managed data reporting and tracking for LTIPP.

- During STIP, delegates had to manage many concurrent protocol applications and relationships. LTIPP instead introduced more focused committees, which appears to have improved operations significantly.

Monitoring and Optimization

Data monitoring and ensuring that funds were used appropriately were explicit priorities across all campaigns. Forum discussions make clear that many delegates felt overwhelmed by this responsibility. Reviewing hundreds of applications and tracking nearly as many protocols quickly became infeasible.

OpenBlock proposed a monitoring framework and offered to take on responsibility for STIP data monitoring. Throughout STIP, OpenBlock provided dashboards and bi-weekly updates. Protocols also published bi-weekly updates, and some published final reports. The same was true for STIP.b.

For LTIPP, protocols were given OpenBlock Labs' (OBL) Onboarding Checklist. They were required to comply with it for the entire program and for three months afterward. ArbGrants was spun up to facilitate this process, to lighten the operational burden on extremely overworked delegates, and to de-clutter the forum. Powerhouse appears to have been the main team behind this development.

Were the Objectives Achieved?

Given the objectives set out at the launch of the STIP and LTIPP campaigns, we can compare the analyses from OpenBlock, Blockworks (ARDC), Lampros, and others against these objectives to assess whether, and how well, they were achieved.

Recall that the stated objectives for STIP were:

- Support Network Growth

- Experiment with Incentive Grants

- Find new models for grants and developer support that generate maximum activity on the Arbitrum network

- Create Incentive Data

and the stated objectives for LTIPP were:

- User engagement

- Value generation

- Protocol growth

STIP

1. Support Network Growth (not achieved)

According to ARDC’s “STIP Analysis of Operations and Incentive Mechanisms” report:

“Overall, during the STIP, Arbitrum’s market share growth across major blockchains peaked at ~0% for TVL, ~5% for spot volume, ~12% for perp volume, and ~0% for loans outstanding. The market shares are currently at around September 2023 values, except for TVL, which is down from ~6% to ~4%.”

2. Experiment with Incentive Grants (achieved)

According to OpenBlock's STIP Incentive Efficacy Analysis, 30 protocols were allocated a share of 50 million ARB tokens, so the experiment itself was carried out.

3. Find new models for grants and developer support that generate maximum activity on the Arbitrum network (not achieved)

Although experimental data was generated, the post-campaign discussion on the forum from Tnorm and others makes clear that the models used for STIP needed an upgrade.

It can be argued, however, that the move from STIP to LTIPP involved experimenting with new models for grants and developer support. Even so, STIP, STIP.b, and LTIPP all used roughly the same template of distributing incentives to protocols.

4. Create Incentive Data (achieved)

This goal was clearly achieved, given the wealth of post-campaign analysis, including dashboards, more formal research reports, and community/forum discussion.

LTIPP

The findings here are drawn from Team Lampros Labs DAO's LTIPP Report and OpenBlock's LTIPP Efficacy Analysis reports.

1. User Engagement (mixed)

Summarizing the report from Team Lampros Labs: the Long-Term Incentive Pilot Program (LTIPP) on Arbitrum had varying impacts on user engagement across sectors, with the "Quests" sector showing the highest growth in Daily Active Users (DAU). Proprietary incentives were the most successful at driving TVL and engagement, particularly in "Options" and "Oracles." However, other sectors such as "Perpetual" and "Stables/Synthetics" saw limited growth or declines, highlighting the need for more targeted strategies. A significant share of ARB rewards (47.8%) was sold, with other actions such as liquidity provision and lending trailing behind. Unintended behaviors, such as circular transactions and holding rewards without further action, also undermined the program's effectiveness. Furthermore, users who moved across multiple protocols for short-term rewards showed low governance involvement, which poses challenges for long-term engagement.

Post-campaign user engagement was not an explicit goal. Still, many of the graphs in OpenBlock's LTIPP Efficacy Analysis show that most metrics fell after the program ended, with substantial capital outflows (except, interestingly, for stablecoins).

2. Value Generation (mixed)

This objective was poorly defined from the start. If value generation is taken to mean sequencer fees, the program increased this revenue during the campaign. After the campaign, however, fees have fallen below their pre-campaign levels. It is also unclear whether the additional revenue was driven by broader market factors, or whether it can be attributed to LTIPP directly.

Many of the data reports did not explicitly analyze "value generation," possibly because it lacked a clear definition. Under the sequencer-fee interpretation, the reports generally did not include data on it either. The sequencer fee trends described above are based on data from Artemis and Token Terminal.
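To make a question like this testable, a KPI needs a pre-campaign baseline, a campaign window, and a post-campaign window. It also ideally needs a comparison series to separate campaign effects from market-wide movements. The sketch below illustrates one way to compute these figures. The function names, window boundaries, and use of a control series (for example, a comparable metric on another network) as a rough difference-in-differences adjustment are all hypothetical choices. None of them is the method used by any of the cited reports, and the daily values are made up for illustration.

```python
from statistics import mean


def window_means(series, start, end):
    """Mean of a daily metric before, during, and after the campaign window [start, end)."""
    return mean(series[:start]), mean(series[start:end]), mean(series[end:])


def campaign_summary(treated, control, start, end):
    """Relative changes of a treated metric versus its pre-campaign baseline.

    - lift: campaign-window mean relative to baseline
    - post_vs_baseline: post-campaign mean relative to baseline
    - did_lift: lift minus the control series' lift over the same window,
      a rough difference-in-differences adjustment for market-wide moves
    """
    t_pre, t_during, t_post = window_means(treated, start, end)
    c_pre, c_during, _ = window_means(control, start, end)
    lift = t_during / t_pre - 1
    return {
        "lift": lift,
        "post_vs_baseline": t_post / t_pre - 1,
        "did_lift": lift - (c_during / c_pre - 1),
    }


# Hypothetical daily values: 4 days before, 4 days during, 4 days after.
treated = [10, 10, 10, 10, 14, 16, 15, 15, 8, 9, 9, 8]
control = [20, 20, 20, 20, 22, 22, 22, 22, 20, 20, 20, 20]

summary = campaign_summary(treated, control, start=4, end=8)
print({k: round(v, 3) for k, v in summary.items()})
```

In this toy series the metric rises 50% during the campaign but ends 15% below its baseline. That is the pattern described above for sequencer fees. Only about 40 percentage points of the lift remain once the control's market-wide rise is subtracted.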

3. Protocol Growth (mixed)

Incentivized DEXs showed superior growth in TVL and fees during LTIPP, outperforming what TVL and fee trends on other networks would have predicted. Yield protocols also saw boosts during the campaign.

However, most relevant protocol growth metrics fell after the campaign ended.

Some protocols, such as Compound, seemed to maintain their growth. However, this is mainly because they were onboarded as a result of LTIPP. Without the campaign they would have shown no growth at all, because they would never have been onboarded.

The objectives, and whether they were achieved, can be summarized in the following table:

| Objective | Achieved/Not Achieved |
|---|---|
| Support Network Growth (STIP) | Not achieved |
| Experiment with Incentive Grants (STIP) | Achieved |
| Find new models for grants and developer support that generate maximum activity on the Arbitrum network (STIP) | Not achieved |
| Create Incentive Data (STIP) | Achieved |
| User engagement (LTIPP) | Mixed |
| Value generation (LTIPP) | Mixed |
| Protocol growth (LTIPP) | Mixed |

Moving Forward

(from Arbitrum Incentives Retrospective Presentation)

Several factors suggest that the stated network objectives may have been misguided:

- forum discussion
- the mixed results against the stated campaign objectives
- the lack of standardization across post-campaign analyses
- the drop-off in KPIs after the campaigns ended

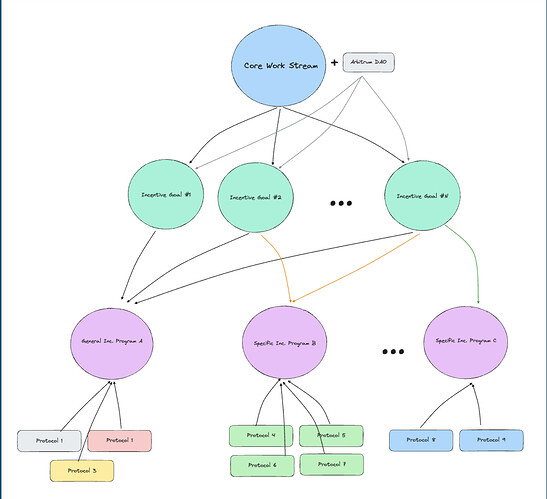

Going forward, more time and effort will need to go into developing campaign incentive goals. As has been observed, a campaign's incentive goals shape its design. The design shapes user behavior, and user behavior ultimately shapes the campaign's impact on the ecosystem as a whole.

Clearly developed goals will also make it easier for research labs to analyze a campaign's success, both during and after the campaign. This in turn will allow the Arbitrum DAO, key working groups, and delegates to evaluate campaigns more effectively.
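As an illustration of fixing goals up front, each objective could be written down before launch together with its KPIs and pass thresholds. "Achieved," "mixed," or "not achieved" then follows mechanically from the measured results, instead of being judged after the fact. The sketch below is a hypothetical structure. The class and function names, the two thresholds (a minimum campaign lift and a minimum post-campaign level relative to baseline), and the grading rule are all assumptions. It is not how the table of results in this post was produced.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class KPI:
    """A metric with thresholds agreed before launch (hypothetical fields)."""
    metric: str
    target_lift: float       # minimum relative increase during the campaign
    target_retention: float  # minimum post-campaign level relative to baseline


@dataclass
class Objective:
    name: str
    kpis: list[KPI] = field(default_factory=list)


def grade_kpi(kpi, lift, post_vs_baseline):
    """A KPI is 'achieved' if both thresholds are met, 'mixed' if only one is."""
    hits = (lift >= kpi.target_lift, post_vs_baseline >= kpi.target_retention)
    if all(hits):
        return "achieved"
    return "mixed" if any(hits) else "not achieved"


def grade_objective(objective, results):
    """results maps a metric name to its measured (lift, post_vs_baseline) pair."""
    if not objective.kpis:
        raise ValueError(f"{objective.name!r} has no KPIs defined before launch")
    grades = {grade_kpi(k, *results[k.metric]) for k in objective.kpis}
    if grades == {"achieved"}:
        return "achieved"
    if grades == {"not achieved"}:
        return "not achieved"
    return "mixed"


# Hypothetical pre-launch definition: sequencer fees should rise at least 20%
# during the campaign and not end below their pre-campaign baseline.
value_generation = Objective("Value generation", [KPI("sequencer_fees", 0.20, 0.0)])
print(grade_objective(value_generation, {"sequencer_fees": (0.50, -0.15)}))
```

Requiring at least one KPI per objective reflects the point above: an objective that cannot be graded before launch cannot be evaluated after the campaign ends either.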

Disclosure

Vending Machine was not directly involved in STIP or LTIPP. This analysis is based on currently available public reports. If anything in this summary report is inaccurate or misrepresented, we invite the community to reach out so we can update this post and improve its accuracy.

Resources

STIP original program outline

STIP.b original program outline

LTIPP original program outline

Proposal to Improve Future Incentive Programs - teddy-notional

Discussion: Reframing Incentives on Arbitrum

Serious People: Proposed KPIs for Arbitrum Grant Programs (LTIPP)

A New Thesis for Network-Level Incentives Programs

Learnings from STIP: Community Interview Summaries and Notes

[RFC] Thoughts on the End-Game Perpetual Incentives Program

Memories of a (grantor) cow: thoughts about incentives program and what could be next

GMX Bi-weekly STIP.b Reports

LTIPP Application Template

OpenBlock Labs STIP Efficacy + Sybil Analysis (2/24)

OpenBlock’s STIP Incentive Efficacy Analysis

OpenBlock realtime dashboards

OpenBlock Arbitrum LTIPP Efficacy Analysis

ARDC Research Deliverables

STIP Analysis of Operations and Incentive Mechanisms

Team Lampros Labs DAO - LTIPP Research Bounty Reports

Arbitrum Incentives Program - Working Group

Tnorm reply on original STIP proposal

Tnorm on STIP ARB Allocation

Comparison to Optimism’s Incentive Spend

50M ARB STIP spend snapshot vote result

StableLab Engagement

ArbGrants

Arbitrum Incentives Retrospective Presentation